The VCF Midwest 2024 Report

Hey, Chicago, whaddya say—are you ready for some old computers today? Here, in Userlandia, it’s time for a new and improved VCF Midwest.

Oh Vintage Computer Festival Midwest, how you’ve grown! After nineteen years of hard work you’re today’s premier destination for connecting people with yesterday’s tech. But after last year’s blockbuster event a clear consensus emerged across multiple postmortem reports: the show had grown beyond the capacity of Elmhurst’s Waterford Banquet and Conference Center.

Of course, pointing out a problem is easier than solving it. But Chicago Classic Computing heard the feedback loud and clear, and on March 8th 2024 they announced VCF Midwest’s move to a new home: the Renaissance Hotel and Convention Center in Schaumburg, Illinois. To call this an upgrade is an understatement; it’s like replacing a 486 PC with a Pentium III. Now VCF Midwest is one of the many mid-sized conventions, trade shows, and expositions that live in the Schaumburg Convention Center and its 97,000 square foot main convention hall. The Waterford's main ballroom was barely 12,000 square feet, and even when you add in 8,000 square feet of hallways and lobby space, that's still barely one fifth the size. And if that’s not enough, there’s 50,000 more square feet available in the Schaumburg ballroom and other conference rooms.

Those are some big numbers, but would the new venue actually work? En route to Chicago, I pondered the consequences of this change—because there are always consequences. Sure, a larger venue can solve logistical problems like floor space, overcrowding, or parking. But what could go wrong? Did staff bite off more than they could chew? Would it change the character of the show? The only way to find out is to dive on in to the wonderful world of the new VCF Midwest.

Exhibition Excursion

Whether they were exhibiting, attending, or observing from afar, most everyone I talked to had one question: how would the new venue change the show? And when certain viewers with millions of subscribers say “Hey, I’m really looking forward to your video on this year’s show!” well… no pressure! But I love a challenge. So here's what you've all been waiting for: exhaustively detailed coverage of a wonderfully exhausting event.

The obvious impact of all that extra floor space was a bigger list of exhibitors and vendors. This year’s lineup featured 197 distinct booths, over 60% more than last year. And all the available square footage meant many exhibitors could use multiple tables to set up some pretty impressive displays… that is, if they could afford them. VCF tables used to be free. Now they're fifty bucks each, with extra fees for four or more. I actually think this is good: VCF tables have costs in terms of setup, power delivery, and so on. Having to pay for them means people are less likely to no-show. It also keeps people from requesting more tables than they actually need.

But any attendee questions about all this newfound space were answered after stepping into the main exhibit hall. A seemingly endless ocean of tables covered in computers of all kinds created a bountiful bazaar of all things retro. At first glance it might seem like tables are assigned randomly, but after you walk the aisles and check the map, some patterns emerge. There were no hard and fast rules that, say, each platform's exhibits must be bunched together, but certain zones seemed to be a little more Commodore, Apple, or Atari. Celebrity YouTubers were in the southeast corner, and large vendors were mostly along the north wall. Last year, the big iron exhibits were all in one place. But arranging a convention hall gets tougher the bigger it is. Arranging the exhibitors to accommodate lines and minimize traffic jams is like a real-world game of Tetris… except the tables don't disappear. There are practical needs like electricity and political ones like people who want their tables placed next to each other. The result was a bunch of C- and I-shaped islands where crowds could gather at tables without obstructing foot traffic.

With a bigger venue comes more room for variety, and VCF had plenty of that to begin with. A CRT light show of epic proportions by Aron Hoekstra is powered by a Cromemco Dazzler, the first color graphics board for S-100 computers. If you found a Dazzler of your own and wanted to build a system around it, Jeffrey Wilson is here to help with his S100 Projects featuring an ATX S100 kit. A flight of retro PCs and Macs is brought together at Kokoscript’s system sampler, with this DoCoMo Post Pet giving me real D-Terminal vibes. Multiple first-of-their-kind laptops from Kaypro through IBM are gathered at Ben Gennaria’s, with the coolest one being this working Atari Stacy. It’s rare to see one in such great shape. Did you know that Epson once made entire computers just as accessories for printers? It’s true! Steve Hatle had a QX-10 on display, and Brian Johnson had a QX-16 and even a portable PX-8.

Extreme specificity like that is catnip to me, and many exhibitors were more than happy to put extremely specific themes on display. MaidenAriana from RetroAlcove made a beautiful flowery pink table for her tribute to Wing Commander, featuring a playable period setup of the game. CLIMagic boasts that anyone can become a UNIX wizard with a little guidance from a master mage. Quinn’s display of vintage logic analyzers lets you probe and prod with the tools of yesterday to see how hardware problems were diagnosed way back when. My award for the most specific niche goes to Isaac Z. Raske, whose Computing in the Dark display detailed a history of assistive interface technology. Braille keyboards, speaking assistants, and touch devices show how those without sight can interact with computers.

Kyle Gagnon’s collection of digital cameras spoke to me as an enthusiast of camera gear. Covering a decade of innovation in consumer digital imaging, these cameras represent a great period of experimentation. Of particular interest are the ones with tethered and pivoting lenses, a forgotten fancy for photographers freed from film. This exhibit paired well with its neighbor, the MDCon Road Show, and its traveling display of Sony MiniDisc players, media, and ephemera. You've probably heard of audio MDs, but the Road Show has Data MDs and even Handycam Video MDs.

If PCI and ISA are too internal for you, Kevin Moonlight’s PCMCIA exhibit featured unusual PC Card expansions to let portable computers do things that were impractical for desktops. OS/2 And You, presented by Joshua Conboy, returns with more boxed OS/2 software and a new slate of PCs running IBM’s ill-fated operating system, including this IBM PC Server 310. It’s one of IBM’s rare transitional platforms where you could option both PCI and Micro Channel.

Then there was section P, which was full of Atari goodness. The Suburban Chicago Atarians are back, taking advantage of more space. Next door was John Buell, with even more Atari gear. Though FujiNet is available on other platforms, they never forget their Atari roots. Scott K’s networked Atari Jaguars let you try multiplayer on a system you might’ve missed. Mixing it up is Slor’s Atari and Friends, showing that yes, Atari and Sinclair can be friends. If you’re interested in possible upgrades for your old Atari PCs, Stephen Anderson had several modified machines on hand. Other Atari fans could be seen across the hall, like the Atari Guy and his table full of Atari computers.

Although minicomputers and workstations weren’t grouped together as closely as last year, there was plenty of old enterprise gear, like Hooloovoo’s DEC-powered plotting. You don’t often see HP minicomputers out in the wild, but Mike Loewen’s HP 2108 was punching paper tape souvenirs for everyone to take home. AT&T systems are another rarity, and John Orwin’s collection of AT&T boxes includes Unix PCs and a 6300WGS. There were fewer SGI and Sun setups than last year, but the two most popular Unix workstation makers still had some rep. Anthony Bolan returned with a colorful display of SGI workstations, emphasizing multi-seat applications running on one system. And if that’s too graphical for your tastes, Beehive Bit Bunker has actual Cold War-era nuclear instrumentation. This Nuclear Data Inc. spectral analysis system measured gamma radiation and other forms of radioactivity. Local connection: NDI HQ was in Schaumburg! Or play some Zork on an AS/400 that's also running some businessware on the side thanks to Michael Mason and David Kudler. Just don’t tell their boss.

As always, Commodore commanded a significant presence on the show floor (literally: witness the mega-sized SX-64 Ultimax). Remember Tron? Jessica Petersen has a four-port joystick adapter for her Tron-esque Deluxe Light Cycle Game, and if I'd had more time, and three friends with me, I might’ve taken a turn myself. The Style demo group had a massive four-table end-cap with many Commodore machines running hardware-breaking demos and unique games. Steamed Hams, at this time of year, at this time of day, in this part of the country, running entirely on a VIC-20? Yes, and not only can you see it, you can try it! Cassettes fell off quickly here in the US as a primary storage medium, but tape still had its day overseas, and Commodoreman’s collection of datasettes let you press play on all different kinds of tapes. A collection of history at Mike Shartiag’s table consisted of some interesting PETs and a Commodore cash register. Even more PETs were at Ethan Dicks’ corner running an enhanced version of the FLOPTRAN BASIC compiler. Hot-rod Amigas like Eric Wolfe’s PiStorm 1200 running AmiKit push the system’s limits in both hardware and software. And Mr Great & Booger Reborn brought demos along with a mixture of all kinds of Amiga ephemera.

For some people VCF is the only opportunity to touch tech that wasn’t popular on American shores. A ZX Spectrum Next and other systems were running the Lantern text adventure engine at Evan Wright’s table. If you don’t know which side you would’ve picked in the British Micro Wars, Chris Roth can help you decide. You can try a BBC Micro, ZX Spectrum, or watch a 1982 episode of The Computer Programme to educate yourself about computing across the pond. For a different kind of British computer, check out John Ball’s excellent timeline of Apricot PCs. His array of Apricot’s interesting IBM-incompatible MS-DOS PCs (you heard that right) includes an Apricot Portable and the stylish compact F1. Plus, there’s a rotating lighted sign!

Japanese computing has a fascinating history, and Noah Burney’s MSX Around the World shows the universe of MSX computers in Asia and Europe. With eight models to try you’ll see just how much variation a standard could accommodate. Across the way was NEC Retro, returning to the show with a new slate of Japanese domestic PCs and desktops with integrated Famicoms.

Sometimes a table is just a collection of computers doing interesting things. Where else can you play a game of Rogue or check out a Brookhaven Instruments digital correlator but at Evan Gildow’s booth? When there’s too many computers but not enough table space, why not copy Dillon Tracy and build some shelves to let you use five different computers at once? And don’t forget Andy Geppert’s Interactive Core Memory, with its new 6502-powered neon pixel core simulator.

If you love Texas Instruments and Tandy, VCF has plenty for you too. Jim Mazurak’s maxed-out TI-99 combines its many expansions to transform this micro computer into a mega computer. Trash-80s are treasure at Neil’s Computer Service. I went cuckoo for CoCos at Ken Waters’ table of all things Tandy. Get up close to the processor powering these Tandys and more at Chris and Gavin Tersteeg’s spotlight on the Z80. And Ken Van Mersbergen’s table had a little bit of everything, with Tandy PCs and software and a spectacular tribute to the Coleco Adam. Terminals, robotics, and other obscurities team up at the fearsome foursome of Forgotten Machines, Nevets01, Josh Bensadon, and Dave Runkle. When you’re done learning to program the COSMAC Elf, you can explore the RCA CPU that makes it tick. Flip some switches to control an Altair-powered robot, then try to puzzle out the Convergent Technologies AWS. I don't know if this grouping was intentional or a coincidence, but it was excellent.

Several museums sent exhibits. Hailing from Columbus, Ohio, the Sprawl Technology Library preserves old media, which includes games like Balance of Power. System Source was back too, this time with their IBM 1130 from VCF East and their own tag sale of used equipment. Midwest Classic Video Game Museum returns with a feature presentation about the APF Imagination Machine and their AndroMan robot. And the Museum of Batch and Time-Share Computer History brought perhaps the biggest beast of the show: an IBM System/36 with a quad-pack hard drive. The transparent disk covers revealed the delicate dance of heads across platters big enough to carry an extra-large tavern pizza.

Other conventions had tables to promote their own events. VCF East says that if you had a great time at Midwest, try New Jersey for a springtime shindig. West Coast more your style? VCF Godfather Sellam Ismail represented California’s VCF scene with pieces of West Coast computer culture. Midwest Gaming Classic says there’s more retro fun up in Milwaukee at their massive celebration in April. And members of the Wisconsin Computer Club brought some cave-aged pieces ripe for the playing.

One thing I’d like to see more of is people doing archiving for old media. I know that’s tough to do live, but I appreciate folks like Chris Simmons giving it a go with his ESDI hard disk rescue setup. I know there were folks doing the same with VHS and LaserDisc setups—maybe people could work together at future events to explore more archiving opportunities.

We’re in a boom time for modern retro computers, whether they’re FPGA recreations of old machines or new platforms inspired by the classics. Mega65 systems were back at Dan Sanderson’s table, with the batch 3 revisions currently shipping worldwide. Tex-Elec and the 8-Bit Guy had demos of the Commander X16, while Foenix Retro Systems paraded the latest updates to their F256K platform. Next to the Foenix booth were two developers for the F256: Matt Massie discussing C game programming and Micah Bly showing off a port of NitrOS-9. Community support for these platforms is growing every day, and demoing at conventions is crucial to raising awareness by giving curious folks a taste before committing the hundreds of dollars it takes to buy one.

I appreciate when people create novel exhibits of commodity hardware that runs MS-DOS or CP/M. It keeps things from feeling too same-y. Sierra Back-OnLINE has a library of classic Sierra games ready for you to play on different PC clones. These machines weren’t special back in the day, except that Compaq LCD portable, but attrition has turned them into collectibles. We joke about the Osborne effect, but Adam Osborne’s computer company got some love from several tables, like this one called Osborne Computer Group! Other luggables joined in at Nicholas Mailloux’s table, where I basked in the amber glory of an IBM 5155 Portable PC. Steve Maves’ timeline of laptop evolution chronicled the progress of shrinking computers. Interested in the early attempts to bring graphical interfaces to the PC? Regret_the_van’s Rise of the GUIs shows what might have been with a buffet of visual interfaces, each offering a different interpretation of windows, icons, and pointers.

Apple fans had plenty to love in this year’s crop of classic Macintosh, Apple II, and Lisa exhibits. Scott Barret and the Pittsburgh Classic Mac Lab recreated an early ‘90s classroom computer lab full of KidPix, Print Shop, and Oregon Trail. DanaDoesStuff’s monstrous Apple Network Server was live all weekend, flanked by old towers and PowerBooks. Video conferencing and collaborative drawing were excellent multi-user activities powered by Peter R’s network of classic Macs. Ryan Burke’s 40 Years of Macintosh returns with a bigger lineup of classic Macs, but the star is this mountain of a Macintosh Plus with an astounding quadruple SCSI hard drive chain. Who says you couldn’t buy a tower Mac back then? Exhibiting for the first time at Midwest is Kate the Macintosh Librarian, and her mascot Maccy made friends with everybody stopping by. Mac enthusiast friends of the show were around, starting with Action Retro and his lineup of greatest hits. Play a round of Creepy Castle on Ron’s Computer Vids’ breakout Macintosh SE, powered by the boards he had for sale. Experience the latest in HyperCard stacks with Eric’s Edge and his home-brew Adventure stack. And Steve of Mac84 brought a very special guest: his color LCD SE/30. Luckily his mousepads arrived just in time to be put on sale. SIT happens!

Many popular exhibits were multimedia marvels that demonstrated how computers were behind some of our favorite memories. Frank Palazzo and Evan Allen had restored a Cyberamics control system, the kind that ran the animatronic stage shows at Chuck E. Cheese. These tapes and cards turned a cigar-chomping rat into a rock-n-roll star. Beefy Betamax cameras were the bread and butter of the broadcast industry, and you could try them yourself at Kyle’s Digital Lab. Sorry about catching you when your batteries were low, Kyle; hope you felt better! Genericable added new services to their homegrown television network, like the ability to call in an emergency weather alert. An interactive DeLorean time stack at Ted N’s table lets your inner Marty McFly go back in time.

Vintage synthesizers and computer audio are big hits at VCF, and the opening act is The OPL Archive. A display of PCs, sound cards, and MIDI boxes teamed up with classic synths to form an FM synthesizer supergroup. Next door was Skye Janis with more FM history, including a Mac SE driving Yamaha synths to produce some incredible music. Avery Grade’s IIGS stack is ready to jam with some sampled digital audio, and their next door neighbor Bea Thurman at the Sampling Apple rocked a Greengate DS:3 Apple II synthesizer. But something that was new to me was this Blaster PC at NightWolfX3’s table. It’s a TigerDirect barebones PC building kit with a motherboard that integrated a Sound Blaster Live. Tiger didn’t sell these for very long, which makes them a rare bounty for Creative collectors. The folks displaying it lamented that they were missing drivers for things like the remote control, but they were in luck because a random passerby mentioned that he had a driver disk! When he got home, he uploaded the contents to archive.org. Now the remote is fully operational. Only at VCF.

These computer conventions are great for networking, and I’m not just talking about the human kind. Computer networks, phone networks, and radio networks are tons of fun in person. Leading the charge was the VCF Midwest BBS, returning this year with more terminal types to show how different platforms display the same board. ProtoWeb and Darren Young’s Nabu Retroweb let those who weren’t around for the ancient web or TV computers get a taste of old-timey networks. Also returning are the Atari BBS Gurus, whose Atari-centric presentation lets you peek into the life of a terminally online ST user. Ronald Coon Junior’s Never Land BBS is still running after 35 years, and you could post live from the show floor. Do you miss QuantumLink? Check out the Commodore precursor to America Online at QLReloaded. Six modems stuffed in an Apple IIe powered Steve K’s recreation of DiversiDial, a dial-in chat service that worked like CompuServe chat for a fraction of the cost. If you’re interested in networking that’s a little more local, Old World Computing had a classic AirPort setup featuring all the colors of the iBook rainbow. And John Mark Mobley’s TI Silent 700 terminal challenges you to level up your Linux skills by connecting to a remote bash shell using a paper teletype.

ShadyTel Midwest’s central office serves a key role at VCF Midwest by providing phone and network services to exhibitors. But this year brings a new connection: cell phones! A collection of vintage Motorola cell phones lets you relive the glory days of yuppie status symbols. Other telephony tricks include Thomas Major’s Time-Division Multiplexing System, a small-scale implementation of a long-distance serial link. And if you don’t need wires where you’re going, ByteShift can show you how to transmit your data over long distances using ham radio packet communication.

More tables this year featured people promoting their YouTube channels, blogs, and podcasts. Maybe some day I’ll be among them! There’s something fun about finding recommendations in person, especially when they’re backed by interesting exhibits. CityXen put up a demo of the C64 game Whackadoodle, with some controllers from RetroGameBoyz that were utterly unique. This Heroes of Might and Magic Necropolis model isn’t just for show: the Canadian Computer Collector built an entire PC inside of it. I gave five dollars to SavvySage for a copy of QuarkImmedia, and now that’s a purchase I’ll never regret! If you wanted a live podcast experience, the hosts of the ANTICS and Floppy Days podcasts were available for some back and forth. And June’s Nybbles & Bytes promoted the Commodore 128's multi-display power with a psychedelic game of Drunken Snake.

Now after this whirlwind tour you might be thinking “wow, that’s an amazing amount of stuff,” but that was only two thirds of the tables! I haven’t even gotten to the vendors and VIP placements yet. But having all of this stuff doesn’t mean squat if you can’t see or reach any of it. And thankfully the greatly expanded expo center meant lots of space for crowds to flow. Even the VIP tables had lots of room for folks wanting a selfie or face time with a high-profile YouTuber without interfering with general traffic. I’m not sure how things would’ve worked if LGR had been there (he had the longest lines in the past), but there was enough room to plan around it if he had been.

One area that could use improvement for both exhibitors and vendors is the differentiation between tables. Some exhibitors seemed to blend together, and it could be hard to tell where one exhibit ends and the next begins, especially when similar themes are grouped together. The best way for an exhibitor to differentiate their table is a custom tablecloth and a stand-up banner: wise investments if you’re regularly tabling at shows like these. First-timers might try the thrifty option of tabletop sign holders from an office supply store. But the event could help too. Alternating tablecloth colors or adding small gaps between each exhibit wouldn’t hurt. Those tiny green table tents with names and numbers were hard to see or sometimes missing entirely. Many shows hang simple foam core signs with the booth’s name and number on the front of the table. That’d definitely help visitors see who’s who in the ocean of tables.

And if you didn’t see your exhibit in this video, please accept my deepest apologies. This show was so massive that I spent most of Saturday filming as many booths as I could, and I know I missed a few. Some I overlooked because I misidentified two or three adjacent tables as a single exhibit. And then there were the ones I missed because I thought “Oh, I’ll get them tomorrow” and it turned out that they sold out of all their product or they bailed early on Sunday. And most shameful of all, I accidentally deleted a subfolder of clips of some VIP tables. What’s worse is that I did it while sorting and backing up other footage! I wanted to highlight that really cool fansubbing setup by Retrobits, Adrian’s C64s, and B-roll of the other guest tables, and now I can’t. I’ve learned a lesson about immediately duplicating footage SSDs that I won’t soon forget. Can you ever forgive me?

Venue Variables

Outgrowing the Waterford’s ballroom wasn’t the only reason VCF needed a new venue. Highway access, parking, accommodations, and food service matter to attendees, and what worked for a small show (or smaller, anyway; this isn't the first time they've needed to find a new home) no longer satisfied a growing VCF. Fortunately, the Renaissance is a more suitable location. It’s located twenty minutes from O’Hare airport at the crossroads of I-90 and I-290. And you'll definitely have an easier time parking, too: the Ren has more than four times as many parking spaces as the Waterford. And that’s with the Ren hosting a very swanky wedding on the same weekend, though I did notice some wedding guests hunting for spots around lunchtime. Sorry, folks; hope the honeymoon went great. You might’ve had a long trek from the farther-flung lots, but it beats last year’s overflow arrangements.

The Ren is also a massive improvement in terms of lodging. I didn't stay there myself (I stayed at my buddy Mark’s place), but I have no qualms about booking a room there because I've stayed in other Rens in the past, and it's a consistent brand. The general ambiance outside the panel room and expo hall is that of a modern four-star business hotel. This is in contrast to the Waterford and Clarion Inn, whose decor… well… it's a little too on the nose for an exposition of computer hardware from decades past. Now, the Waterford’s retro style was part of the charm of VCFs past, but it's hard to argue with a better class of service. I know several people who were jumping with joy when they saw elevators to the guest rooms, or who would’ve been if they hadn’t been carrying so much stuff, anyway. Exhibitors wheeling stuff through cramped hallways is now a thing of the past thanks to loading docks! And if you couldn’t secure a room at the Ren, there were official overflow hotels and other lodging in the area.

A better class of hotel also brings a better class of food service. The Waterford’s basic cafe and rolling snack cart was inadequate for feeding hundreds of hungry hungry hackers. The Ren does have a cafe for snacks and sandwiches, but for something more substantial the Schaumburg Public House offers actual dinner at hotel restaurant prices. When you want to kick back with some friends for a post-show drink, there’s a full service bar and lounge. And if none of that tickles your tastebuds or fits in your budget, there are plenty of restaurants and fast food joints nearby. You don’t even need to drive: the Village of Schaumburg shuttle will bring you to the Woodfield Mall and other local destinations. And for those who can’t wander too far from their tables, there's a concession stand in the expo hall, though I wouldn’t exactly call it gourmet. It’s your standard convention center fare (burgers, hot dogs, chicken tenders, and personal pizzas) with convention center prices to match.

Another subtle but smart idea was a room set aside for chill space. Nestled between the expo hall and the main panel room, it was perfectly positioned for a break from the show floor. Pull up a seat to recharge your batteries (literal or figurative) and keep the chill vibes flowing. Given the size of the crowd it’s good to have some getaway space. Hard numbers aren't available (VCF is free, so tracking attendance is difficult), but I think it’s safe to say that the show’s year-over-year growth continued in 2024. And thanks to all the extra space, the presumably larger crowd wasn’t as stressful as last year. It was refreshing to walk around the hallways and lobbies of the Ren without needing to dodge and weave around endless waves of bodies. Inside the expo hall the wide aisles and buffer spaces meant you could cruise from one table to another without bumping into people trying to buy something or playing a demo. There’s finally enough room for the crowds of today and tomorrow.

Snags and glitches cropped up, of course. A group of tables lost electricity on Saturday morning, which was an issue for people like Genericable where every minute without power is a minute that visitors couldn't interact with old WeatherStars. I think they were down for an hour or two before things were fully working again. The only crowd control problem I observed was the initial rush for T-shirts and merch at the VCF show tables. Just like last year the show tables were located by the main entrance, and the line snaked right through the doors and around the main hallway. If you didn’t know better, you’d think this was the line to get in, when in reality you could just walk by it if you didn’t want some merch—just like last year. It did clear up after a few hours, and having the merch booth by the main entrance is a boost to visibility, but there ought to be a proper queue set up.

And I might be in the minority on this, but I’d still like some form of printed information guide. There was a giant sign by the main entrance with a map and table directory, and I’m sure people were expected to take pictures of it with their phones. But sometimes phones are busy doing things! I’m not asking for 27 8x10 color glossy photographs with circles and arrows and a paragraph on the back of each one. A simple black-and-white folded sheet with a map on the inside and a legend on the back would be super helpful. If there was one and I missed it, I offer my sincerest apologies to the show. And yes, I know the website’s a thing, and while that faux-QuantumLink design looks cool, it’s not very phone friendly. Combine that with occasional signal issues in a busy hall and you can understand why a low-tech option might be useful.

Lastly, the hallway between the panel room and the expo hall felt a bit bare at times. Aside from the classified whiteboards and the VCF banner at one door, you’d be hard pressed to tell what kind of show was going on. I obviously prefer the new venue, but part of VCF Midwest’s charm is that it feels like a big party. When the show monopolized the Waterford that vibe was easy to see, because people were everywhere and little diversions like the LAN were situated in hallways and lobbies. At the Ren the party was still going on; it just moved to lounges, lobbies, and restaurants. Things are just… a little more spread out. Depending on the show’s agreements with the venue, they might not have the ability to add more set-dressing to the open areas. But if they could find room in the budget for bigger, bolder signage at the alternate entrances to the expo hall or the conference rooms, that’d help liven things up. But really, that'd just be a nice extra. Overall the new venue is such a massive improvement that it’s hard to find nits to pick. Well done.

Vendors, Makers, and Traders

VCF Midwest is a good place for a temperature check of the retro and vintage computing economy. With the expansion in floor space there are even more ways to trade your hard-earned cash for a touch of technological treasure. Need a specific system or part? The many professional resellers and amateur collectors unloading surplus or refurbished equipment might have what you need. Or you could hit up the numerous makers and creators selling and demonstrating new hardware and software solutions.

At the Waterford, the vendors were mostly positioned in the big hallway and lobby outside the ballroom. Note the ‘mostly’: there were always some mixed in with the exhibits. But at the Ren’s expo hall those divisions are gone. Now vendors of all sizes are mixed in amongst the regular exhibitors. The closest equivalent to the old hallway might’ve been the north wall where returning large-scale vendors set up shop. Jeff’s Vintage Electronics, whose parts table is a familiar sight to many VCF attendees, anchored the row. They were joined by Bonus Life Computers’ array of refurbished PCs and accessories. Ecotech Computer Solutions covered their tables with software that you could buy along with a PC to use it on. Rounding out the wall was Ecotronix E-Waste, selling recovered PCs and parts to new owners to keep them out of the scrap heap.

Long lines of tables along walls weren’t the only way to accommodate sellers with lots of wares. All that extra space allowed for numerous end cap and corner spots. Take Eric Moore—AKA The Happy Computer Guy—and his gargantuan IBM system shop. His five-table corner was covered with IBM keyboards, terminals, and the only DataMaster I’ve seen in person. I don’t know if anyone took that PC precursor home, but they should’ve! And the record for most CRT displays goes to the E-Waste Mates, where after a round of Smash on N64 you can bask in the glow of a five-by-five TV wall. Digital Thrift’s numerous shelves towered over their end cap full of Apple gear, big box games, and their own share of CRTs. Those hunting for parts for vintage builds would do well at Uncle Mike Retro’s acres of video cards, chips, and motherboards. The prices at Crazy Aron’s PC Parts are so low he’s practically giving this stuff away!

Vendors big and small can set themselves apart by combining their sales with some kind of exhibition. It’s nice to show that the machines you’re selling are functional, but a separate demo PC or a game console is a great way to attract customers. Quarex’s table of TI-99 goodness leads you right to buckets of big-box games, with lots of PC classics that were snatched up quickly. Same with the Windy City TI-99 Club, who piled their table high with items for Texas Instruments computers. Matt’s Cool Old Stuff employed a similar strategy—have a Power Mac G3 and other interactives on one table, and a pile of parts and cards on the other. When you were finished shopping at Joseph Turner’s massive booth you could enjoy a round of Mega Man on a Lightning McQueen TV. If you were hungry for a Sun pizza box, Paul Rak had a stack hot and ready for take out. Then again, a static display could be just as interesting—this massive old hard drive at Ben Armstrong’s table certainly attracted its share of admirers.

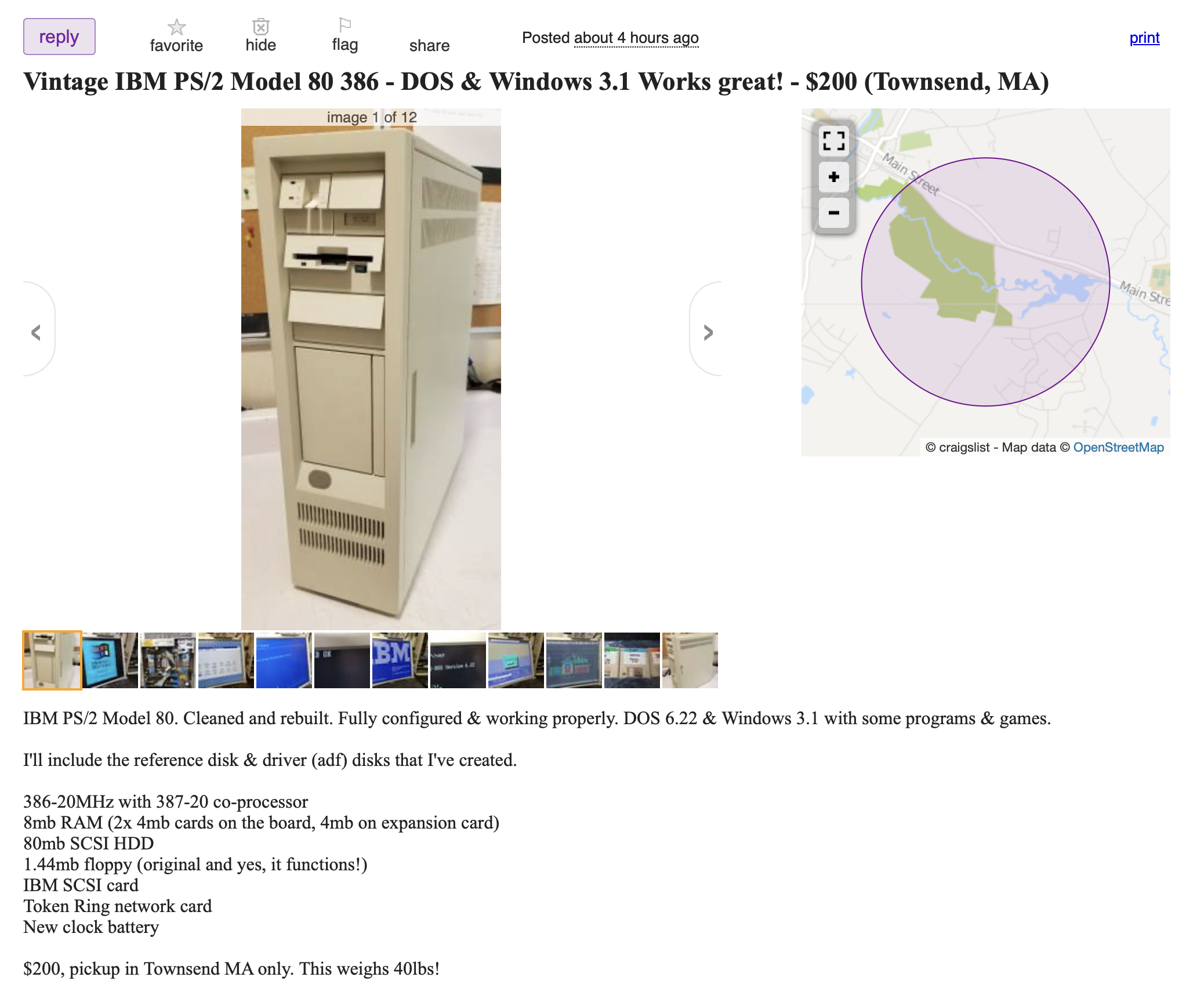

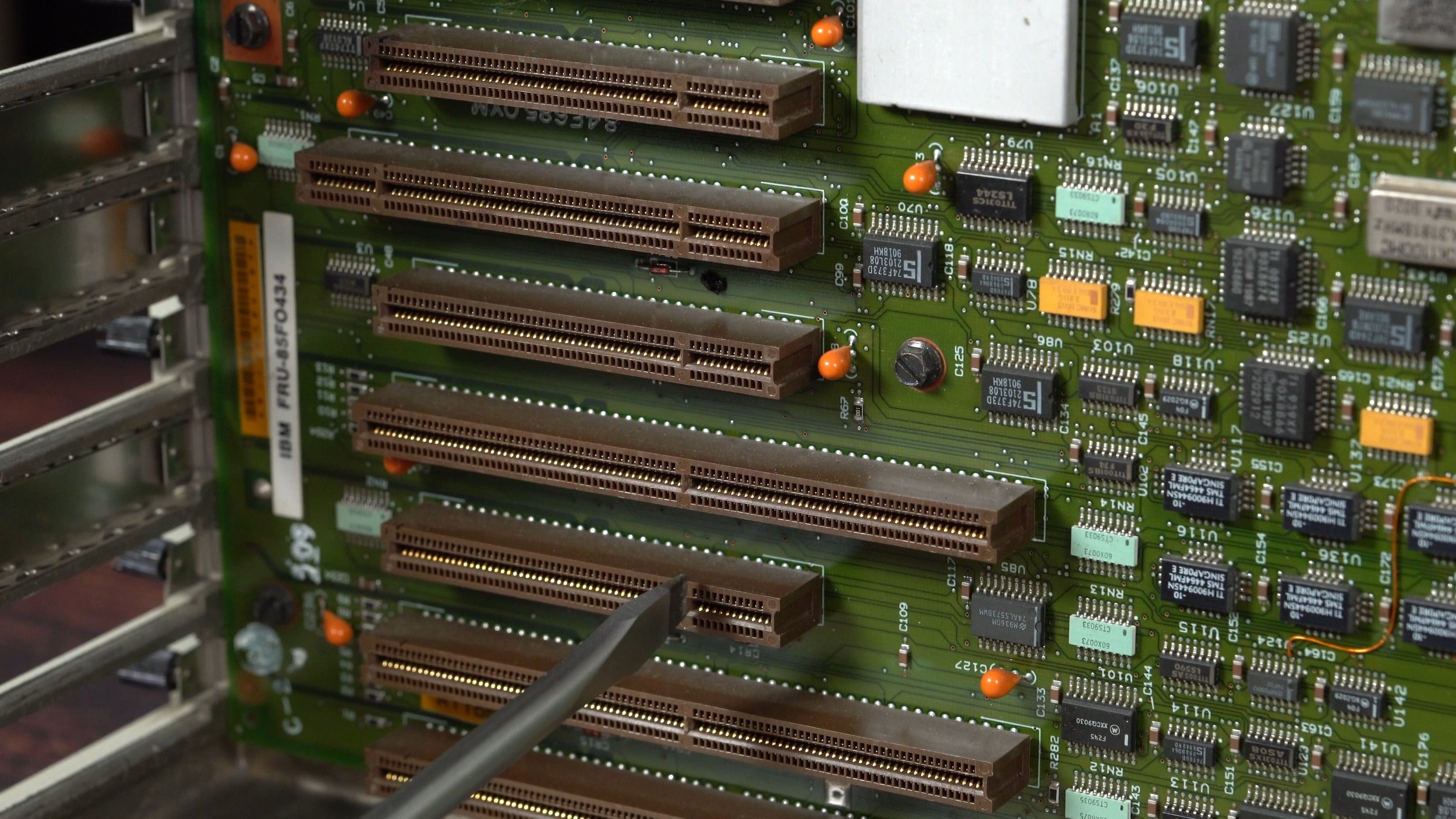

Embracing a theme is another way to distinguish your table. It’s all IBM all the time at Sam Mijal’s corner, with multiple PS/2s and ATs for the discerning Big Blue buyer. A bushel of compact Macs was ready for picking at 68K Rescue, with a stack of Mac II desktops also looking for forever homes. Logic boards, keyboards, RAM, and other bits were available to help refurbish your adopted Mac. MACNician offered refurbished PowerPC and Intel Macs along with edutainment software and teaching materials. But the biggest beige bounty was at Corbin Johnson’s corner table, where piles of Performas, LCs, and Quadras were available for reasonable prices. Most worked, but if you wanted to save a few bucks and also give yourself a project for a rainy afternoon, you could pick one that almost worked. And why not buy a NuBus network card while you’re there? Amiga fans were coveting these A3000 and A4000 setups at Peter K Shireman’s booth. Boxed Amiga software, rare peripherals, and documentation were just some of the odds and ends he brought, along with this very beige Amiga jacket.

FreeGeek Chicago is back with their ongoing mission to connect people with affordable technology, and they brought some of their inventory of refurbished PCs and parts to sell. If you missed them at the show, they recently opened a new retail store. They also accept donations and volunteers to help restore old machines and get them into the hands of people who need them.

Dozens of makers and creators attended this year hoping to get products they built or designed into your hands. Want to play around with an Altair but don’t want to pay obscene prices for a real one? Adwater and Stir’s Altair-Duino project lets you build an affordable replica of the famous MITS microcomputer. Add a bit of color to your classic computer with a light-up keyboard from Long Island’s American RetroShop, who made their way to Midwest for the first time. The Retro Chip Test Pro was also available to help you troubleshoot RAM, ROM, and more.

Custom cards and adapters for Apple, Commodore, and Atari were available at 8bitdevices, like these Apple II video cards for connecting modern monitors. New from MacEffects this year is their wonderful iMac inner bezel replacement, which can rescue iMacs from cracked and shattered CRT bezels. It joins their regular lineup of clear and colorful cases for Macs and Apple IIs. Also on sale were upgrades and expansions to make your Mac as colorful on the inside as the outside. Next door was 8bittees selling a wardrobe of pixel shirts to upgrade your style along with useful items like Apple IIc USB power supply adapters. CayMac Vintage returns with TechStep clones, PDS boards, and enough ROM SIMMs to make every SE/30 at the show 32-bit clean. The ever-popular BlueSCSI hard drive emulators were flying off the shelves at Joe’s Computer Museum. I bought four! Have an old broken iMac that you want to upcycle into something useful? Juicy Crumb Systems’ DockLite can breathe new life into old all-in-ones by converting them to external monitors. Same goes for Sapient Technologies’ recreation Lisa boards, which can revive temperamental Apple Lisas.

Commodore users were well served by folks looking to sell modern solutions for old systems. RetroInnovations brought not one, but two SX-64s to round out their demo display of various Commodore products for sale. After buying a C64, C128, or Amiga, be sure to visit Kevin Ottum and Jim Peters to pick up a NuBrick power supply and save yourself from the danger of those old potted bricks. You’ll want a disk drive too, and over at BitBinders these modern recreations of 1581 disk drives can finally give you the 3.5 inch drive you always wanted for your Commodore. There are even dual-drive mechanisms for those who need to copy a lot of floppies. And don’t forget to pick up a joystick at Tech Dungeon to complete your new system.

One of my favorite products from last year is back: Scott Swaze and the Wifi Retromodem. Hayes versions are still available, but he also showed off a prototype version for US Robotics Sportsters! Sportsters are more plentiful than Hayes modems, so it’s more affordable than ever to make old modems useful again. Friend of the show Ian Scott’s PicoGUS sound card practically sells itself with an excellent display of its audio capabilities. It keeps getting better all the time: the latest update enables simultaneous Sound Blaster and MPU-401 output!

I’m also a fan of tables that don’t fit the traditional mold of VCF vendor. Inverse Phase is back with more record albums, and now they’re promoting the Bloop Museum. Several artists also came to the expo hall this year to test the market for retro-themed art. Posters, postcards, buttons, and stickers featuring paintings of classic computers and consoles were all over Melissa Baron’s booth. As a fellow professional con artist I approve of this setup with fully-stocked shelves, clear pricing, and lovely watercolors. For those looking for something more cartoony, Your Sinclair was selling manga-style characters based on classic computers and operating systems. And the retro lithoplates at Lige Hensley’s booth project a color image onto a relief to create a cool three-dimensional scene from your favorite games.

Throughout the show a seemingly inexhaustible mob of buyers swarmed tables to tell vendors to shut up and take their money. Bigger tables and more space at each booth meant sellers could bring more merch to sell. Even with more inventory some tables were picked clean halfway through the weekend. Most vendors managed to keep things contained, but I did notice some booths with merch spread out on the floor. Usually floor items were tucked under a table, but in rare cases I noticed boxes strewn in front of booths. This happened at the corners of the C-shaped islands, so the boxes weren’t blocking traffic in the aisles. But a few piles were fairly large, like Dan Arbaugh’s cornucopia of boxes from 25 years of collecting rare and interesting Apple documentation, software, and accessories. Dan, thank you for remembering to keep space clear so that people could visit adjacent exhibits. I hope all your materials found good homes. Like the awnings in the parking lot last year, one or two instances isn’t a big problem. But the show might need to tweak its surveys about the size of collections for sale to make sure people are placed optimally in the hall and that vendors request the right number of tables.

From a reviewer’s standpoint there’s a lot to like about this vibrant variety of vendors. And when talking to the people behind the tables I heard few complaints about their experience at the show. Yes, it was exhausting and exhilarating, especially on a busy Saturday. Granted, there were sometimes issues with cell reception when processing cards or Venmo. The most common request was for stricter vetting of people in the hall during setup—just like last year there were non-exhibitors floating around before opening hours. I don’t think people are worried about shoplifters; they just don’t want excited early birds interrupting their setup time. Personally I’d like to see the more flea market-type sellers grouped together, but given the complexities of arranging this space I think placements were balanced overall.

Anchoring all this was VCF central command, where the show accepted donations of both cash and merchandise, the latter sold in the garage sale to replenish its coffers. Volunteers were busy all weekend sorting through contributions, selling shirts, and helping attendees. It was hard to keep tabs on items moving through the garage sale, but I’d say the array was on par with previous years. I think the only thing it needs is bigger signs or banners, because at first glance it blends in with the nearby vendors. Still, if the shrinking pile of stuff behind the counter was any indicator, this was a very successful fundraiser.

After picking over the garage sale you could pick up official VCF merch, like these delightfully ‘70s-style T-shirts and tote bags. I’m told the design’s an homage to Illinois Bell Phone Center stores, which is obviously lost on a New Englander like me. But you don’t have to be a local to appreciate good typography. I also appreciate that it was offered in a rich shade of malaise-era chocolate brown. But by the time the lines died down and I got a chance to shop I had missed out on medium-sized browns. Black isn’t quite as fun, but it's better than no shirt at all.

And lastly, the renowned Free Pile settled into its new home at the northeast corner of the hall. With more tables than last year it was easier to donate and browse items without fighting the crowd. Getting a subjective feel of items up for grabs was tough, given that I didn't stay by the pile the whole time. As I see it, this year’s ratio of junk to treasure leaned slightly towards junk, but who am I to call something junk if it’s useful to somebody else? Stuff moved so quickly that I saw completely different things every time I walked by on Saturday, which is a good sign in my book. I contributed some Zip drives—one working, and one for parts. Sunday was slower, with some of the undesirable stuff hanging around longer before finally being picked up. It was a better experience all-around than last year, even if I didn’t personally take anything. Kudos both to the staff for the improvements and to the community for their spirit of generosity.

People, Panels, and Events

Though exhibits and sales tend to take up most of the oxygen around VCF events these days, the truth is that these are social events meant for us to share our love for the obscure and obsolete. The VCF website promised a fun-filled weekend of panels, roundtable discussions, LAN events, and DIY building. And with a new venue comes the potential for bigger and better versions of the events we all know and love.

The LAN parties—that’s right, plural—were a great example. Last year’s LAN was a cozy little diversion tucked away in the Clarion Inn connector. This year it was upgraded to a major attraction in the expo hall with twenty stations available for pick-up PC gaming. And if that wasn’t enough, a nearby mini-party powered by the LANCommander digital distribution system offered more multiplayer madness. I think there’s enough potential for future LANs to expand into one of the venue’s many conference rooms, should budget and logistics allow. For now having them on the expo hall floor makes it easy to draw players in from the crowd. And when they were done gaming they could hop over to the DIY build area anchored by Build-a-Blinkie. Its footprint has grown significantly to allow more people to try soldering for the first time. With over forty workstations and an expanded variety of ready-to-build kits anyone could learn the basics of building their own electronics in a safe and supervised environment.

New to the show this year was an arcade and pinball zone brought by AVS Home Arcade. One might say, “Hey, these guys are vendors!” and I’m sure they made a few four-figure business deals. But in my experience you’re not getting any kind of arcade or pinball machines at shows like these without a company or a local museum that’s willing to loan some inventory. These aren’t vintage machines; rather, they’re modern recreations or multi-systems targeted towards the well-heeled enthusiast. But it’s still within the spirit of the show to let people get hands-on with some retro arcade action. If I had money to blow I’d be very tempted to buy this recreation of one of the creepiest, kookiest tables of all time: The Addams Family.

Once you were satisfied with your soldering and finished with fragging, you could pop in on a panel at the Schaumburg west conference rooms. The setup for this year’s talks was similar to prior events: a single main conference hall hosting all the panels, with subject matter ranging from extremely specific presentations to free-form roundtable discussions. The closest thing to a keynote at Midwest is the annual YouTube personality roundtable. Hosted as always by the genial and gregarious Jim Leonard, this year’s iteration featured a star-studded lineup of retro computing personalities with nearly two and a half million subscribers (2,494,930, to be exact) between them. A standing-room only crowd hung on their every word as Jim peppered the panel with questions about their favorite platforms and the state of the retro community.

A new event which I sadly missed was the first VCF Midwest concert. Sean from Action Retro, Taylor & Amy from their self-named show, and Veronica from Veronica Explains combined their musical talents to form The Stop Bits for a truly outrageous show. I don’t believe it was recorded, but when I asked people who saw it, they said they had a great time. Musical acts aren’t unusual at conventions, and since it went well I’m betting we’ll see more music at VCF in the future.

Most of the panels were presentations hosted by passionate members of the community. Peter Shireman got a little meta with his presentation about creating digital slideshows with Amigas in the early ’90s. Chris Skeeles of Boardsort.com gave hints on ingratiating yourself with local e-waste facilities, essential listening for anyone looking to save retro treasure from the scrappers. For the game historians, Ken van Mersbergen took a look at Nexa Corporation and the games it published before merging with Spectrum HoloByte. The annual Mac collecting panel hosted by Ron and Steve concentrated on the clone era, one of my favorite periods of Apple history.

Many panels focused on creating hardware and software. Veteran hardware designer Jeffrey Wilson shared forty years of lessons learned while designing circuit boards and FPGAs. The Commander X16 update by the 8-Bit Guy and TexElec showed off new audiovisual demos, games, and software for their modern retro computer. A deep dive by Daniel Baslow into the Area 5150 demo shows how skilled hackers squeezed every bit of power out of CGA video and the 8088 CPU. Old MS-DOS fractal generators get a second life thanks to Richard Thomson’s open source efforts. And a roundtable about designing new hardware and software for vintage machines brought some of the finest makers and creators together on stage for the first time. Useful products don’t just appear from thin air, and the group shared their setbacks and successes in a frank discussion about home-brew product development.

Creating this schedule couldn’t have been easy. Over three times as many panels were submitted as were selected, and some hard calls had to be made when choosing the candidates. And while the venue does have enough space for a second panel track, the show likely couldn’t afford it this year from a financial, technical, and volunteer standpoint. That could change in the future, but for this year I think the staff made reasonable trade-offs in terms of scheduling the events.

From my attendee’s perspective the panels seemed to run like clockwork. There were no hiccups like last year’s technical difficulties that obliterated footage from the YouTube roundtable. It was only thanks to Veronica from Veronica Explains filming from the audience that there was a full version of 2023’s roundtable! You can’t always count on that kind of luck, so the show took extra steps to prevent it from happening again. The only real flaw was the panel room’s inability to accommodate the crowd at the biggest events. I’m sure the show wanted more space, but their hands were tied due to sharing the venue. The wedding booked the majority of the Schaumburg Ballroom’s floor space, leaving one quarter slice for VCF. Only 203 seats fit in the room, roughly a fifteen percent increase over last year’s 175-ish. It was enough space for the majority of panels, but not for the auction or the YouTube roundtable. Hopefully there’s an opportunity next year for a larger seating area, logistics and finances permitting.

Speaking of the auction, VCF Midwest wouldn’t be complete without its fabulous fundraiser. Jason Timmons returned to the stage in full suit and tie to resume his role as auctioneer in an epic battle of the bids. The pace of last year’s auction suffered from having all the items upstairs in the Waterford lobby while all the participants were downstairs in the panel room. Bringing the items down just wasn't feasible, so VCF set up a remote feed for the audience. No such hack was needed this year since shelves of items could be rolled in from the expo hall. Jason kept things going nice and smooth, calling out bids and moving items along. Early Apple II logic boards, Motorola core memory, interesting old PCs, and weirdly cool printers were all vying for your generous monetary donation. There was even what looked like an old payphone saved from the scrappers.

Though the auction itself went off without a hitch, I noticed that a list of auction items was missing from the website. In past years VCF staff updated the listing page during the show to give you an idea of what was up for bids. Either a technical or logistical problem prevented these updates, so unless you checked out the racks in the expo hall you’d have no idea what was in store. But thanks to an advance posting on VCF’s Facebook page I knew of one very interesting entry: an IBM ThinkPad 701. That’s right: a butterfly keyboard laptop. They don’t show up locally very often and even parts machines tend to go for hundreds of dollars on eBay. But I had room in my budget for one unreasonable purchase, and I knew that hot butterfly had to be it.

Said budget was in the range of $300 to $400, but I wasn’t confident that would be enough. A rare item like this could gavel for $500 or more. When it came up for bids it looked to be in decent physical shape and the keyboard mechanism unfolded perfectly. But there was no power supply, so nobody had tested it to see if it turned on. Just before bidding an audience member asked a crucial question: what kind of screen did it have? The stage manager checked the bezel and announced model number 701C… s. That pesky s drew a chorus of groans from the crowd: unlike the 701C-no-s and its nice active matrix screen, the 701C-with-s has an inferior passive matrix screen! Oh well. Something something, beggars choosers. Still, the keyboard was the star, and if it worked (and that’s a pretty big if), the winner would walk away with one of the most collectible ThinkPads.

Bidding started at $100 and entries were coming in hot and fast as the price climbed over $200. Bidders dropped one by one as the price reached $300 until it was down to me and one other person. At $360 it went once… twice… sold, to the guy with the beard and the t-shirt advertising his YouTube channel! And it was still within my budget, too. I picked it up from VCF central command and immediately played with my new toy. Keyboard goes out, keyboard goes in! Keyboard goes out, keyboard goes in! But enough about the trick keyboard—does it actually work?

After watching the last few panels Mark and I headed back to his place to test the Butterfly. After removing the corroded battery and plugging in a spare ThinkPad power supply we held our breaths and pressed the power switch. The laptop powered on! Yes, it powered on with a ‘missing operating system’ error, but that's not nothing! To quote one of the great luminaries of our time: it freakin’ works! After we restored the IBM factory hard drive image it booted right into IBM’s dual-OS selector for Windows and OS/2. We loved the introductory video of a besuited IBM flack welcoming us to the ThinkPad family and how a ThinkPad embodies IBM’s “spirit of excellence.” And that passive matrix screen? It’s not so bad after all. A magical folding keyboard makes up for a lot, and I have a hunch it’ll show up in a future video.

The Winds of Change

I have to tip my cap to the VCF Midwest staff. In last year’s review I speculated on ways they could work around the Waterford’s limitations. While I thought a new venue was necessary, I didn’t think moving was in their plans. Well, they proved me wrong by making the tough call to spend the money and effort to relocate to a larger venue. And it worked out! Their new home filed off many of the rough edges, and the result is a better, less chaotic VCF Midwest. Losing some of that chaos isn’t a bad thing per se: there comes a point in every show’s life when it’s forced to decide whether it’s a glorified meetup or a capital-C Convention. VCF Midwest isn’t a scrappy little get-together anymore. It’s a big show now, and it’s acting like it.

I’m sure some people are grumpy about the loss of intimacy, or how it’s impossible to get a table or panel. And they’re not necessarily wrong, but those are gripes about any growing convention. Now the show’s ongoing concern will be sustaining itself from a financial and logistical standpoint. This is the case for all conventions, of course, but for VCF it’s more important than ever, since the Ren is more expensive but attendance is still free. I understand the desire to keep admission free as in beer because the staff aren’t in this for profit. But if I were treasurer I’d be exploring additional revenue streams to help finance expansions like a second panel track. Sponsor packages aimed at individual attendees might motivate some people to give more to the show. Or it could be a boondoggle that costs more than it brings in. But given how the staff handled the venue change, I think they’d be able to figure it out.

Something else that needs to evolve is my coverage of the show. One benefit of the new venue solving a bunch of problems is that I could spend more time talking about the actual content on the show floor. And the downside is that there’s so much more to see that I struggled with how to present it all. It’s tough writing blurbs without devolving into “here’s this table, and there’s that table.” I care about presenting the show honestly, and it’s daunting to weave a satisfying narrative when you’re staring down over five hours of recorded footage. I have a feeling I might split next year’s coverage into two videos: one for an overall review, and another one for in-depth coverage of all the tables. We’ll see what happens next year.

And for all I’ve said about venues they’re just a means to gather the amazing community that shows up to put on fantastic exhibits, find old machines new homes, and talk about their particular passions. The Midwest staff knows this and when the size of the Waterford became a barrier to that goal they moved to new digs. We don't go to a show to see the venue, we go to see the show! Vintage and retro computer fandom is powered by a sometimes irrational desire to keep ancient and forgotten tech alive. It’s infectious, especially up close when people get to try the hardware and software of their dreams. I always encourage viewers like you to check out vintage computing events, especially local ones. And if you decide to make the big trip out to VCF Midwest next year, it’ll be ready for you.