The Mac Studio Report: X Marks the Mac

Note: this is an edited transcript of a live podcast.

Welcome back to another off-the-cuff edition of Userlandia. Off the cuff, because we just had an Apple event today. I didn't do one of these when we had the MacBook Pro announcement, because I knew I was going to buy one and write a massive review about it. But I'm not going to buy the new Mac Studio, so I'm not going to do a big, giant review of it. I think it's better for me to get some thoughts about it out of the way now. It's late evening here in the lovely Northeast as I record this, so it's been some time since the announcement and I’ve been able to ruminate about various things.

RIP the 27 inch iMac

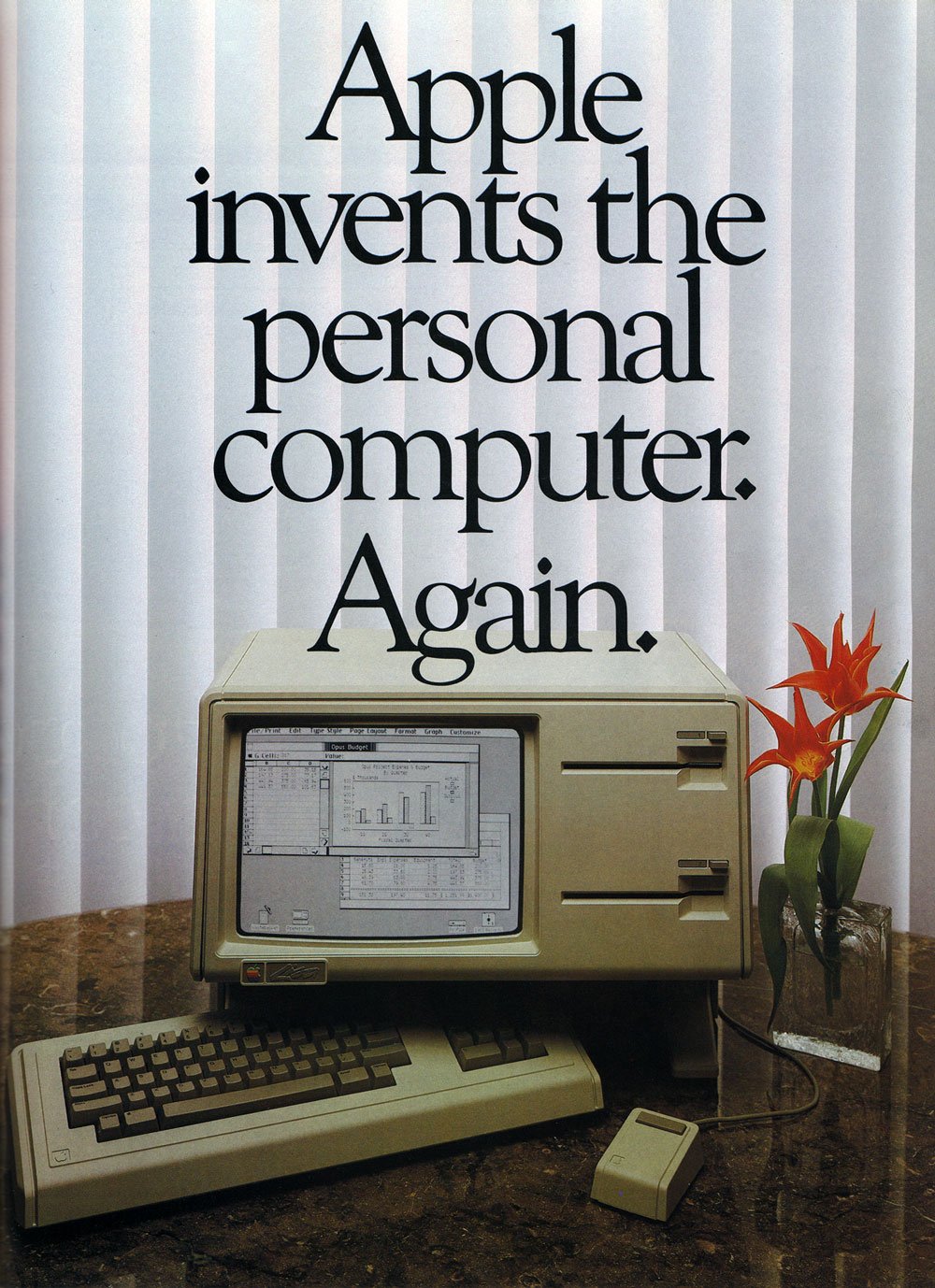

Today’s announcement was Apple unveiling the new Mac Studio and Studio Display. Now before I get started, I’d like to give a little honor to the 27 inch iMac. I’ve got a bottle of Worcester’s own Polar seltzer, and I’m gonna pour some of this blueberry lemon out in tribute. The 27 inch iMac’s been around for quite a while. Starting at 1440p and going all the way up to 5K, it had beautiful screens attached to a decent enough computer. But with the announcement of the Mac Studio, it vanished from Apple's website. The 27 inch iMac is no more. In its place is the Mac Studio, the Mac that everybody thinks they want: a new headless Mac that will forever separate the iMac’s beautiful screen from the computery guts within.

And, you know, I liked the 27 inch iMac. It was a perfectly fine machine for what it was, and it was usually a really good value. It had a really nice screen attached to a usually decent enough computer, but it was never a barn burner because it was compromised by the thermal constraints of the display. Plus, over the years Apple made the sides thinner and thinner and the back a little more bulbous, which didn’t help thermal performance. The results were iMacs with really loud fans and CPUs that would throttle after a while. And it took the iMac Pro to balance that out by completely redesigning the internal heat removal system. With the Mac Studio, Apple has basically done two things: they've made an iMac without a computer, which is what the new Studio Display is, and they've made an iMac without the display, which is the new Mac Studio.

It's serving that same sort of high-end iMac user who doesn't necessarily need expansion capabilities. For some users that's a benefit, since they don't want to throw away “a perfectly good monitor” when they upgrade their computer. Other folks liked the value they got when they bought a 27 inch iMac, and they just sold the old one to recoup some of the cost. I think it's six of one, half a dozen of the other when it comes to that. But with the way Apple is moving forward with Apple Silicon and other things, along with people requesting nicer screens to go along with other Macs, it's hard not to look at the 27 inch iMac and say “well, so long, and thanks for all the fish.”

Does that mean that the large iMac form factor is dead for good? I don't know. I personally think an iMac Pro, such as it is, would probably be welcomed by some people, but maybe they're going to hold out until we get whatever 30 inch XDR model is coming down the pike. Who knows. But for the time being, if you are a 27 inch iMac owner, you're either going to be buying a Mac Mini and the 27 inch display, or you're going to be buying the Mac Studio and the 27 inch display. Whether that works for you or not, I guess we'll find out once the reviews and everything else come in.

The Mac Studio Design

Why don't we start with the Mac Studio’s design. Those renders had come out a few days before, and while they didn't look exactly like the finished model, they pretty much predicted what we got. We’ve got a slightly taller Mac Mini with better cooling. It has ports and an SD card slot on the front, which addresses a complaint people had about the Mini: you always had to reach behind it to plug stuff in or pop in an SD card. There were similar complaints about the same port arrangement on various iMacs over the years. Why couldn't they put it on the side, we asked? Well, now you don't have to go around the back to plug stuff in. I’m all for that; it's nice to have front panel ports. Practicality seems to be the name of the Mac Studio’s game. There are USB-A ports. There's 10 gigabit Ethernet. There are four Thunderbolt ports on the back, which is perfect for monitors. And while you’ll need dongles for the front USB-C ports, that's becoming less of an issue as time goes on. So I think people will be pretty happy with the ports.

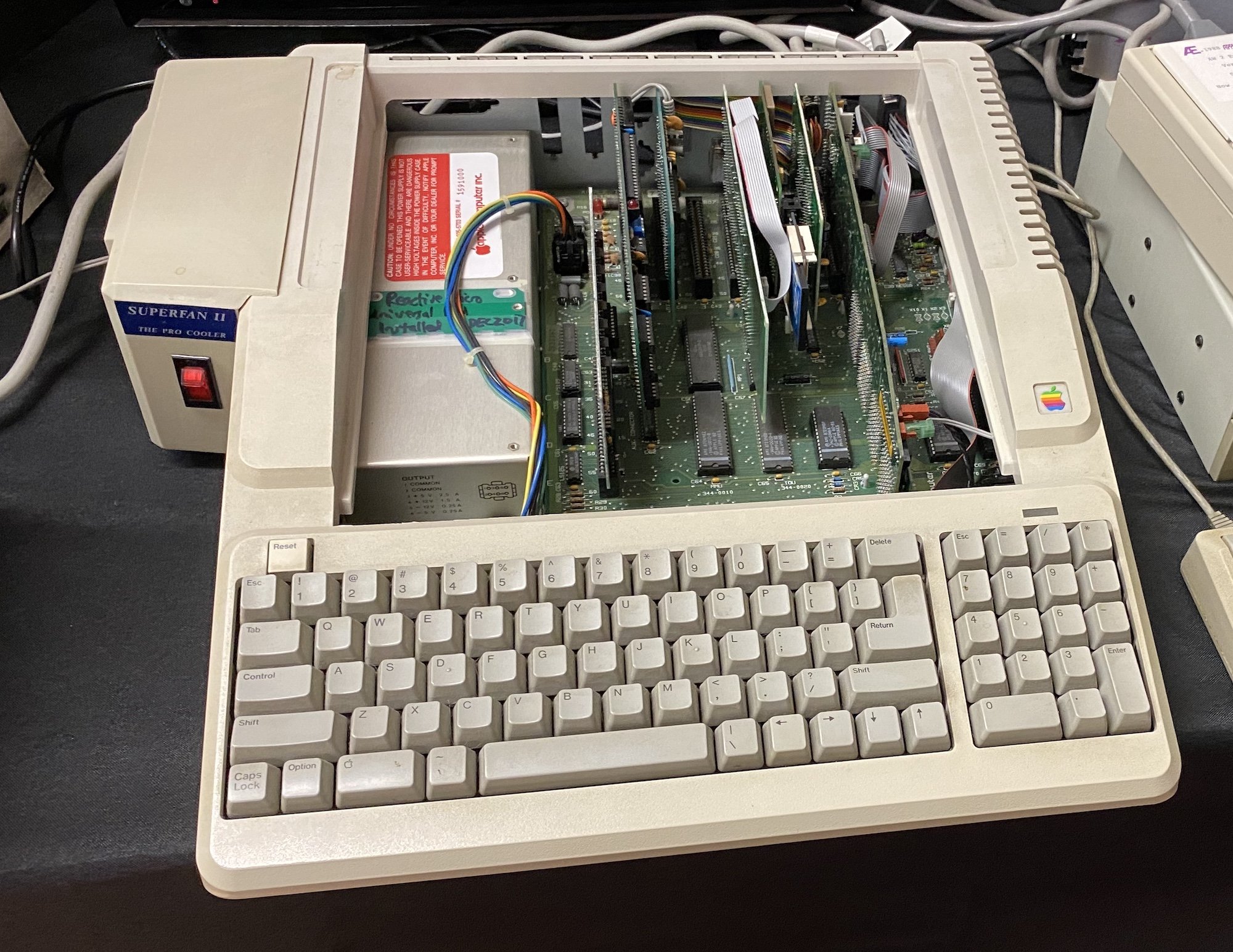

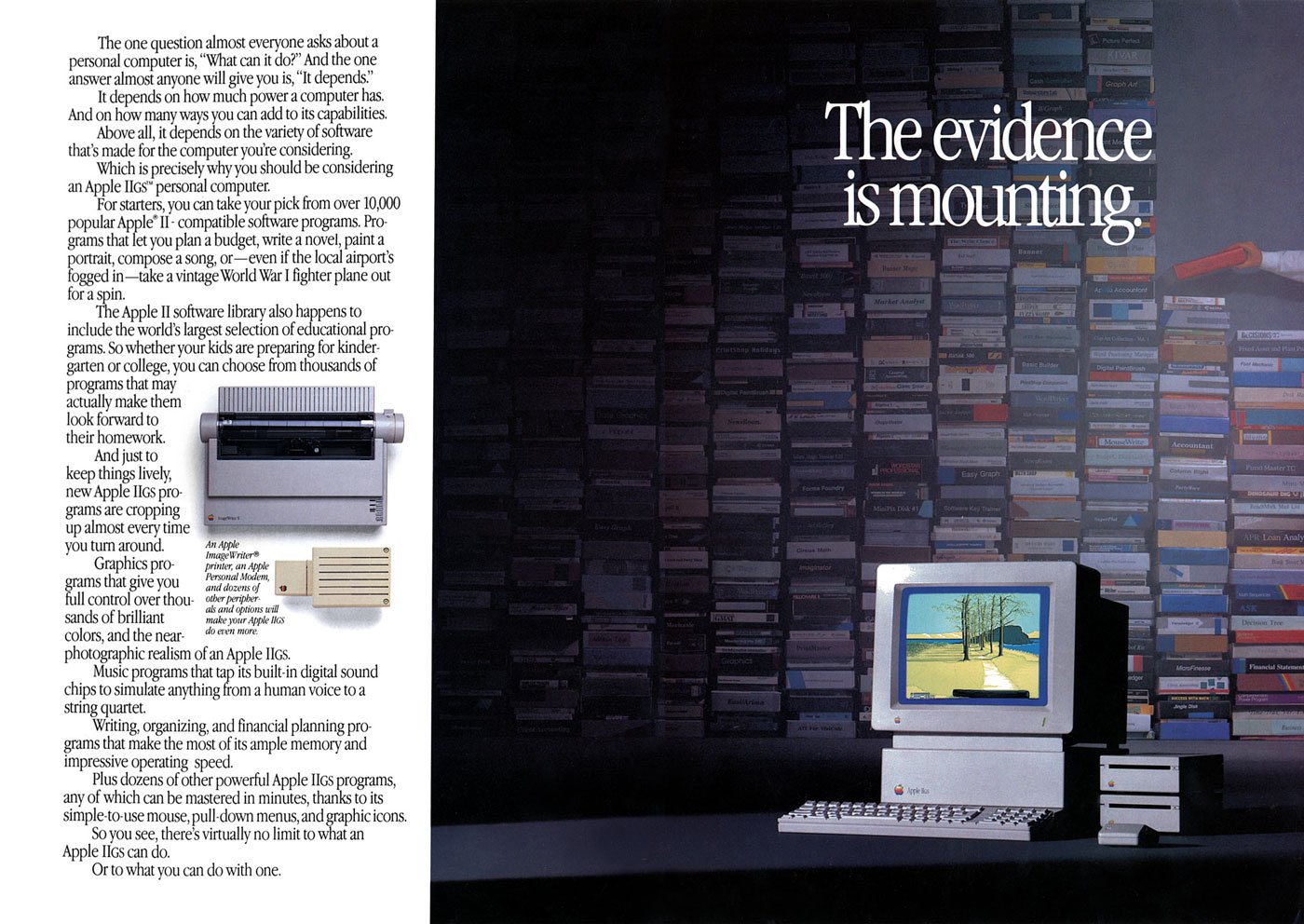

Of course, in the run-up to this, everybody was asking, “oh, will this finally be the mythical xMac?” If we want mid-to-high-end performance without having to buy a big iMac, is this finally it? Some grognards, probably including myself, will come along and say “it can't be an xMac without slots.” Well… maybe I won't say that. I've always been of the opinion that the xMac was supposed to be a headless iMac, and then scope creep came in and people kept saying, oh no, it needs to have slots and an upgradable GPU to truly be an xMac. The beautiful thing about the xMac is that it could be anything to anybody at any time. The goalposts just keep shifting and we have no idea what anybody actually means.

With the way things are going these days, with systems becoming more and more integrated (and not just on Apple's side, either), it makes sense that the Mac Studio is the machine you want to buy if you want performance and don’t need extremely specialized cards. Ultimately, the writing has been on the wall: systems on a chip, Apple's integrated GPUs, and similar strategies are the direction things are heading. Apple may do something more traditionally expandable, but that's clearly going to be in the Mac Pro realm of things. So with this machine, they're just stuffing as much power into as small a form factor as possible.

Now I'm not the only one to observe that this machine is basically the G4 Cube, except a lot more powerful, a lot quieter, and with less chance of seams running down the sides. When you look at the people using the Mac Studio in the launch video, it looks like the personal workstation that the Cube was meant to be. It doesn't have the pretense of the Cube; it's not saying, “oh, I’m an object of art.” It's well designed and it fits in with your work, but it's a machine designed to do work, not designed just to be beautiful. They've put function a bit ahead of form, especially when it comes to cooling and performance, whereas the G4 Cube had no fans at all.

This machine is much smaller than the Cube, yet it has two very large, and probably very quiet, fans. The 16 inch MacBook Pro is already quiet, so we should expect similar acoustics here. After thinking about it for a while, I realized that the Mac Studio is functionally the 2013 Mac Pro reborn. I prefer calling that one the Darth Mac instead of the trash can Mac, because I think the concept of that Mac was fine. It was a machine engineered around external expansion, geared towards heavy GPU compute, with a pretty powerful processor inside. The difference here, of course, is that you can't replace the processor, you can't replace the video cards, and you certainly can't put more RAM or NVMe SSDs into it either. But if you put the two next to each other? You can say, yeah, this is that cylinder Mac Pro at a more affordable price point.

If you look at the Darth Mac, it was introduced at $2,999. The Mac Studio starts at $1999, which is $1000 cheaper, with a heck of a lot more performance under the hood. And the M1 Ultra configs are competitive with the old dual GPU options. Of course, the downside is you probably can't shove as much RAM into it, but I don't have the cylinder Mac Pro’s specs in front of me to confirm that. If you don’t need PCI Express cards, you could swap out your fleet of cylinder Pros for Mac Studios using just a few Thunderbolt adapters. Unlike the cylinder’s trick thermal tunnel design, the Studio is a Mini that's been beefed up in terms of cooling. It’s designed to cool a specific amount of heat, but that’s OK because there's clearly going to be room in the market for a model above this. And I think if Apple had kept a machine with slots alongside the cylinder Mac Pro, the cylinder would have been a lot better received. Thankfully, Apple addressed that at the end of the event when they said “oh yeah, by the way, we know about the Mac Pro, and we'll come back to that another day.”

So that's just giving people permission not to freak out and go “aaah, slots are going away again!” But with the 27 inch iMac dead, and this machine here, there is a very big gap in power between the M1 Mini and the Studio. I genuinely thought we would have had an M1 Pro machine to start the lineup at $1299 or even $1499. Plenty of people are saying “okay, the M1 Mini is not enough. I need more monitors. I need more RAM, but I don't need a gigantic GPU or anything like that.” I think Apple is missing a trick by not having something there. On the flip side, the M2 Mini may solve that problem for us. It wouldn’t surprise me if the M2 Mini supported 32 gigs of RAM and gained an extra monitor connection to support up to three monitors. That’s what those lower-end 27 inch iMac customers are asking for. So if we get an M2 Mini in the summer or fall and it includes all those things, then I guess those customers will just have to wait a couple of months.

Chips and Bits: the M1 Ultra

I understand why Apple started with the M1 Max and the M1 Ultra, because that high-end market has been waiting. They're going to go and spend all the money they’ve been saving. Users with big tower Mac Pros will probably be okay waiting for another six months to hear whatever announcement Apple is going to make about the Mac Pro. Though the entry point is the Max, the Studio was designed around the Ultra. The M1 Ultra model is a little heavier because they've put a beefier heat sink into it. It’s hopefully designed to run at that 200-ish watts of full-blast power all day long.

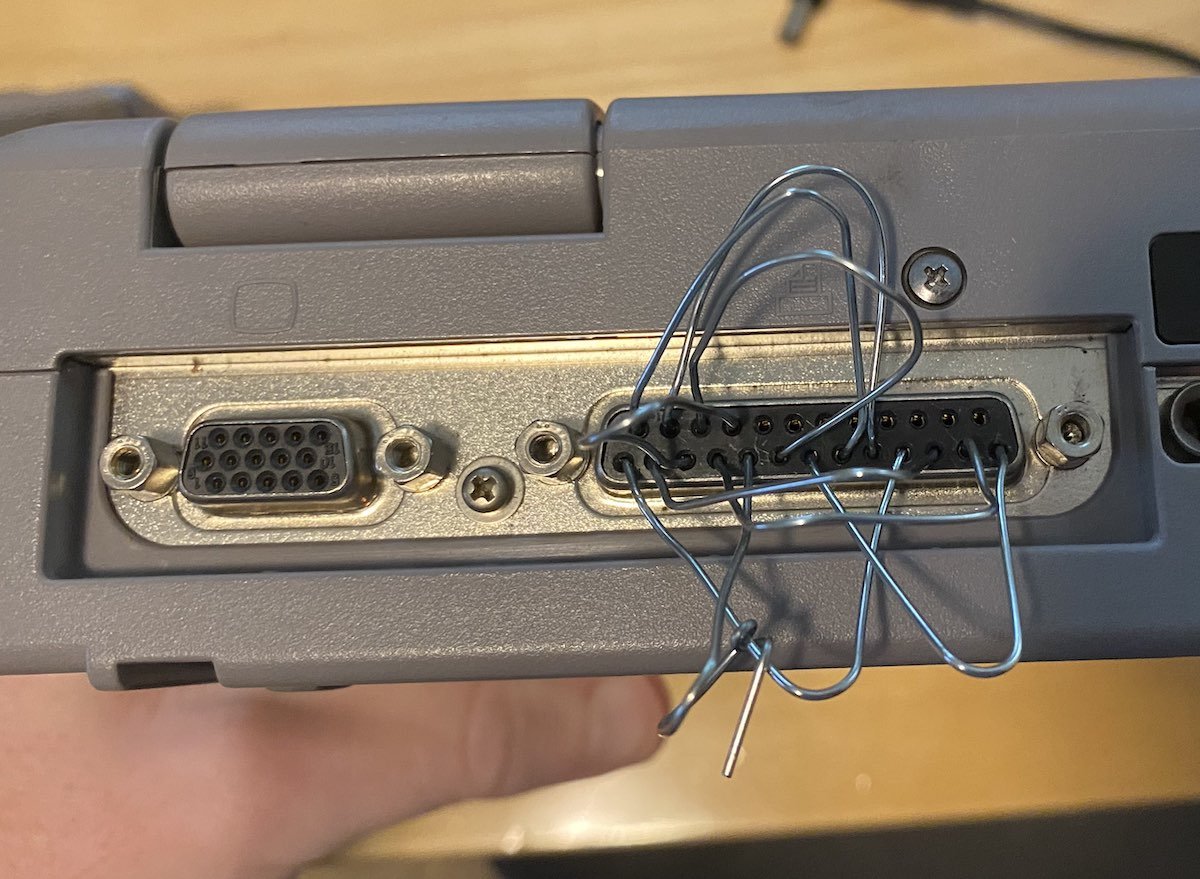

We knew about the Ultra because the rumors talked about leaked duo and quad versions of the M1 Max, and what we got in the Ultra is the duo. It's put together not with chiplets like AMD's, but with an interposer. We've seen TSMC's interposer technology come up here and there, and people at first thought Apple would use it for HBM memory. Instead, Apple kept doing its standard on-package memory construction while using the interposer to connect the two processor dies together. That interconnect means we don't have to worry as much about latency between two chiplets. There are benefits to AMD’s method, namely that if one chiplet is good and one chiplet is bad, it's easier to manage yields. Whereas with the interposer, I’m pretty sure both dies have to be good to make a valid processor. Whether Apple will ship M1 Ultras with one whole block disabled remains to be seen; we’ll have to see how they manage yields on it.

Another question with this method is whether performance will scale linearly. If Apple keeps throwing more cores and more memory channels at problems, especially where the GPU is concerned, will that let Apple compete with an RTX 3080 or 3090? The graph comparison they showed was against a 3090, which is very ambitious. As we saw with M1 Max benchmarks, performance started running into limitations as more cores were added. Some of that is due to software optimization, of course. But still, if they manage to treat all the cores as one monolithic unit and the GPU manages to get access to all 800 gigabytes per second of memory bandwidth… what a number, right? That’s crazy.

I don't think Apple necessarily needs to beat the 3090, as long as they can trade blows and come up just short in other benchmarks. The fact that they can do as well as they are and still have access to all of the memory is pretty good. If you've got a workload that needs more than 24 gigs of VRAM, this might be the machine for you, I suppose. Either way, the fact that they're able to get as close as they are while using less power is impressive. I have a 3080 Ti in my 5950X workstation. If I don't undervolt that card, it’ll bounce against its 400 watt power limit all day long. If Apple manages to get very close to 3090 performance while using, say, 120 or 150 watts of GPU power, I’d call that pretty good.

But the other thing to keep in mind when comparing to something like a 3080 or a 3090 is that this is performance people will largely be able to buy. People aren't buying Apple graphics cards to go mine Bitcoin or Ethereum; they’re buying them to do work. I suppose people could buy these machines to be miners. They would be fairly efficient, but I don't see it working from a density standpoint. I haven't done specific price breakdowns comparing a 3090 to anything else. Keep in mind that if you can manage to get one at retail, you're going to be spending $2,200 on a 3090, and that doesn't even include the computer. So if you wanted to build a 5950X plus 3090 system, you're going to be spending a lot of money to do that.

I put together a build that was a little more in line with the base price Mac Studio. If you just want to match the lower end, say with a 3070 and a 5900X, your whole system cost is going to be in the range of about $2,300 to $2,400. And you're going to put another $1,200 to $1,300 on top of that to do a 3090, which puts you very close to the cost of a Studio. So if you're thinking about doing work and you've been worried about picking up graphics cards, you can say “well, I can just go buy this whole computer here.” That won’t help you if you're just upgrading from a 2080 Ti to a 3090. Still, if you're looking at it from an “I need to buy something to do work” standpoint, that's going to be hard to argue with. Even through all the production problems everybody's been having, it's still fairly reasonable to get hold of 14 and 16 inch MacBook Pros.

And that's what these machines are: headless 16 inch MacBook Pros with additional performance options. The fact that you can just go buy them in a store is very much a mark in their favor. That said, as of this recording, GPU supply is getting better. I've noticed over just the past week that 3080s, 3080 Tis, 3070 Tis, and so on have actually been showing up on sites like Newegg, where you can just go and buy them without dealing with the Newegg Shuffle. You might have a problem trying to buy one in the afternoon or whatever, but I've been watching every day and it's been getting easier and easier to add something to your cart without getting it yanked away from you.

The downside, of course, is that prices have not yet adjusted to that reality. If you want to buy a 12GB 3080, you're going to be spending $1200 or $1300. 3080 Tis are the same deal: you're going to be spending $1,400-1,500 ballpark. 3070 Tis would be around $900. That's what you're going to be contending against. So if you're within the Apple ecosystem and you've got GPU-reliant work that can run on Metal, it's something to keep in mind if you're thinking about switching to Windows. If you run CUDA, these won’t be very helpful; you'd have to port your code over to Metal.

The Studio Display

For some people, the more interesting half of the announcement is the Studio Display, which comes in at $1599, which I believe is $300 more than the LG UltraFine 5K. If you want the stand that adjusts up and down, that's an extra 400 bucks on top. Nano-texture glass costs another 300 bucks. So you could theoretically spend $2299 on just one of these monitors. On the flip side, if you want to use a VESA mount, there's no extra charge for that. Just make sure you know what you want up front. There had been rumors that the display would have an A-series chip built into it, that it would run an OS, and on and on. I think a lot of people don't realize that monitors these days are pretty smart. They have SoCs and other things inside them. Those SoCs just tend to be smaller, cheaper ones that do image processing and drive the on-screen display.
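
To make that option math concrete, here's a small Python sketch that tallies every Studio Display order from the launch prices quoted above. The option labels are my own shorthand, and it assumes (as the text implies) that the mount and the glass are each a pick-one choice made at order time; it's a back-of-the-envelope tally, not Apple's configurator.

```python
from itertools import product

# US launch prices quoted in the transcript, in dollars.
BASE_PRICE = 1599

# Mount choice is pick-one and has to be made at order time.
MOUNT_OPTIONS = {
    "tilt-adjustable stand": 0,
    "tilt- and height-adjustable stand": 400,
    "VESA mount adapter": 0,
}

# Glass choice is also pick-one.
GLASS_OPTIONS = {
    "standard glass": 0,
    "nano-texture glass": 300,
}


def configurations():
    """Yield (mount, glass, total_price) for every mount/glass combination."""
    for (mount, mount_cost), (glass, glass_cost) in product(
        MOUNT_OPTIONS.items(), GLASS_OPTIONS.items()
    ):
        yield mount, glass, BASE_PRICE + mount_cost + glass_cost


if __name__ == "__main__":
    # Print the orders from cheapest to most expensive.
    for mount, glass, total in sorted(configurations(), key=lambda c: c[2]):
        print(f"${total:,}: {mount}, {glass}")
```

The top of that list is the $2299 figure: the height-adjustable stand plus nano-texture glass on top of the $1599 base.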

But it's clear that the A-series chip inside is more about enabling other features: things like Hey Siri, Spatial Audio, True Tone, et cetera. And there are actually decent speakers, which look very similar to the ones in the new iMac. Those iMac speakers sound good compared to the junk in the LG and Dell monitors I have lying around. The webcam looks similar to the ones we’ve seen in iPhones. Add Center Stage along with better image quality, and it embarrasses the ancient camera in the LG UltraFine. It would certainly outclass what’s in a MacBook Pro or the kind of stuff you buy on Amazon. Of course, they're showing people hooking three of these monitors up to one machine, and it’s a bit silly that you've now got three webcams. Will they allow stereo support? Can you have a stereoscopic 3D webcam? Or maybe you could say, “oh, I'll pick the webcam that has the best angle on me.” Who knows. We'll see if you can actually choose between them. There's also a USB hub built in. One of the ports should have been a USB-A port, but I’ll never stop beating that horse.

It does look nice.

It's a 5K panel that looks to be the same as, or similar to, the 5K panel we've known for a long time now. That means it doesn't run at 120 frames per second, which I know people are grumpy about. I think for this particular monitor, the trade-off is prioritizing resolution over frame rate. And with Thunderbolt’s current specs, you're not going to get, say, full 5K at 120 FPS over a 40 gigabit duplex connection. You might be able to get 5K 120 over an 80 gigabit half-duplex connection, but then you would give up items like the USB ports, the webcam, and the speakers. For some people, those are important features that matter more than frame rate.
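
Here's a rough way to sanity-check that bandwidth claim: a Python sketch of the raw, uncompressed pixel data rate for a 5120 × 2880 panel. It assumes plain RGB with no blanking intervals, no link-encoding overhead, and no Display Stream Compression, and it treats a flat 40 Gbit/s as the whole Thunderbolt budget, so real requirements are somewhat higher than what it prints. The 10-bit rows stand in for the extra bit depth HDR would need.

```python
# Back-of-the-envelope check: raw pixel data rate for a 5K panel.
# Assumptions: 3 color channels, no blanking, no encoding overhead, no DSC,
# and a flat 40 Gbit/s budget for a Thunderbolt 3/4 link.
THUNDERBOLT_GBPS = 40


def video_gbps(width: int, height: int, refresh_hz: int, bits_per_channel: int) -> float:
    """Uncompressed RGB video data rate in gigabits per second."""
    bits_per_pixel = 3 * bits_per_channel
    return width * height * refresh_hz * bits_per_pixel / 1e9


if __name__ == "__main__":
    for refresh_hz in (60, 120):
        for bpc in (8, 10):
            rate = video_gbps(5120, 2880, refresh_hz, bpc)
            verdict = "fits within" if rate <= THUNDERBOLT_GBPS else "exceeds"
            print(f"5K @ {refresh_hz} Hz, {bpc}-bit: {rate:.1f} Gbit/s, "
                  f"{verdict} {THUNDERBOLT_GBPS} Gbit/s")
```

By this crude measure, 5K at 60 Hz fits in one link with room left over even at 10-bit (about 26.5 Gbit/s), while 5K at 120 Hz overshoots 40 Gbit/s even at 8-bit (about 42.5 Gbit/s) before any overhead is counted.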

I still think Apple should introduce a 4.5K external monitor. I get why they're not: it's very hard to compete with the $400-ish 4K productivity monitors that are out there. But I do think a smaller 4K XDR monitor would make sense. That’s for someone who wants 120 FPS, mini-LED, and so on, who can say “well, I don't need as much display space, but I do want better image quality.” That would probably work. There was no mention of support for any kind of HDR, obviously, because the backlight and so on are not intended for that. If they did support HDR, that would mean even more bit depth and other data to worry about, which again encroaches on bandwidth a little bit.

I can understand why they made the trade-offs they did, and that probably won't satisfy people with different priorities. But given that the LG 34 inch ultra-wide 5K2K monitor I own generally retails for about $1299, getting the extra vertical real estate along with the other features probably justifies that $300 premium. I can’t buy one because I wouldn’t be able to use it on my PC. There's also no built-in KVM switch or support for multiple video inputs, which is disappointing.

The Right Spec

So now let's talk about what these machines mean from a value standpoint: what's the right spec? If you had to buy one of these today, what would you choose? Let's start with an easy comparison. The $1999 base model has exactly the same specs as the 14 inch M1 Max MacBook Pro. The difference is that the $1999 model has no monitor and no keyboard or trackpad, but it has more ports and 10 gigabit Ethernet built in. Compare that to the laptop, which is $2899: you're spending $900 on the monitor. You're losing some ports with the laptop, but you're probably going to get similar performance. Honestly, the Studio will probably perform better because it has better cooling and a better heat sink, and it will remain just as quiet. If this is intended to be a desk machine, you'd probably be okay with it, especially if you already own a few monitors.

Now, if you have a 27 inch iMac, that becomes more troublesome because, well, you can't use that 27 inch iMac as a monitor. My advice would be to sell that machine as soon as you can, get as much money for it as possible, and put that towards another monitor. That would probably put you in line with what a mid-range 27 inch iMac would cost. Most people I know who bought 27 inch iMacs usually paid about $2,500. You could buy the $1999 machine and a 27 inch 4K monitor, but you're going to be running it either at a scaled resolution or at 2x 1080p, and most people I know don't like 2x 1080p.

On the other hand, you've got the $2399 Studio model, which has a one terabyte SSD and the full 32-core GPU. Compare that against the $3499 16 inch MacBook Pro that you can just walk into an Apple Store and buy. That’s a considerable difference. The two machines share the same specs, except the Studio gets more ports, the 10 gig Ethernet, and everything else. You're just not getting that 16 inch screen, and that's saving you $1,100, which you can put towards another monitor. Between these two, I would spend the extra little bit of money and get the one terabyte 32 gig one. You would probably use that machine for five years, and it would more than make the money back if you were using it to do actual work.
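
The "you're paying for the screen" math in these two comparisons is just subtraction, but laying it out side by side makes the pattern easy to see. This Python sketch uses only the configuration prices quoted above; the labels are shorthand, and street prices will drift after launch.

```python
from typing import NamedTuple


class Pairing(NamedTuple):
    """A MacBook Pro and the Mac Studio treated as its desktop equivalent."""
    laptop: str
    laptop_price: int
    studio: str
    studio_price: int

    @property
    def laptop_premium(self) -> int:
        # What the laptop costs over the Studio: roughly what you pay for the
        # built-in screen (plus keyboard, trackpad, and battery) in the portable.
        return self.laptop_price - self.studio_price


# US launch prices as quoted in the transcript.
PAIRINGS = [
    Pairing("14-inch M1 Max MacBook Pro", 2899, "Mac Studio, base M1 Max", 1999),
    Pairing("16-inch M1 Max MacBook Pro, 32-core GPU, 1TB", 3499,
            "Mac Studio, M1 Max, 32-core GPU, 1TB", 2399),
]

if __name__ == "__main__":
    for p in PAIRINGS:
        print(f"{p.laptop} (${p.laptop_price:,}) vs {p.studio} "
              f"(${p.studio_price:,}): ${p.laptop_premium:,} difference")
```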

The one option box I would tick.

Of course, something that’s not reflected in that price: there’s no keyboard, mouse, or trackpad included. In that way, it’s just like the Mac Mini. So if you already have a mouse and keyboard, you're good. If you want to buy a Magic Keyboard and a Magic Trackpad, you'd better pony up 300 bucks on top of your Studio’s price to actually use your new computer. Or you could use whatever keyboard you like. If you're buying one of these machines, there's a good chance you're a mechanical keyboard nut and you have your own custom board that you've been typing on. I thought it was funny that Apple’s demo video showed people using MX Masters and other pointing devices. They know their audience, so it might make sense to just let them spend the money the way they want. If they want to spend it on an Apple accessory, great. If not, whatever. For 27 inch iMac buyers, I would say wait a little bit. If your machine is several years old, the winning move might be to sell it and put the money towards a monitor.

A Mac Studio plus a Studio Display is going to be about $3,600. You probably spent $2,500 to $3,600 on your 27 inch iMac when you kitted it out initially. It’s a tough call. You might spend more money, but you don't have to buy the Studio Display; you can use whatever monitors you want. If you go with the LG ultrawide instead, or you already have an LG UltraFine 5K, you can save at least a few hundred bucks. Otherwise, it looks like you're going to be spending some money. On the flip side, in a few years you won’t have to throw that nice monitor away or sell it along with your computer. You can just buy a new Mac Studio, plug it in, and there you go.

A PC and Mac Pro Comparison

Now, what if you compare this to a PC? With PCs, people say all sorts of things, like “I found this pre-built machine for less money.” For my part, I've been building PCs for the past 20-odd years. I went to PCPartPicker and put together an AMD 5900X with a regular 3070 (not the Ti), an 850 watt power supply, 32 gigs of RAM, and a quality one terabyte SSD on an ASUS B550 motherboard. It came to about $2,400; really more like $2,340, but very close. The reason it's so close is that GPU prices are still sky high. A prebuilt PC from someone like Dell or HP is probably still going to be in the $2,000 ballpark. You might save 200 or 300 bucks, but you’re not going to get the same performance for half the price. As far as the M1 Ultra goes, if you want to do a 5950X plus a 3090, again, it's going to be very close, especially because you're going to have to upgrade the power supply. I used a Noctua cooler, which you can get for a hundred bucks, and I use that in my own 5950X machine. But if you want a big, high-powered radiator, you’ll probably spend even more money. Putting a 3090 into this same build would add $1,300 at MSRP, and that's not including negotiating with a reseller or whatever they're calling themselves these days. So if you're looking for that kind of performance, you're not going to build a machine for half the price of the M1 Ultra, especially with the way the market is. 5950X prices have come down a little bit, and you'll save around 200 bucks, because Intel has finally gotten their act together a little bit. But if you're doing an AMD build like that, you're going to be in a similar price range.

When all is said and done, my recommendation is the $2199 1TB SSD model.

I think most people aren't GPU limited, and they care more about RAM. So this gets you 32GB of RAM and more storage, and you’re probably not going to miss those extra eight GPU cores. You’ll be happy with it on your desk, it’ll run great for five years, and you'll hopefully sell it for $600-700 when it’s all said and done. I’d avoid the M1 Ultra unless you know you need that GPU or CPU compute power, and even then, that's a real big price increase for the increased horsepower. But you're going to ask me, “Dan, my needs aren’t being met by this. What can I do?” Well, we know the Mac Pro is coming; they told us themselves at the end of the event.

That just raises further questions! Are they going to put in the rumored quad Max chip? I don’t think they’ll call it an M1, since the Ultra was pronounced the end of the line. They could call it something like X1, like Mega Man! We’ll do Mega Man X naming conventions. A problem with the M1 Ultra is Apple’s way of doing RAM on package. If you need 128 gigs of RAM, you have to get the Ultra, even if you don’t need all the cores or GPU power. That's a problem they need to solve for a Mac Pro, because it doesn't scale. If they do a quad, you could probably have 256 gigs, maybe even 512 if we get higher density LPDDR5 chips. Pro users who are used to the 1.5 terabyte maximum in the current Mac Pro will demand a better solution, and Apple's going to have to find some way to match that. I'm not sure they'll be able to do it with the on-package method. On the other hand, it’s hard to see them going back to DIMMs after touting the on-package performance. So we could end up with an Amiga situation. We could have chip RAM and fast RAM again! On-package memory devoted to the GPU, and logic board memory devoted to the CPU. But then we're right back where we were a couple of years ago, and all of Apple's unified memory architecture starts going out the window. It's a legit problem. I'm sure they're working on a solution right now.
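
The scaling problem is easy to see with a little multiplication. This Python sketch assumes each Max-class die tops out at 64 GB, which is what the 128 GB ceiling on a two-die Ultra implies, and uses a hypothetical `density_multiplier` to stand in for denser LPDDR5. The quad configurations are speculative, not announced Apple products.

```python
# Assumption: each M1 Max-class die supports at most 64 GB of on-package memory,
# as implied by the two-die M1 Ultra topping out at 128 GB.
MAX_GB_PER_DIE = 64

# The current Mac Pro's maximum memory: 1.5 TB.
CURRENT_MAC_PRO_MAX_GB = 1536


def max_on_package_gb(dies: int, density_multiplier: int = 1) -> int:
    """Maximum on-package memory for a package built from `dies` Max-class dies.

    `density_multiplier` is hypothetical: 2 models memory chips with twice the capacity.
    """
    return MAX_GB_PER_DIE * dies * density_multiplier


if __name__ == "__main__":
    scenarios = [
        ("M1 Max", 1, 1),
        ("M1 Ultra", 2, 1),
        ("hypothetical quad", 4, 1),
        ("hypothetical quad, denser memory", 4, 2),
    ]
    for name, dies, density in scenarios:
        gb = max_on_package_gb(dies, density)
        fraction = CURRENT_MAC_PRO_MAX_GB // gb
        print(f"{name}: {gb} GB, 1/{fraction} of the current Mac Pro's 1.5 TB")
```

Even the optimistic 512 GB case is only a third of what today's Mac Pro can hold, which is why on-package memory alone looks like a hard fit for that machine.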

But we'll just have to see. The other thing pro users want is PCI Express slots, and I can't see Apple making the mistake of ditching slots again. After the big mea culpa that led to the 2019 Mac Pro, they’ll have a solution for keeping PCI Express slots. They’re probably not going to put eight of them in there, and they're not going to have gigantic MPX modules. A new Mac Pro is going to have regular PCI Express slots that you can put regular interface cards into, for Pro Tools and such. The question is whether they can make the Mac Pro into something that scales from the quad up to something even more. I really think they've got the CPU and GPU power nailed; they just need the rest of the package. Apple needs to be able to offer more GPU power, more RAM, and more storage that can scale to high levels. I know they're capable of all that; the question is how they're going to execute it, and we don't really know that right now.

Speaking of monitors, the 32 inch 6K Pro Display XDR is going to be replaced. Are they going to replace it with an even bigger, meaner monitor? I can see them sacrificing the USB hub and using the unidirectional Thunderbolt mode to make a monster 8K display. I can see them doing that for Mac Pro-level machines, but the demands of even, say, 5K 120 or 6K 120 along with higher bit depth for HDR are significant. That's just a lot of pixels to push, especially if you don't use Display Stream Compression, which so far Apple has not mentioned at all for these kinds of displays. I suppose they could just punt and say “hey, you want the 8K monitor? You need to use two Thunderbolt cables.” That’s not an elegant design, though.
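
To put rough numbers on those bigger panels, here's a Python sketch that estimates how many 40 Gbit/s links an uncompressed signal would need. The resolutions are the Pro Display XDR's 6016 × 3384 and a standard 7680 × 4320 for 8K; the 8K Apple display itself is hypothetical. The sketch ignores blanking, encoding overhead, and Display Stream Compression, so treat its answers as a floor rather than a spec.

```python
import math

LINK_GBPS = 40  # nominal Thunderbolt 3/4 link rate, treated as the whole budget


def raw_video_gbps(width: int, height: int, refresh_hz: int, bits_per_channel: int) -> float:
    """Uncompressed RGB pixel data rate in Gbit/s (no blanking, no overhead)."""
    return width * height * refresh_hz * 3 * bits_per_channel / 1e9


def links_needed(width: int, height: int, refresh_hz: int, bits_per_channel: int) -> int:
    """Minimum number of links whose combined nominal rate covers the raw signal."""
    rate = raw_video_gbps(width, height, refresh_hz, bits_per_channel)
    return math.ceil(rate / LINK_GBPS)


if __name__ == "__main__":
    modes = [
        ("6K @ 60 Hz, 10-bit", 6016, 3384, 60, 10),
        ("6K @ 120 Hz, 10-bit", 6016, 3384, 120, 10),
        ("8K @ 60 Hz, 10-bit", 7680, 4320, 60, 10),
        ("8K @ 120 Hz, 10-bit", 7680, 4320, 120, 10),
    ]
    for label, w, h, hz, bpc in modes:
        print(f"{label}: {raw_video_gbps(w, h, hz, bpc):.1f} Gbit/s "
              f"-> {links_needed(w, h, hz, bpc)} link(s)")
```

By this crude measure, an 8K panel at 60 Hz with HDR-class bit depth already needs two links' worth of raw bandwidth, which is why the "two Thunderbolt cables" scenario isn't far-fetched without compression.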

Final Thoughts

So did Apple meet the expectations of the community and its customers? My gut says yes. I can imagine a lot of people are going to buy and enjoy these machines and do a lot of great work with them. I don't think they're going to satisfy everybody. Some people are already griping that, “oh, it doesn't have slots. Doesn't have replaceable this. It doesn't do that.” Sure, whatever. I have to say that I do like them bringing back the Studio name, especially for the Studio Display. Maybe we should bring back other dead names. Let’s call the Apple Silicon Mac Pro the Power Mac, eh? Ah, a man can dream.

I also don't think this machine is really going to quell any of the complaints people have about repairability. It's a logic board with everything soldered onto it, and that can be a problem for some people. Unlike with the Mac Pro, it has the same problems we have with a laptop or a Mini. The long arc of computing history has been towards integration. More and more stuff gets integrated. We don't buy sound cards anymore. Well, most of us don't, and if we need something, we're fine with external DACs. Even on the PC side, more and more stuff is getting integrated into our motherboards, like network cards. Apple is just ahead of the curve here. I have a feeling we’re going to see more of this style of integration on the PC side as well. The ATX standard is getting really long in the tooth, especially as far as thermal management goes. Whether that'll change remains to be seen.

But as the event hype dies down, we can look at this from a higher-level standpoint. In a way, the Mac Studio really is the machine that Steve and everybody else had in mind when NeXT came together. It's a personal workstation that’s very punchy, and you can do a lot of really cool things with it. It's small, it's unobtrusive, it doesn't get in your way. It does have expandability, for the most part: you can't put stuff inside of it, but you can still attach a lot to it, with plenty of Thunderbolt and USB ports. Looking at Apple's strategy over the past year, it really feels like Apple is going for the throat when it comes to the Mac. Obviously we've watched these events, with all their sparkly videos, special effects, and marketing hype. But at the end of the day, the machines' performance speaks for itself. Even just the base $1999 Studio with M1 Max is a very respectable machine for people doing a lot of content creation and 3D visualization. That’s a very accessible price point for that kind of power. It’s plug and play and you're pretty much ready to go, and I can respect that.

We’ll have to wait for reviews to get the full picture. I don't expect the entry-level Mac Studio to be that much better than the 16 inch MacBook Pro. It'll be better in some ways, but ultimately it's just a cheaper way of getting that level of power if you don't need it in a portable form. Consider a Power Mac from more than 20 years ago: a 1999 Power Mac, in terms of both its price and the year it came out. Back then, $2,000 didn't buy you a lot of Power Mac; it bought you a base, entry-level machine. This, by contrast, is not really entry-level; it's extremely powerful. But ultimately, the thing that speaks loudest is the people I know who had been holding out, and holding out, and holding out, and are finally buying something. Somebody who was nursing along a 2012 Mac Pro went out and bought one. Same with another friend who was waiting things out with a 2012 Mini. I think that says a lot about this new Mac.

For the longest time, people said “Oh, Apple can't do an xMac. Oh, they can't do a mid-range desktop because nobody will buy it.” I think the reality was that the value had to be there. And I think this is a case where, even if Apple's not going to sell 10 million of these Macs a year, they're still valuable to have in the lineup. I think they realize that sometimes you do have to make a play for market share. And as far as I can see, this is a play not just for market share in terms of raw numbers, but for saying, “we have this available, we can do this. We're not ignoring you, the customer.” You can only ignore somebody for so long before they look elsewhere. And I think we're really seeing the fruits of that big refocus meeting that was four years ago at this point.

The Mac Studio is shipping in just a couple of weeks, and we'll be seeing plenty of people writing reviews and benchmarks. As I said earlier, I’m not buying one, since I already have a machine of similar power and my desktop is a Windows machine. I have no need for a Mac desktop. So while it's not for me, I think it’ll make its target audience very happy.