Video Version of Compaq ProLinea Computers of Significant History Now Available

A video version of the Compaq ProLinea 4/33 Computers of Significant History episode is now available. I made a few edits and updates as well. Enjoy!

The slide rules, the jacquard looms, the abacus—when did you first get into collecting retro tech? We might not be going back as far as Herman Hollerith and his punchcards, but we will take a look at his great-great-grandputer. How are we gonna do it? Here in Userlandia, we’re gonna PS/2 it.

Welcome back to Computers of Significant History for another personal chapter in my personal chronology of personal computing. After examining the role of the Commodore 64 and Apple IIe in my life and yours, I’d be remiss in not addressing the big blue elephant in the room. The influence of International Business Machines could be felt everywhere before, during, and after the PC revolution. “Nobody ever got fired for buying IBM” as the old saying goes, and many people's first exposure to computers was an IBM PC being plopped onto their desk.

You might recall from a previous Computers of Significant History that a Commodore 64 was my primary home computer until October 1997 when my uncle gave me his Compaq ProLinea 486. That’s technically correct, which as you know is the best kind of correct. But that's not the whole story, because there was another computer evicted from the house that fateful October evening. Tossed out the door with the C64 was an IBM PS/2 Model 30 286 that I had acquired just six months earlier in April. As a fellow collector of old tech, you might feel bad for those computers now—but they had no feelings. And the new one was much better. Clearly I hadn't learned anything from watching The Brave Little Toaster as a kid.

That's right, my very first IBM compatible PC, the one I left out in the cold on that windy October evening, was a genuine IBM. It came with a matching color VGA monitor, a modem, a Microsoft Mouse, and a Model M meyboard-er, keyboard. With 2 MB of RAM and a 20 MB hard drive, it met the minimum requirements to run Windows 3.1, though the experience felt less like running and more like walking. Still, it could run Windows! And I so desperately wanted a machine that could run Windows, even though I couldn’t do much more than play Solitaire or type something in Windows Write.

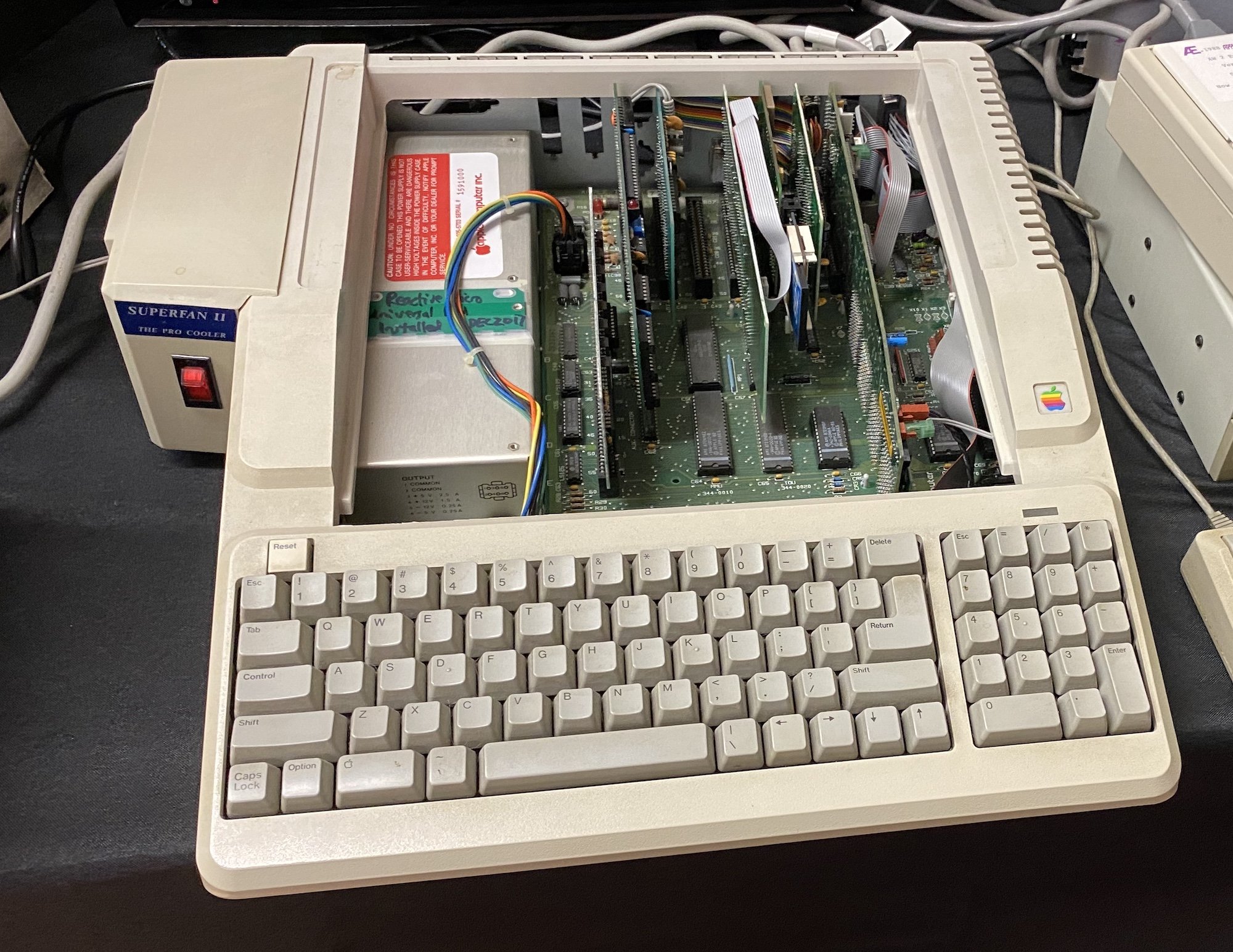

So in an effort to bring you that same experience, I found one on eBay for a sensible price. With 1MB of RAM and a 30MB hard drive, this config would’ve retailed for $1,895 in 1989 (which is about $4700 in today's dollars). It also came with a Digiboard multiport serial card which apparently connects to a semiconductor validation machine! That's for inspecting silicon wafers for defects at chip foundries. Neat! For a computer that’s apparently lived a life of industrial servitude it wasn’t terribly dirty inside or out when it arrived. And the floppy and hard drives still work, which is impressive given their reputation for quitting without notice.

If the drives were dead, replacing them would be challenging. IBM said that the PS in PS/2 stood for Personal System, but I wouldn’t be alone in saying that “Proprietary System” would be more accurate. Not Invented Here is a powerful drug, and IBM was high on its own supply. The PS/2 series is the classic work of an addict. You might be familiar with the most famous symptom: Micro Channel Architecture, IBM’s advanced expansion bus with features like plug and play (sort of) and 32-bit data widths (sometimes). But look inside a Model 30 286 and you’ll see no Micro Channel slots at all. There are three 16-bit AT bus slots, which you know better as the Industry Standard Architecture. Omitting the patented protocol had a practical purpose; the entry level models needed lower costs to maximize margins and ISA was cheaper.

But everything else inside these boxes was just as proprietary as their more expensive siblings. Need more memory? You can’t use ordinary 30 pin SIMMs; you needed IBM branded SIMMs with specific pinouts for the PS/2. Floppy drive gone bad? Its power and data connections are combined into one cable with a completely different pinout than a standard drive. The hard drive is proprietary too—it uses a special edge connector and its custom ST506 controller is unique to these low-end PS/2s. Even the power supply had to be special, with this wacky lever for the power switch and a connector that’s completely different from an AT.

Thirty years on, upgrading and repairing these PS/2s is more complicated than other PCs. PS/2 compatible SIMMs command a premium on the used market, though handy hackers can rewire some traces on standard modules to make them work. If your floppy drive can’t be repaired, you’ll need an adapter to use a common one, and you’ll need to 3D print a bracket to mount it. Unless you stick to specific IBM-branded hard disks you’ll need to sacrifice one of your slots for a disk controller or XT-IDE adapter.

And this doesn’t stop with hardware. IBM rewrote its BIOS for the PS/2, so a machine like the Model 30 286 that walks and talks like an AT isn’t actually an AT and shouldn’t be treated as such. The PC AT included a Setup floppy to configure its BIOS settings, and the PS/2 retooled this concept into Reference Disks for Micro Channel models and Starter Disks for ISA models. We gripe about setup disks today, but firmware storage back then was pretty limited and a setup program took up too much space on a ROM or flash chip.

Since the 35 year old battery inside the PS/2’s Dallas clock chip had expired, I needed to replace it. Instead of dremeling out the epoxy and soldering in a new battery, I bought a replacement chip with a removable battery. Next step: the starter disk dance. This was no problem thanks to disk images from the Ardent Tool of Capitalism website. I imaged a floppy on my modern PC, popped it into the PS/2, and booted into IBM’s system setup utility. BIOS configuration is pretty painless for a machine of that era—all I needed to do was set the time. And, credit where it's due, it didn't even complain about Y2K. There’s even a system tutorial on the starter disk, which is surprisingly friendly.

Doing this setup routine reminded me of the last time I ran the starter disk. My original PS/2 came my way thanks to the generosity of one of my middle school teachers. In the spring of ’97 I was a fourteen year old seventh grader who’d earned a reputation as a computer whisperer. This was before formal district IT departments had taken over the management of my middle school’s tech stack, and computer labs were more like fiefdoms of the individual teachers who ran them. If a regular classroom had a computer, that was yet another responsibility thrust upon our overworked and underpaid educators. Precocious kids who spent too much time reading computer books could be tapped to solve pesky computer problems.

Seventh grade was also when students were introduced to their first technology classes. “Technology” was a catch-all term for classes about applied engineering. One day you could be building a balsa wood bridge and testing its weight load, while the next day you could be drawing blueprints in computer aided design software. Mr. Reardon’s computer lab was full of the early nineties PC clones that we’re all trying to recollect today. A motley collection of 386, 486, and Pentium PCs, this local area network introduced me to the magic of AutoCAD.

Across the hall was Mr. Nerrie’s shop. Kids today would call it a “maker space,” what with the fabrication machinery. There were plenty of computers mixed in amongst the lathes and saws: an old Mac Classic, a no-name 386, and a PS/2 Model 30 286. They ran software like circuit building programs, wind tunnel simulators, and bridge construction games. Though the PS/2 wasn’t a speedy machine it eventually told me all the flaws in my designs. Mr. Nerrie had picked up on my affinity for computers, and encouraged me to try board-level repairs. My only experience with building electronics was one of those Radio Shack circuit builder kits, so learning how to use a soldering iron helped me level up my hardware skills.

One morning in April I noticed the PS/2 had vanished from its desk. In its place was a 486 tower that had migrated from Mr. Reardon’s lab. Now the PS/2 was sitting near the outside door alongside a box of accessories.

I asked what had happened to the PS/2, and Mr. Nerrie said that it was destined for the dumpster. Then the gears started turning in my head. “Well, if they’re just throwing it away… can I take it?” After a short pause, he said “Sure, why not. It’s better off being used than in the scrap pile.” I hooked it up to some nearby peripherals and started with the starter disk. After setting up the BIOS, I formatted the hard drive and installed a fresh copy of MS-DOS and Windows 3.1. With a wink and a nod to cover this misappropriation of school property, I could give this machine a second life. Getting it home was a chore—thanks for picking me up, mom. After setting it up in my bedroom, I stared at the DOS prompt. Now that I had this PC, what was I going to do with it?

A PS/2 Model 30 286 wasn’t exactly a barn burner when it was new, and by the time I got one in 1997 it was laughably obsolete. That was the year of the Pentium II and 3dfx Voodoo breaking boundaries in 3D gaming. The only thing more absurd than using a 286 every day in 1997 would be… well, using a C64 every day in 1997. But when you’re lost in an 8-bit desert, any 16-bit machine feels like a refreshing glass of water.

Compared to my Commodore, the PS/2 had some significant advantages. Its 10MHz 286 CPU wasn’t a Pentium, but it was far more capable at crunching numbers than a 6510. 2 MB of RAM dwarfed the 64K that gave the C64 its name. VGA graphics with 256K of video memory gave glorious 256 color video, which was sixteen times the 64’s sixteen colors. 1.4MB floppies had more than eight times the storage of a 170K CBM disk. The cherry on top was the 20MB hard drive, which wasn’t much but was still better than the C64’s nothing. The only advantage the C64 had was its SID sound chip which blew the PS/2’s piezoelectric PC speaker away, Memorex style.
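If you want to check my math on those spec-sheet bragging rights, here is a rough sketch in Python. The numbers come straight from the paragraph above, except the 1440 KB floppy capacity, which is my assumption for the usual formatted size of a "1.4MB" disk. The names `SPECS`, `ratio`, and `compare` are made up for this illustration.

```python
# Back-of-the-envelope comparison of the C64 and the PS/2 Model 30 286.
# Each entry is (C64 value, PS/2 value). The 1440 KB floppy figure is an
# assumption (typical formatted capacity of a high-density 3.5" disk).
SPECS = {
    "RAM (KB)": (64, 2 * 1024),
    "Colors": (16, 256),
    "Floppy capacity (KB)": (170, 1440),
}


def ratio(c64_value, ps2_value):
    """Return how many times larger the PS/2 figure is than the C64 figure."""
    return ps2_value / c64_value


def compare(specs):
    """Map each spec name to its PS/2-over-C64 ratio."""
    return {name: ratio(c64, ps2) for name, (c64, ps2) in specs.items()}


if __name__ == "__main__":
    for name, factor in compare(SPECS).items():
        print(f"{name}: the PS/2 has {factor:.1f}x the C64")
```

By this reckoning the RAM gap is a clean 32x, the color gap is exactly 16x, and the floppy gap lands at about eight and a half.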

Sadly, this machine wasn’t meant for games. The true second wave of DOS PC gaming relied on the combined power of a 386 CPU, SoundBlaster digital audio, and VGA graphics. Even if I added a sound card to the PS/2’s VGA graphics, I would still be missing the third piece of the PC gaming triforce. At least the PS/2 could manage some rounds of SimCity Classic or Wolfenstein 3D. That was fine by me because game consoles filled in the gap. We still had our collection of regular and Super Nintendo games, and my older brother had recently bought a PlayStation thanks to money he earned from his job at Stop and Shop.

The PS/2 might have lacked gaming prowess, but it could do something that my games consoles and C64 couldn’t: connect to the outside world. Included in the accessories box was a 1200 baud Hayes Smartmodem. Before you “well, actually” me, I know that my Commodore could dial into a BBS. There were plenty of C64 BBSes back in the eighties. But our C64 didn’t have a modem and by the time I was old enough to dial in—well, there may have been some Commodore boards left, but I certainly couldn't find them. PC BBSes, though—there were plenty of those.

Being a terminally online teen was harder in 1997. If I wanted to surf the Information Superhighway, I had to do it after school in the computer lab. Even if I could have afforded an ISP’s monthly service costs, a 286 PS/2 couldn’t run a graphical web browser. Bulletin boards were text based and thus had much lower system requirements. And they were free, so even a broke teen like me could use them. But there were strings attached. Because most boards had only one or two phone lines, every user had a connection time limit. After using your hour of credit you were done for the day. The same went for file transfers—if you wanted to download a file you needed to upload something in return lest you be branded a leech. And the sysop who was actually footing the bill could ban anyone at any time for any reason.

Armed with a copy of Telix I downloaded at school and a notepad of numbers from my buddy Scott, I was ready to dial in to my first bulletin board. He recommended The Maze, so I keyed in the number: 413-684-… well, I won’t say the last four digits. After some squawking and buzzing noises from the modem the PS/2 successfully connected to this magical world. I sat and watched as a logo crafted from ASCII text slowly painted line by line across the VGA monitor. 1200 bits per second was excruciating; I was used to my middle school’s blazing fast 128 kilobit frame relay connection. After this interminable wait, I was presented with a login prompt.

I had to register an account to gain access. This meant writing an introductory post to the sysop and creating a handle. Introducing myself was easy enough because Scott was already a Maze member and he could vouch for me. But a handle, that was more difficult. Bandit or Rubber Duck were too obvious. Then it struck me: I could use a character name from a video game. Even though I was hooked on the PlayStation at the time, I still had a soft spot for the Super Nintendo. Final Fantasy was one of my favorite video game series and it was full of memorable characters. It was settled: Kefka would be my handle.

After I filled out the registration form, the board said to check in the next day to see if my account was approved. After a seemingly endless day of school, I raced home to dial in and see if my request had been granted. I powered on the PS/2, launched Telix, and mashed number one in the phone book. After waiting an eternity for the logo to draw line by line, I typed in my user name and password—and it worked! The main menu slowly painted in its options: file downloads, message boards, something called doors—was the owner a Jim Morrison fan? Navigating the board took ages because of the modem’s narrow 120 character per second pipe. In hindsight it was probably a bad idea for a 14 year old kid—even a smart and precocious one like me—to be in an environment like this. The average user of The Maze was a college or late high school student with interests to match. I was a stupid newbie which meant I made a bunch of mistakes. Shep—the sysop—was a friendly enough guy who gave me some firm lessons in board etiquette, like “don’t drop carrier” and “don’t ping the sysop for a chat every time you log in.” I learned quickly enough to avoid being branded a lamer.

But while I never got into trouble online, my bulletin board adventures managed to get me into some trouble offline. Thankfully not the legal kind, as no teenage boy would ever try to download warez or inappropriate material. No, I made the most common mistake for a computer-loving kid: costing your parents money. One day I came home from school to find my mother angrily waving a phone bill in my face. My parents begrudgingly tolerated my modem usage as long as it was early in the morning or late in the evening when we weren’t expecting phone calls. However, there was one rule I literally couldn’t afford to break: no long distance calls.

Massachusetts’ 413 area code spans a lot of area from Worcester county all the way to the New York state border. You could incur toll charges calling within your own area code, and NYNEX offered a Dial-Around plan for those who wanted a consistent bill instead of surprise charges. But most people were like my parents—they didn’t make enough toll calls to justify its price and took their chances on metered billing instead. NYNEX and the other baby Bells published a list of exchanges that were within your local dialing area. Pittsfield’s list felt arbitrary—one number fifteen miles away in Adams was free, while another number eight miles away in Lee was a toll call. So I checked every board’s number to make sure I wouldn’t rack up a bill.

My cunning scheme was undone by the fact that NYNEX would occasionally break apart exchanges and shift around which ones were in your toll-free region. These changes weren't unannounced, but I was fourteen and I didn't read the phone bill. One local BBS number turned into a long distance call overnight, and I wasn’t checking the toll status after marking local numbers. Unbeknownst to me I was racking up toll charges left and right. My free computer and free bulletin boards wound up costing me eighty dollars, and I had to pay back every penny to the bank of mom and dad.

Most of my memories of the summer of 1997 revolve around the PS/2 and the hours I spent dialing in to various bulletin boards. Another teacher lending me a faster modem certainly helped. Mrs. Pughn, who ran the Mac lab, lent me a US Robotics Sportster 9600 modem to use over the break. This was a far more usable experience than my 1200 bit slowpoke. Menu screens painted almost instantly. I could download a whole day’s worth of message board posts for offline reading in two minutes instead of fifteen. That saved valuable time credits that could be spent playing door games instead.
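For the curious, the modem numbers in this story hang together pretty well. Here is a small Python sketch of the arithmetic, assuming the usual 8N1 serial framing (one start bit, eight data bits, one stop bit, so ten bits on the wire per character) and ignoring protocol overhead, compression, and line noise. The function names and the "day of posts" size are my own illustration: I back-calculated the size from the fifteen minutes it took at 1200 bits per second.

```python
# Rough dial-up throughput math. Assumes 8N1 framing, i.e. 10 bits on the
# wire per character; real-world transfers would be a little slower.
BITS_PER_CHAR = 10


def chars_per_second(bps, bits_per_char=BITS_PER_CHAR):
    """Characters per second a modem can move at a given bit rate."""
    return bps / bits_per_char


def transfer_minutes(num_chars, bps):
    """Minutes needed to move num_chars characters at the given bit rate."""
    return num_chars / chars_per_second(bps) / 60


# Hypothetical size of a day's message packet, inferred from
# "fifteen minutes at 1200 bits per second".
day_of_posts = chars_per_second(1200) * 15 * 60

if __name__ == "__main__":
    print(f"1200 bps: {chars_per_second(1200):.0f} characters per second")
    print(f"9600 bps: {chars_per_second(9600):.0f} characters per second")
    print(f"Day of posts at 1200 bps: {transfer_minutes(day_of_posts, 1200):.1f} min")
    print(f"Day of posts at 9600 bps: {transfer_minutes(day_of_posts, 9600):.1f} min")
```

An eightfold jump in line speed turns a fifteen minute download into just under two minutes, which matches what I remember.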

Every bulletin board had its own flavor imparted by the sysop that fronted the cash for its lines and hardware. This was especially true of The Maze, which ran CNet BBS software on an Amiga 4000. I learned that a door wasn’t a music reference but a term for games and software launched by the BBS. One of my favorite door games was Hacker, where other board users set up simulated computer systems that everyone else tries to take down with virtual viruses. I played a decent amount of classics like Legend of the Red Dragon and TradeWars 2002, but nothing quite lived up to Hacker in my eyes.

My uncle’s hand-me-down 486 came with a 28.8K modem, and that opened up even more BBS opportunities. Downloading software, listening to MOD music, and even dial-up Doom sessions were now part of daily life. But the 486 also brought the Internet into my home. By 1997 America Online had an internet gateway and could access the World Wide Web. My uncle was an AOL subscriber, and he convinced my dad to sign up too. Now that I was browsing the web at home, how could a BBS ever compete? BBSes were already declining in 1997, but 1998 was when things really fell apart in the 413. One by one boards went dark as their owners traded BBS software for web servers. I spent the summer of ’98 crafting my first Geocities homepage and getting sucked into my first online fandom: Final Fantasy VII.

By the fall of 1998 The Maze would shut down and my regular usage of BBSes died too. The web was just too compelling. Some 413 regulars tried to set up an IRC channel called 413scene on the EFnet network, but it didn’t last beyond 1999. I still remember boards like The Maze, Mos Eisley, and The Void the way people remember old nightclubs. Handles like Menelaus and Boo Berry still stick with me even though I have no way of contacting them. If you were active in the 413 scene in the late nineties, send me a message. Maybe we crossed digital paths.

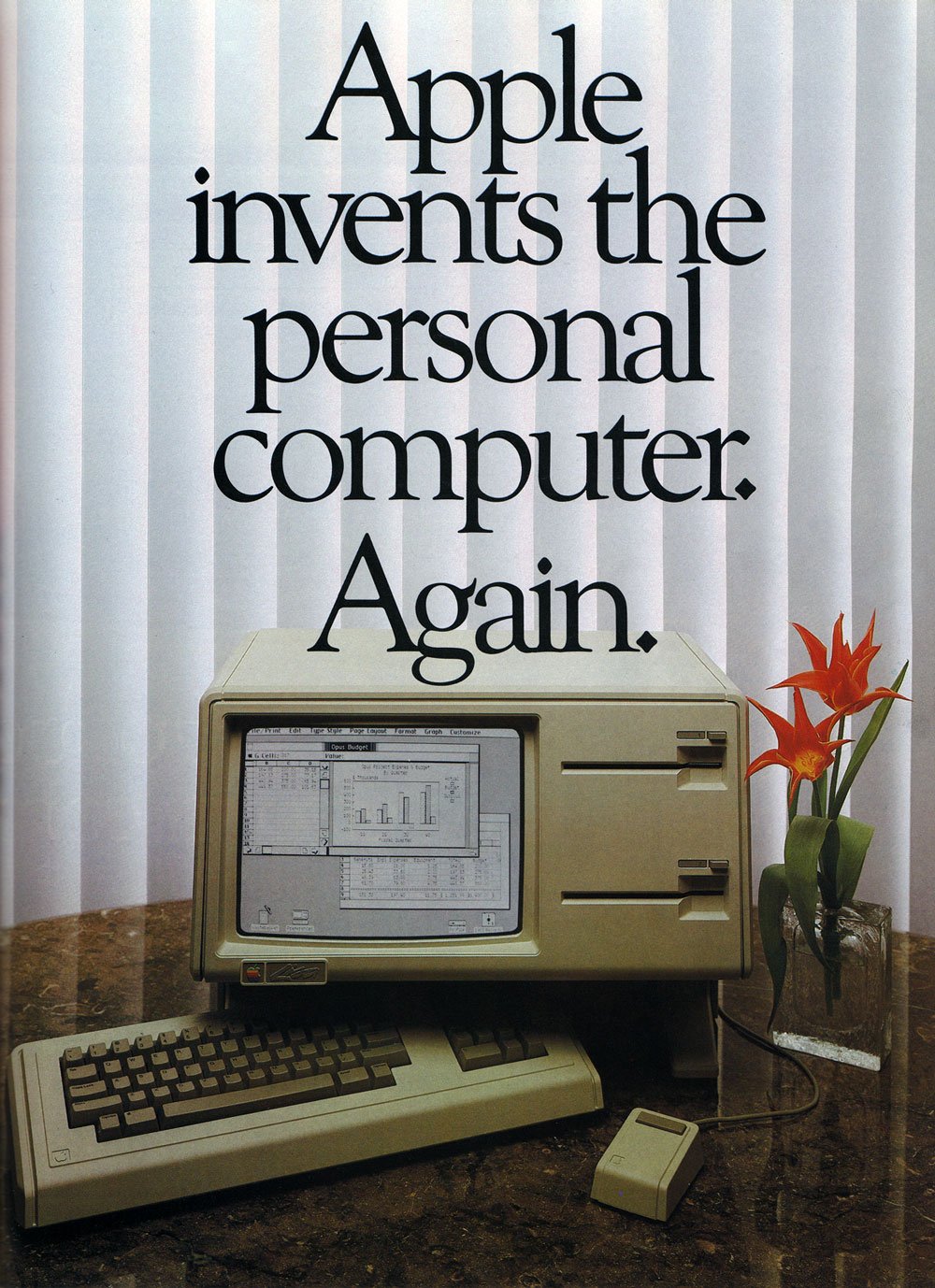

Once upon a time a personal computer was any kind of small system meant for a single user. But somewhere along the way the term came to mean something much more specific: an IBM compatible personal computer. One by one the myriad independent microcomputers of the 1980s succumbed to the “IBM and Compatible” hegemony. Even if you stood proud aboard another platform, it was only a matter of time until IBM and the armada of cloners aimed their cannons at your ship’s market share.

But despite having created the leading personal computing platform of its day, IBM wasn't as in control as they thought. Lawsuits could stop the blatant copies of their BIOS made by the likes of Eagle—but not clean-room reverse engineering. Now cloners are grudgingly tolerated because they followed IBM's standards. But the sheer effrontery of a cloner thinking they can dictate a standard?! Preposterous! We are IBM! We are personal computers!

So when Intel announced the 80386 CPU in October 1985, everyone in the industry—especially Intel—expected IBM to adopt the new 32-bit processor. The 286’s segmented memory model was unpopular with programmers, and the 386 addressed those criticisms directly with an overhauled memory model. And Intel managed to do this without breaking backwards compatibility. This was perfect for the next generation of PCs—great performance for today and 32-bit capability for tomorrow. But the mood in Armonk was muted at best.

Intel planned to ship the 386 in volume in June of 1986, but IBM’s top brass was skeptical that Intel could hit that date based on prior experience with the 286. They also thought that was too soon to be replacing the two year old PC AT. Big Blue’s mainframe and workstation divisions thought a 32-bit personal computer would encroach on their very expensive turf. This was in direct conflict with IBM’s PC pioneer Don Estridge, who saw the potential of the 386 as he watched its development in late 1984 into early ’85. He wanted to aggressively adopt the new CPU, but he faced tough internal barriers. Estridge was losing other political battles inside IBM, and by March 1985 he lost his spot atop the Entry Systems Division to Bill Lowe. After Estridge tragically died in the crash of Delta flight 191 in August 1985, there was no one left in the higher echelons of IBM to advocate for the 386. They were committed to the 286 in both hardware and software. And so IBM gave Intel a congratulatory statement in public and a “we’ll think about it” in private.

But you know who didn’t have any of those pesky internal politics? A feisty little Texas startup called Compaq. Intel wanted a partner to aggressively push the 386, and Compaq wanted to prove that they were more than just a cloner. This was a pivotal moment in the evolution of the PC, because Compaq and Intel weren’t waiting around for IBM to advance the industry standard. Compaq launched the DeskPro 386 in September 1986. It was effectively a slap in the face from Compaq CEO Rod Canion and chairman Ben Rosen, daring IBM to release a 386 machine in six months or lose their title of industry leader. Such a challenge could not go unanswered. Seven months later in April 1987 IBM announced the Personal System/2, a line of computers that thoroughly reimagined what the “IBM PC” was supposed to be. Big Blue would exert their overwhelming influence to change the course of an entire industry, just like they did six years earlier. Or, at least, that was the plan.

The PS/2’s development history isn’t well documented—contemporary sources are thin on details about the engineering team, and there are no present-day oral histories or deep dives from primary sources about its development timeline. According to the book Big Blues: The Unmaking of IBM by Wall Street Journal reporter Paul Carroll, there wasn’t a single 386 chip inside IBM when Compaq announced the DeskPro. I do know that Chet Heath, the architect of Micro Channel, started designing the bus back in 1983. So when Bill Lowe was forced to react to Compaq’s challenge, he saw an opportunity to pair Micro Channel with the 386. IBM announced the Model 80 386 along with its siblings in April of ’87, but it didn’t actually ship until August. That was almost a year after Compaq launched the DeskPro 386. Compaq issued that six-month challenge because they were confident they'd win.

Then again, IBM didn’t see it that way. They weren’t designing just a single computer, but a whole family of computers. The PS/2’s launch lineup—the Models 30, 50, 60, and 80—covered every segment of the market from basic office PC to monster workstation server tower. Besides Micro Channel the PS/2 would usher in new technologies like ESDI and SCSI hard drives, VGA graphics, bidirectional parallel ports, high density 1.4MB 3 1/2 inch floppies, and tool-less case designs. IBM was sure the PS/2 would redefine the trajectory of the entire industry. And, to be fair to Big Blue, that's exactly what happened. Just… not in the way they'd hoped.

Aesthetically the PS/2 was a clean break from IBM’s previous PCs. IBM always had a flair for industrial design, and a PS/2’s bright white chassis featured sharp angles, repeating slats, and a signature blue accent color. They wouldn’t be mistaken for any old clone, that’s for sure. These sharp dressed desktops were also considerably smaller than previous PCs. Integrating more standard features on the motherboard meant fewer slots and cards were needed for a fully functioning system. Disk drives took up a lot of space, and using 3 1/2 inch floppy and hard disk drives let them save many cubic inches. Ultimately, the Model 30's slimline case was almost half the size of a PC AT by volume.

A consequence of this strategy was abandoning the standard AT motherboard layout. Now each model had its own specially designed motherboard—excuse me, planar—and the number of slots you got depended on how much you were willing to spend. The entry-level Model 30 only had three 8-bit ISA slots. The mid-range Model 50 bought you four 16-bit MCA slots. The high-end Models 60 and 80 came in tower cases with eight MCA slots each, three of which were 32-bit in the Model 80 to unleash the power of the 386. It’s ladder-style market segmentation that only an MBA could love.

IBM had a very specific customer in mind when they designed the Model 30: businesspeople who spent all day in WordPerfect or Lotus 1-2-3 and weren’t particularly picky about performance. IBM took the aging PC XT, dumped it in a blender with some PS/2 spices for flavor, and the result was a slimline PC with just enough basic features for basic business work. And if IBM sold a few to the home office types, well, all the better. The stylish new Model 30 was your ticket to the modern business world, and your friends at IBM were happy to welcome you aboard their merry-go-round of service agreements and replacement parts.

A launch day Model 30 with an 8086 CPU, 640K of RAM, 720K floppy drive, and 20 MB hard disk retailed for $2,295 in 1987. If you were strapped for cash, ditching the hard disk for a second floppy would save you $600—which you could use to buy yourself a monitor, because those weren't included. And don't go thinking you'd get full-fat VGA graphics for that price, either—the Model 30 had MCGA, a skim version of VGA that was never widely supported.

PC XT clones were still a thing in 1987, but they were advertised as bargain machines, which the Model 30 was decidedly not. Flipping through the PS/2’s launch issue of PC Magazine shows the state of the market. Michael Dell’s PCs Limited would happily sell you a Turbo XT clone with EGA graphics, 20MB hard drive, and color monitor for $1699. Or you could get an 8MHz 286 for the same price as a Model 30 with hard drive. If you were feeling more adventurous, the back-page advertisers were offering monochrome Turbo XTs for $995 and under. Things aren’t much better when we compare the Model 50 and 60 against Turbo AT clones, let alone the Model 80. CDW offered Compaq DeskPro 386s with 40 meg drives for $4879. A base Model 80—with 1MB of RAM, a 1.4 Meg floppy, and a 44MB hard disk—was just under $7,000.

And IBM’s prices didn’t get any better despite improvements to the Model 30. Fourteen months later, the Model 30 286 came out. With 512K of RAM, VGA graphics, and—oops, no hard drive—it could be yours for just under two thousand dollars. If you wanted a 20MB hard drive, that was a mere $600 extra! These prices looked even worse a few months later, when the cloners would sell you a 10MHz AT with the same specs and a color VGA monitor for $1899. The price for a Model 30 286 with 1MB RAM and 20MB hard disk fell to $1795 in late 1989, but the competition was getting even tougher. By 1990 you could get double the memory, double the hard disk space, and double the processor speed for the same price. All IBM could muster in 1990 was a 40MB hard drive option before discontinuing the Model 30 in 1991.

It’s a pretty cool coincidence that IBM announced the Model 30 286 on the same day that Compaq and eight other manufacturers announced the 32-bit Extended Industry Standard Architecture bus. The new Model 30 286 was seen as an admission by IBM that they couldn’t quite kill ISA despite Big Blue’s protestations. For all of Micro Channel’s vaunted technical superiority, IBM had a hard time convincing others to adopt the bus. The main impediment was royalty fees. Building MCA machines required a license from IBM plus a royalty of up to five percent of your revenue for each machine sold—and back payments for all prior machines sold with the AT bus. Some clone makers did end up licensing MCA. Tandy was the first to sell a third-party MCA machine, and ALR and NCR produced a decent amount. But vendors like Dell backed out because MCA was either too expensive or difficult to implement. And even if you did put the money and effort into it, Micro Channel clones were slow sellers.

Peripheral makers weren’t doing any better. Micro Channel introduced the concept of vendor IDs, which told the computer who made the board and what kind of board it was. These IDs were a requirement for MCA’s self-configuration ability. But IBM slow-rolled the assignment of those IDs, leaving board makers like AST in the lurch when IBM didn’t answer their phones—and that's not just an expression, IBM literally didn't answer when AST called them. Even when IBM got around to assigning IDs, sometimes their left hand issued ones that their right hand had already issued to different vendors, resulting in device conflicts. For a while there was a risk that being “Compliant” would be no better than just assigning yourself an ID number and hoping it wouldn't conflict.

By 1992 Micro Channel’s window of opportunity had closed despite IBM adding it to their RISC workstations. EISA gained a foothold in the server market, VESA launched a new Local Bus standard for graphics cards, and Intel was busy drafting the first version of PCI. The PS/2 wasn’t a complete failure, because IBM did sell a lot of them, but its signature feature ironically worked against their plans to reclaim control of the PC market. Its real legacy was its keyboard and mouse ports along with the VGA graphics standard, because IBM didn't keep them in its hoard along with Micro Channel.

By the fall of 1994 the Personal System brand was dead. The entry-level PS/2s, the PS/1, and the PS/ValuePoint systems for home users fell in battle and were replaced by the Aptiva line of PCs. Higher-end PS/2s were replaced by the “PC Series” computers, which is totally not a confusing name. The clones had won so decisively that they had evolved beyond that simplistic moniker. Compaq, Gateway, Dell, and others joined with Intel to define the standard for PC hardware, and the era of “IBM and compatible” was well and truly dead. It was replaced by the Wintel platform of x86 Intel processors running Microsoft Windows. It was like the end of Animal Farm—or maybe Server Farm. Looking from IBM to the cloners and back to IBM, and not being able to tell which was which.

I still have some misplaced fondness for IBM, even though they haven’t manufactured a PC for nearly twenty years. One part comes from that summer of 1997 where my scrap-heap PS/2 was my way of connecting to a new and unfamiliar world. Another part is industrial design. They’re not Apple, but you can look at an IBM product and know that it was designed by people with principles. The last part is the fact that IBMs were Serious Computers for Serious Business, and having one meant you were a Serious Somebody.

But in order for “nobody ever got fired for buying IBM” to be true, IBM had to remain a safe bet. And while PS/2s were generally reliable and performant machines, IBM had stepped on several strategic rakes with Micro Channel. That, plus uncompetitive pricing, meant that IBM products weren't automatically the safe choice. The mark against clones was that they could be incompatible in ways you didn’t expect. Or, at least, that was true in the mid-80s, but by the time 1990 rolled around BIOS developers like Phoenix, Award, and American Megatrends had everything sorted out. Even if the cloners were competitors, they could work together when it counted and develop standards like EISA and ATA to fill in their technological gaps. If IBM products couldn’t actually do the job you wanted them to do, what was the point of sticking with Big Blue?

So consider the Model 30 286 in this scenario. Because it used 16-bit ISA slots and was an unassuming office machine, it was able to wiggle into more places than a Micro Channel Model 50 could. That’s why the Model 30 286 sold as well as it did to business and government customers, even when faced with stiff clone competition. But even those sales dried up when 286 PCs stopped being competitive. The release of Windows 3.0 and the ascendancy of PC multimedia energized the home PC market, which is where IBM historically had problems—hello, PCjr. It’s not that they didn’t try—see the PS/1 and PS/ValuePoint computers—but, like today, most people were more price sensitive than brand loyal. When they were flipping through mail-order catalogs of Dells and Gateways or going down the aisles of Nobody Beats the Wiz, they weren’t going to spend an extra 20% on the security blanket of an IBM badge. After all, "nobody ever got fired" only really applies to jobs. This was reflected in IBM’s balance sheet, where they posted billions of dollars of losses in 1993.

Thankfully for IBM there was still a place for them to flex their innovative and proprietary muscles, and that was the laptop market. The ThinkPad started out as a PS/2 adjacent project, and the less price sensitive nature of business travelers meant IBM didn’t have to worry about economizing. Featuring tank-like build quality wrapped in a distinctive red-on-black industrial design, the ThinkPad actually stood out from other PC laptops thanks to innovations like the TrackPoint and the butterfly keyboard. But the ThinkPad is best saved for another episode.

I can't recommend a Model 30 286 for, say, your primary retro gaming PC. It’s slower and harder to fix than contemporary AT clones and isn’t quite up to snuff for enjoying the greatest hits of DOS gaming. 386 and 486 PS/2s might have enough CPU power, but finding Micro Channel sound cards is a pain and the same proprietary pitfalls apply. But that doesn’t mean they’re not worth collecting—just have one as your secondary machine or run software that plays to its strengths. They look sharp on display, especially if you can scrounge up all the matching accessories. Besides, Micro Channel machines are historical artifacts that provide a window into another weird, forgotten standard that lost its war. Kinda like Betamax, or SmartMedia cards, or HD-DVD.

Now that we’re thirty years beyond the turmoil of Micro Channel and the clone wars, a PS/2 is no longer bound by the rules and desires of its creator. A computer may just be a tool, and unfeeling Swedish minimalists might say that our things don’t have feelings, but we’re humans and we have feelings, damnit. And an object can express our feelings through its design or function. So while my specific PS/2 didn’t go on a globetrotting adventure to find its way back home, I think spiritually it’s found its way back, and I’ve lovingly restored it just as the Master did to his toaster. While some might scoff at a Model 30 and say “no true PS/2 has ISA slots,” the badge on the front is pretty clear. Although its tenure in my life was a short one, this system was pretty personal to me.

A video version of the Apple IIe Computers of Significant History is now live! It’s re-recorded, remastered, and full of freshly recorded goodness. Check it out!

The video version of the first Computers of Significant History is live! Featuring genuine Commodore 64s and more. Why not give it a watch?

Here in Userlandia, I think I’m a clone now.

Welcome back to Computers of Significant History, an analysis of the history of computing in terms of how it affected the life of one writer/podcaster. In previous episodes, we looked at two pivotal computers from 1983, when I was a baby. Now let's jump forward to 1993, when I was in grade school. The unpredictable and chaotic market for personal computers had settled into a respectable groove. IBM compatibles were number one in home and business computers, with the Macintosh plodding slowly behind them. High powered RISC workstations from Sun, Silicon Graphics, IBM, and Hewlett-Packard had completely overtaken the high end of the market. Commodore was in a death spiral, and Atari had already crashed and burned. Acorn hadn't dropped out of the desktop market just yet, but was finding more success in licensing their ARM architecture for portable devices. Other companies had switched to making their own IBM PC clones… if they hadn't given up on computers entirely. If you wanted to replace your aging Eighties machine, you could get an IBM compatible, or you could get a Mac, or you could sit back and not complain because there were starving children in other countries who didn't have any computers at all.

Jack Welch, recurring guest on the hit TV show 30 Rock. Boo this man.

Attribution: Hamilton83, CC BY-SA 3.0, via Wikimedia Commons

As I mentioned a few episodes back, my family kept a Commodore 64 as our primary computer until 1997. Actually replacing the old Commodore was difficult from a financial standpoint. True, that old C64 was becoming more obsolete every day, but two thousand dollars—in early nineties money—was a tough ask for a working-class family like mine, because General Electric CEO and cartoonish supervillain Jack Welch was busy destroying tens of thousands of lives in his ruthless quest for efficiency and profit. Not that I'm bitter. Jack started his professional life in my hometown of Pittsfield, Massachusetts, but fond nostalgia didn't stop him from lopping off various parts of the city's industrial apparatus and selling them to the highest bidder. First to go was GE’s electric transformer factory, which was raided, closed, and left to rot. Next in line was the defense business, sold to Martin Marietta for three billion dollars. Only GE's plastics division—which, by pure coincidence, is where Welch got his start—was spared. My father was one of thousands laid off from their well-paying blue collar industrial jobs at “the GE.” My dad joined GE straight out of high school, and 25 years later it was all he knew. He had to scrounge for work, and my mom had to start a career too. My oldest brother was a freshman in college, and tuition was priority number one. Big-ticket items like a new computer were way down the list.

A PS/2 Model 30/286. My own photo, but not my own machine.

When mom and dad can’t open their wallets, enterprising teens look for alternatives. Sometime in the spring of 1997 I rescued an IBM PS/2 Model 30/286 from my middle school’s e-waste pile. My tech teacher discreetly permitted this misappropriation of school property, telling me it was better that I took it than have it wind up on the scrap pile. With two whole megabytes of RAM and a whopping great 10MHz 286, that machine could run Windows 3.1… technically. And, technically, you can still listen to music on a hand-cranked gramophone. Running MS-DOS in 1997 wasn’t much of an improvement over the C64 status quo, but there was one thing I could do with the PS/2 that I couldn’t with the Commodore: I could dial into bulletin boards. But those tales are best saved for another day, and perhaps another episode.

The PS/2 and the C64 were uneasy roommates until October 1997, when my uncle made a surprise visit. In the back of his Ford Taurus was none other than his Compaq ProLinea 4/33 with Super VGA monitor and Panasonic color dot matrix printer. He had recently bought a shiny new Pentium II minitower, you see, and the Compaq needed a new home. I was thrilled—I finally had a computer that could run modern software! I didn’t have to stay late after school anymore to write papers in a real word processor. More importantly, the internal 28.8K modem was twelve times faster than the 2400 bits per second slowpoke I’d been using over the summer. I handed the Commodore and PS/2 their eviction notices and installed the Compaq in its rightful spot on the downstairs computer desk.
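For a sense of what that modem jump meant in practice, here's a rough back-of-the-envelope sketch. The 1 MB file size is a hypothetical example, and the math is idealized: it ignores start/stop bits, compression, and line noise, so real-world times would differ.

```python
def transfer_seconds(size_bytes: int, bits_per_second: int) -> float:
    """Idealized transfer time: raw line rate only, no protocol overhead."""
    return size_bytes * 8 / bits_per_second


OLD_BPS = 2400     # the summer's slowpoke modem
NEW_BPS = 28_800   # the Compaq's 28.8K modem

speedup = NEW_BPS / OLD_BPS

# Hypothetical download: a 1,000,000-byte file (an assumption for illustration).
FILE_BYTES = 1_000_000
old_minutes = transfer_seconds(FILE_BYTES, OLD_BPS) / 60
new_minutes = transfer_seconds(FILE_BYTES, NEW_BPS) / 60

print(f"Speedup: {speedup:.0f}x")
print(f"2400 bps: {old_minutes:.1f} minutes")
print(f"28.8K:    {new_minutes:.1f} minutes")
```

Under those assumptions, a download that tied up the phone line for most of an hour shrinks to under five minutes.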

Next up was a thorough inspection of this new-to-me PC. The ProLinea's exterior was… well, it was an exterior. While Compaq had their own stable of design cues, they’re all in service of maintaining the PC status quo. Sure, there are horizontal air vents and an integrated floppy drive, but Compaq’s desktops don’t stand out from the crowd of Dell, Gateway, and AST. Say what you will about IBM, but at least they have a distinct sense of industrial design. You’re more likely to notice the ProLinea’s height, or lack thereof—it was significantly thinner than the average PC clone. An embossed 4/33 case badge proudly announced an Intel 33MHz 486DX inside, but there’s more to a computer than the CPU. How much RAM and hard drive space did it have? What about its graphics and sound capabilities? None of that can be gleaned from the exterior, and the only way to know was to crack open the case.

The ProLinea’s exterior. A well-worn example seen on RecycledGoods.com.

If you're like me—and, let's face it, if you're reading this you probably are—then you would have done what I did: after a few days of using my new computer, I opened it up to see what was inside. Undoing three screws and sliding the cover off was all it took to gain entry. Compaq utilized several tricks to minimize exterior footprint and maximize internal volume. Floppy drives were stacked on top of each other, the power supply occupied the space behind them, and the hard drive stole space above the motherboard. Beside the hard drive was a riser card, which shaved height off the case by changing the orientation of the expansion slots. Three standard 16-bit ISA slots lived on side A of the riser, and one decidedly non-standard half-height ISA slot for Compaq’s proprietary modems lived on side B. One of the full-height slots was populated with a US Robotics 28.8K modem, which was decent for the time. Four SIMMs of 4MB each lived in four slots for a total of 16 megs of memory. A 240MB Quantum hard drive left the PS/2’s 20 meg drive in the dust.

The slots and ports on the ProLinea.

These were sensible specifications for the affordable 486’s golden age of 1992 or ‘93. Aside from a faster CPU, most 486-based computers had two major advancements over their 386 predecessors: an external SRAM cache and VESA local bus graphics. Unfortunately, there’s no level 2 cache in the ProLinea, which puts a bit of a damper on the 486’s performance. Was this lowering the barrier of entry, or artificial segmentation to push people towards a pricier mid-range Deskpro/I? You decide. At least Compaq included local bus graphics by integrating a Tseng Labs ET4000/W32 graphics chip and 1MB of dedicated graphics memory onto the motherboard. Windows performance was more important than ever in 1993, and the W32 variant included Windows graphics acceleration without sacrificing performance in DOS. A lack of cache hurts Excel, but a wimpy graphics processor hurts every application.

But at the time I got this computer, none of that mattered. Cache or no cache, a 33MHz 486 couldn’t hang with a 233MHz Pentium II. Still, it was rare for most PCs to live through the 90s without getting upgrades to extend their lives, and my ProLinea was no exception. I was constantly tinkering with it from the day my uncle gave it to me until its retirement in 2002. After surveying what I had, I prioritized two specific upgrades: a sound card and a CD-ROM drive. Compaq didn’t include any onboard sound in the ProLinea except for the buzzy internal PC speaker. Since the hand-cranked gramophones weren't compatible, you had two choices for better sound: buy an optional sound card or spend even more money on a Deskpro/I with integrated sound. I’m sure Compaq would have preferred the latter.

As a broke teenager, my goal was to get some form of sound card and CD-ROM drive without spending a lot of money. In those days, eBay was still just a startup—I’d never heard of it—so that meant a trip to our local used computer store: ReCompute. Located on First Street in beautiful downtown Pittsfield, ReCompute bought and sold all kinds of old computers and parts. The clerk recommended a double-speed Creative Labs CD-ROM drive which connected to—you guessed it—a Creative Labs SoundBlaster. Sound cards back in the day often had ports to connect a CD-ROM drive, and companies like Creative sold “multimedia upgrade kits” combining a sound card, CD-ROM, cheap speakers, and software. Sometimes you'd get lucky and get a nice encyclopedia and a copy of Sam & Max Hit the Road; other times it'd just be a clump of shovelware to inflate that “dollars of value” sticker.

Before ATAPI, installing a CD-ROM drive into a PC required either a SCSI adapter or a proprietary interface card. There were some sound cards that had cut-down SCSI controllers, but SCSI is overkill for attaching a single CD-ROM drive. If you're selling low-cost upgrade kits, though, every penny matters, so a costly SCSI controller doesn’t make much sense. Luckily, Creative had a margin-padding solution at the ready. Panasonic, the company actually building Creative-branded drives, had their own proprietary CD-ROM interface. It was cheaper than SCSI, used familiar 40 pin ATA cables, and took up minimal board space. Panasonic’s interface lacked SCSI's messy complexity like terminators, so it was simple to install too. Just don’t make the mistake of thinking that Panasonic’s interface was compatible with ATA, even though they used the same cables. The downside to proprietary is that without a matching card—in this case, a SoundBlaster Pro 2.0—the drive might as well be a doorstop. I don’t remember the cost—it couldn’t have been much, honestly—but it was enough that I had to borrow a few dollars from one of my brothers to close the sale.

Then again, you get what you pay for—even if, to 15-year-old me, that was a major investment—and my wonderful bargain of a Creative Combo turned out to be on the unreliable side. It got exasperating, having to fix the speaker jack again and again and again and again. Fortunately, after a rather frustrating year audio-wise, I had both a new 16-bit ESS sound card with wavetable synthesis and a faster 24X ATAPI CD-ROM drive—thank you, birthday and Christmas presents. The 28.8K modem gave way to 56K, which eventually gave way to an ethernet card to connect to a cable modem. Yes, these were all very sensible upgrades, but they’re like adding suspension parts to a car: they’re helpful for handling, but they won’t give the car more power. The ProLinea needed more power, and this brings us to the most momentous upgrade of them all: a Kingston TurboChip.

Kingston’s Air Force ad for the TurboChip.

Based on a 133MHz AMD Am5x86, the TurboChip was a drop-in upgrade CPU clocked four times faster than my 33MHz 486. Although it ran at 133MHz, its architecture is derived from a 486, so its level of performance is similar to a 75MHz Pentium. At a cost of $100 in 1999, the TurboChip was considerably less money than a new computer. Even though upgrade processors are never as good as a new system, it still gave the ProLinea a much needed boost. A 33MHz 486 barely met the minimum requirements for Office 97 and Internet Explorer 4.0, let alone IE 5.0. The TurboChip breathed new life into the sputtering ProLinea, improving performance in those apps and opening doors to new ones. Somehow this computer managed to play a video of the South Park movie, which I'm sure I obtained legally even if I don't remember precisely how. Such a feat would've been impossible without the upgrades. Where the TurboChip wasn’t as helpful was in gaming. Even a speedy 486 couldn’t keep up with the superior floating point performance of a Pentium. Games like Quake were a choppy mess, but I wasn’t missing that much since I could, uh, borrow my brother’s PlayStation.

TigerDirect ad for another Am5x86-based accelerator. AMD sold these processors to companies like Evergreen, PNY, Kingston, and Trinity Works.

But no matter how many upgrades I stuffed into the ProLinea, time was catching up to the aging PC. No further CPU upgrades were available, and that proprietary motherboard layout with the riser card meant I couldn’t swap in a new board without impractical modifications. The hard drive was slow and cramped and the BIOS complained loudly about drives larger than 500MB. I couldn’t fight reality anymore—I needed a whole new computer. Millions of people across America were facing the same conundrum, and wouldn’t you know it, companies like Compaq were right there waiting to give them a hand. They ranked number one in marketshare from 1994 to 2000, and only disappeared from the chart after merging with HP. But they wouldn’t have achieved that market dominance without the ProLinea. How’d they manage that, anyway? Would you believe… boardroom backstabbing?

…Of course you would.
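A quick aside on that roughly 500MB ceiling the BIOS complained about. I never confirmed exactly which limit the ProLinea's BIOS was hitting, so treat this as a sketch under an assumption: that it was the classic barrier of the era, where the BIOS INT 13h disk interface and the ATA drive interface each capped cylinder/head/sector addressing differently, and you were stuck with the smaller value of each field.

```python
BYTES_PER_SECTOR = 512

# Maximum (cylinders, heads, sectors per track) each interface could address.
# Assumption: the ProLinea's BIOS followed these classic limits.
INT13H_LIMITS = (1024, 256, 63)   # BIOS INT 13h disk services
ATA_LIMITS = (65536, 16, 255)     # ATA (IDE) task-file registers

# A BIOS that passes geometry straight through gets the minimum of each field.
cylinders, heads, sectors = (
    min(bios, ata) for bios, ata in zip(INT13H_LIMITS, ATA_LIMITS)
)

limit_bytes = cylinders * heads * sectors * BYTES_PER_SECTOR
limit_mib = limit_bytes / 2**20      # binary megabytes
limit_mb = limit_bytes / 1_000_000   # decimal megabytes, as printed on drive labels

print(f"Usable geometry: {cylinders} x {heads} x {sectors}")
print(f"Ceiling: {limit_bytes:,} bytes = {limit_mib:.0f} MiB ({limit_mb:.0f} MB)")
```

That works out to 504 binary megabytes, or about 528 of the decimal megabytes drive makers advertised, which lines up with a BIOS that gets cranky right around the 500MB mark.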

With years of hindsight, it’s easy to say that Compaq would dominate the PC clone world. After all, they started the fire by building the first commercially successful IBM compatible computer that could withstand legal challenges from Big Blue. But that’s the thing about cloning—once you’ve proven it can be done, someone’s going to copy your copy. Compaq handled competition the best way it could: by becoming a leader. Soon it was IBM against Compaq and the horde of cloners fighting for control of the Intel-based MS-DOS ecosystem. Compaq took the performance crown by shipping the first 80386 PC in 1986, showing that IBM was no longer in control of their own platform.

One reason Compaq beat IBM to the punch was that they were iterating on an already proven design. The Deskpro 386 didn’t have fancy new slots and it wasn’t inventing new video standards. IBM, on the other hand, was hard at work on what they believed would be the true next generation of PCs. Announced in April 1987—seven months after Compaq announced the Deskpro 386—IBM’s Personal System/2 was a declaration that Big Blue was still the leader in personal computing. The PS/2 wasn’t just a new PC AT—it was an actual next generation PC platform. It introduced standards that lasted for decades, like VGA graphics and their eponymous keyboard and mouse ports. With such a show of engineering force, IBM was sure that all of those copycat cloners would fall in behind the might of Big Blue. How else could they stay “IBM compatible?”

IBM’s grand plan for regaining control of the PC platform came in the form of Micro Channel Architecture. While Compaq beat IBM to shipping a 386 PC, they did so by using the same 16-bit AT bus—better known as ISA… or eye-sah… however it’s pronounced—found in every other PC clone. Of course, the Industry Standard Architecture wasn't industry standard because it was particularly good. It was industry standard because IBM's Boca Raton dev team decided to publish the specs for anyone to read and copy, royalty-free. The explosive popularity of IBM’s PC and PC AT combined with a royalty-free bus created a fertile field for all kinds of add-in cards. Its open nature also meant a cloner could include ISA slots on a motherboard. But ISA had its limits. With a maximum width of 16 bits and sensitive clock timing, ISA was too slow to take full advantage of the 386. Plus, installing ISA cards required arcane rituals like setting jumpers or DIP switches to configure memory addresses and interrupt requests—and woe betide you if those settings were hard-wired.

Micro Channel Slots. Attribution: Appaloosa, CC BY 4.0, via Wikimedia Commons

In 1986, shipping a machine with the ISA bus was a smart choice despite its limitations. 32-bit memory could be put on SIMMs or proprietary memory boards and avoid the worst of ISA’s speed penalties while keeping ISA slots free for peripheral cards. Even if a 32-bit bus was available, most peripherals of the era wouldn’t saturate it. For the time being, keeping compatibility with existing cards was the winning move for Compaq. But that wouldn’t always be true—ISA needed to be replaced some day. IBM decided that day was April 2, 1987—the PS/2’s launch—and the boys from Boca thought they had a winner. MCA slots had advanced features like plug-and-play software configuration, 32-bit bus width, and more megahertz for more throughput. But all these benefits came with a catch: MCA used a completely different connector than ISA, breaking compatibility with existing cards. That wouldn’t have been so bad if IBM had included an ISA slot or two in MCA PCs, but MCA was an all-or-nothing proposition. Software configuration required system-specific disks that you’d better not lose, unlike the literal plug-and-play found in NuBus on the Mac or Zorro on the Amiga. But the most aggravating thing of all was that IBM patented Micro Channel. After all, MCA took a lot of research and development, and that didn’t come for free. They thought everybody would line up to integrate this next-generation bus and wouldn’t mind paying for the privilege.

8-bit ISA, 16-bit ISA, and 32-bit EISA cards.

Attribution: Nightflyer, CC BY-SA 3.0, via Wikimedia Commons

It wasn’t long before IBM’s grand plan collapsed under the weight of their hubris. Compaq and the other cloners weren’t willing to give IBM a chunk of money for every machine they built. Instead, Compaq led a group of eight other companies in designing their own 32-bit expansion slot called the Enhanced Industry Standard Architecture, or EISA. Or “Eee-sah.” Still not sure how that’s pronounced. Backwards compatible and royalty free, EISA meant that no one needed to license Micro Channel, and MCA slots never went mainstream. Then again, EISA never went mainstream either; it was mostly found in workstations and servers. Most PCs would have to wait until the arrival of PCI to finally kill ISA dead.

While Compaq was a market leader, they weren’t without their faults. Truthfully, they weren’t that different from Apple in terms of how they pitched and priced their products. Compaq’s main clientele were businesses, power users, and professionals who demanded powerful machines that cost less than IBM’s. Other cloners, like AST, Dell, and Zenith, were all competing with Compaq in that same market, but they were more popular in mid-range segments where they were constantly undercutting each other. If you’re too thrifty for a name brand, white-label PCs from places like Bob's House of Genuine Computer Parts, wink wink, or Mad Macy’s Mail Order Motherboards were always an option. Buyer beware, though—most of these small fry lacked the kind of warranty or support that you’d get from a brand name company.

Everything changed when Packard Bell and Gateway 2000 attacked. These upstarts were building computers with specs that could trade blows with the more prestigious companies while selling at white-label prices. Gateway was a mail-order operation, while Packard Bell attacked the growing big-box retail segment. Dell, AST, and other cloners responded by lowering prices and building cheaper PCs. Compaq didn’t, and their balance sheet suffered accordingly. Boardroom battles erupted in 1991 between chairman Ben Rosen and CEO Rod Canion. Rosen wanted Compaq to aggressively pursue the home and entry-level markets, while Canion wanted to stay the course. He was one of Compaq’s founders, and the company had amazing success under his leadership. Compaq was still making money hand-over-fist, so if it ain’t broke, don’t fix it, right?

Compaq's corporate reckoning came on October 23, 1991, halfway through that year's Fall COMDEX. Faced with the company's first quarterly loss, Rod Canion had to take serious action. The next day he laid off over 1,400 employees and then presented an eighteen-month plan to attack the entry-level market. For most companies this would have been a sensible turnaround plan. But what Canion didn’t know was that Rosen had dispatched a team to Las Vegas to covertly attend COMDEX and do a little recon. That secret team put together an alternate plan that could bring a low-cost PC to market by the summer of 1992—half the time of Canion’s proposal. With his new strategy in place, Rosen and the board fired Canion on October 25th, 1991—the final day of COMDEX.

The ultra-slim ProLinea 3/25ZS as seen in a Compaq brochure.

Compaq’s COO Eckhard Pfeiffer was promoted to CEO and the company poured everything it had into building a new low-cost product line. Announced on June 15, 1992, Compaq’s new ProLinea range of personal computers arrived a month ahead of schedule and with much fanfare for the press. Tech company press releases can get pretty schlocky, and Compaq’s were no exception. A nameless Compaq executive really thought they hit the jackpot with “the Goldilocks strategy” of offering papa, mama, and baby computers. That’s not subtext, it’s actual text! I don’t like this analogy for a variety of reasons, mainly because it’s creatively bankrupt and condescending. I’m sure the nameless executive thought this was their most brilliant idea, even though they fundamentally misunderstood both the setup and moral of Goldilocks and The Three Bears.

Still, you gotta work with what they give you. If the existing Deskpro/M was Papa Bear, the new Deskpro/I was Mama Bear and the ProLinea was Baby Bear. Starting at $899, the tiny two-slot ProLinea 3/25ZS was a warning to other low-cost makers that Compaq was ready for war—price war. Joining the compact ZS series was a bigger three-slot ProLinea S desktop with a 5 1/4” drive bay and options for more powerful processors. If neither of those was enough for you, the Deskpro/I and /M were there to satisfy all your power user needs. It was up to you to determine which machine was Just Right… and then eat its porridge and sleep in its bed? My opinion of fairy-tale-based marketing strategies aside, these machines were an immediate hit. Compaq didn’t let off the gas, either—a year later in 1993 they simplified the lineup by retiring the 386 CPUs and ditching the undersized ZS model, so that was goodbye to one of the baby bears. The S model was now the standard ProLinea, featuring CPUs ranging from a 25MHz 486SX to a 66MHz 486DX2. 4 megabytes of RAM came standard, and hard drive sizes ranged from 120 to 340 MB. In addition to all the standard specs, Compaq had a long options list of modems, storage, networking, and multimedia.

A Compaq Ad from 1993 featuring the new ProLinea and Deskpro families.

How much did my uncle pay for his sensible mid-range computer in 1993? I hit the books and found several reviews of the ProLinea 4/33. My old standby of PC/Computing reviewed the 1992 models, which had plenty of useful information, but for accuracy’s sake I needed a 1993 review. PC Magazine’s September 1993 value PC roundup had just what I needed. Roundup reviews like these are a fun relic of the electronics press—a time long past when budgets were big enough that editors could write a bunch of checks to review ten computers at once. PC Magazine staff writer Oliver Rist was generally positive on the ProLinea, citing its competitive performance at a low price along with Compaq’s above-average service and support. His only knock was against the video chipset, which doesn’t really square with the results in the benchmark charts. The ProLinea is right in the middle of the pack for the Graphics WinMark scores, with only a few outliers completely destroying the rest of the competition.

PC Mag’s ProLinea came with 8 megs of RAM, a 240MB hard drive, dual floppies, and a monitor for the cool cost of $2300. That was still a decent chunk of change for a computer, but a year earlier a powerful Compaq Deskpro with a 33MHz 486DX cost nearly three times as much. Now ordinary people could buy Windows PCs that could run multiple applications simultaneously with an… acceptable level of performance! Until the machine crashed or froze, of course, because we're still talking about Windows 3.1. Still, you could do a lot worse in 1993 than these PCs.

The ProLinea was step one in Compaq’s multi-point plan for world domination. If the new game was being number one in marketshare, then so be it, they would be number one. First, Compaq changed their sales strategies by adding new channels in addition to their traditional dealer network. The most obvious move was creating a new factory direct sales operation to compete head-to-head with Gateway and Dell. Next, they needed to counter Packard Bell in the growing big box retail segment. Stores like Circuit City, Nobody Beats the Wiz, and even Sears were pushing computers as they became cheaper and more mainstream. Apple Performas and IBM PS/1s were already in stores, and Compaq joined the fray with the Presario in 1993. Originally an all-in-one model, the Presario name grew to represent Compaq’s entry-level retail brand. For a while the same desktops and towers were labeled as ProLinea or Presario depending on whether they were sold in dealer or retail channels, but by the end of 1996, Compaq realized that was silly and condensed everything under the Presario label.

Think about famous computer names—ThinkPad, Macintosh, Vaio. All of those brands conjure up something specific, something emotional. ThinkPad is a black-and-red machine that means business and reliability. Macintosh means style, ease of use, and “it just works.” Vaio evokes cutting-edge hi-fi design and multimedia prowess. When I hear Presario, I think of nondescript beige boxes that were no different than a dozen other PCs. Far more important than the Presario's B-list name was its A-list marketing strategy, though. Compaq’s aggressive marketing combined with just the right level of hardware for the average user meant that millions of people connected to the web for the first time thanks to a Compaq computer. Presario had enough recognition to get some eulogies when HP retired the Compaq and Presario names in 2013. The ProLinea, though... as far as I can tell, nobody cared enough to write an article, or even a press release, about the retirement of Compaq's first entry-level computer brand.

I moved on from the trusty ProLinea in the year 2000 when I bought a Hewlett-Packard Pavilion with a 600MHz Pentium III, using my salary as a supermarket cashier. My dad kept the Compaq as his own personal machine, but even his tolerance for slow computers had a limit. He replaced it in October 2002 with a Compaq Presario from Staples—something in the 5000 series that had a white case with transparent gray plastic. What happened to the ProLinea after that? I have no idea. I was off to college at the time, and my younger sister wasn’t far behind. With the last of their children ready to leave the nest, my parents cleaned out the detritus generated by three sons and a daughter. Maybe it went to some e-waste pile, or maybe it was picked up by someone who cared about old technology. Hopefully it was the latter.

Unlike the move from the Commodore to the Compaq, my next PC wasn’t as much of a quantum leap. It still ran Windows, it still connected to the internet, and it still played games—it just did them all faster and with more bells and whistles. By the late 90s most traces of personality were beaten out of most PCs, leaving the workstation makers and Steve Jobs’ resurgent Apple as the only real purveyors of character. I suppose that’s the nature of many mass-market products—a Sony Walkman was a novel idea, and then the portable tape player market slowly grew stale as manufacturers built disposable items at the lowest possible cost. To their credit, Sony kept at it until the bitter end, and they still manage to put a bit of character in everything they make.

A portrait of the author’s uncle as a younger man.

So why does this boring bland basic beige box—which didn’t stick out from the crowd at all—still have a place in my heart? It’s because it was from my uncle, of course. As a microbiologist, he was deeply involved with science and technology. He saw my growing love of computers and tech and wanted to help me towards a career in that field. Yes, he knew I would spend just as much time playing games or surfing online as using it for schoolwork. But that’s OK—just having an environment to explore was enough. The world was growing more connected by the day, and you could get on board, or be left behind.

It’s hard not to look at the millions of Wintel machines shipped during the nineties and ask “where’s the character?” After all, they looked the same, used the same processors, and ran the same operating systems. Few manufacturers innovated and many ended up chasing trends, Compaq included. But the mistake I made was not recognizing that even the most neutral of computers is colored by its user. Every vintage PC I’ve picked up has some story to tell. A machine with bone-stock hardware can have the wildest software lurking on its hard drive. An unassuming beige box can conceal massive modifications. There was nothing unique or special about this particular computer—at least, not until I hot-rodded it with a bunch of upgrades. It didn’t really matter that it was a Compaq—the role could have been played by a Gateway, Packard Bell, or even a Zeos and the show would have gone on. I would’ve upgraded and stretched out any PC I owned, because it’s my nature.

I’ve grumbled quite a bit in various episodes about what we’ve lost from the golden age of microcomputing. I can’t help it; middle-age nostalgia is brain poison, and it’ll infect you if it hasn’t already. But as I’ve gotten back into serious computer history research, my old-man-yells-at-cloud instincts have given way to a more pleasant sense of wonder. By itself, a computer is just a steel box with some sand inside of it. Whether it’s a common Compaq or a colossal Cray, a computer can’t do anything without a person behind it. That was true in the eighties during the golden age, it was true in the nineties, and it’s still true today.

So even though Windows was kinda crashy and software never quite worked the way it was supposed to, things in the nineties were a Hell of a lot easier to use than they were in the eighties—and more reliable to boot. Maybe the lack of platform diversity was worse for us nerds, but it was better for society for us to settle down a bit and not introduce mutually incompatible computers every couple of years. All of the criticisms of machines like the ProLinea, and the Presarios that replaced it, were correct. Still, without this army of beige PCs heralded by Compaq, maybe the world wide web wouldn’t have taken off like it did. Maybe I’ve been a little too hard on beige. But at the end of the day, we all want something that gets out of our way and lets us be who we are. What’s more beige than that?

Here in Userlandia, an Apple a day keeps the Number Muncher at bay.

Welcome back to Computers of Significant History, where I chronicle the computers crucial to my life, and maybe to yours too. If you’re like me and spent any time in a US public school during the eighties or nineties, you’ve likely used a variant of the Apple II. As a consequence, the rituals of grade school computer time are forever tied to Steve Wozniak’s engineering foibles. Just fling a floppy into a Disk II drive, lock the latch, punch the power switch... and then sit back and enjoy the soothing beautiful music of that drive loudly and repeatedly slamming the read head into its bump stops. Sounds like bagpipes being repeatedly run over, doesn't it? If you're the right age, that jaw-clenching, teeth-grinding racket will make you remember afternoons spent playing Oregon Trail. ImageWriter printers roared their little hearts out, with their snare drum printheads pounding essays compiled in Bank Street Writer onto tractor feed paper, alongside class schedules made in The Print Shop. Kids would play Where in the World is Carmen Sandiego at recess, and race home after school to watch Lynne Thigpen and Greg Lee guide kid gumshoes in the tie-in TV show. Well, maybe that one was just me. Point is, these grade school routines were made possible thanks to the Apple II, or more specifically, the Apple IIe.

The Apple IIe.

Unlike the BBC Micro, which was engineered for schools from the start, the Apple II was just an ordinary computer thrust into the role of America’s electronic educator. Popular culture describes Apple’s early days as a meteoric rise to stardom, with the Apple II conquering challengers left and right, but reality is never that clean. 1977 saw the debut of not one, not two, but three revolutionary personal computers: the Apple II, the Commodore PET, and the Tandy Radio Shack 80—better known as the TRS-80. Manufacturers were hawking computers to everyone they could find, with varying degrees of success. IBM entered the fray in 1981 with the IBM PC—a worthy competitor. By 1982, the home computer market was booming. Companies like Texas Instruments, Sinclair, and Atari were wrestling Commodore and Radio Shack for the affordable computer championship belt. Meanwhile, Apple was still flogging the Apple II Plus, a mildly upgraded model introduced three years prior in 1979.

Picture it. It's the fall of 1982, and you're a prospective computer buyer. As you flip through the pages of BYTE magazine, you happen upon an ad spread. On the left page is the brand new Commodore 64 at $595, and on the right page is a three-year-old Apple II Plus at $1530. Both include a BASIC interpreter in ROM and a CPU from the 6502 family. The Apple II Plus had NTSC artifact color graphics, simple beeps, and 48K of RAM. True, it had seven slots, which you could populate with all kinds of add-ons. But, of course, that cost extra. Meanwhile, the Commodore had better color graphics with sprites, a real music synthesizer chip, and 64K of RAM. Oh, and the Commodore was barely more than a third of the price. Granted, that price didn’t include a monitor, disk drive, or printer, but both companies had those peripherals on offer. Apple sold 279,000 II Pluses through all of 1982, while Commodore sold 360,000 C64s in half that time. In public, Apple downplayed the low-end market, but buyers and the press didn’t ignore these new options. What was Apple doing from 1979 until they finally released the IIe in 1983? Why did it take so long to make a newer, better Apple II?

Part of it is that for a long time a new Apple II was the last thing Apple wanted to make. There was a growing concern inside Apple that the II couldn’t stay competitive with up-and-coming challengers. I wouldn’t call their fears irrational—microcomputers of the seventies were constantly being obsoleted by newer, better, and (of course) incompatible machines. Apple was riding their own hype train, high on their reputation as innovators. They weren’t content with doing the same thing but better, so they set out to build a new clean-sheet machine to surpass the Apple II. To understand the heroic rise of the IIe, we must know the tragic fall of the Apple III.

The Apple III.

When Apple started development of the Apple III in late 1978, IBM had yet to enter the personal computer market. Big Blue was late to the party and wouldn't start on their PC until 1980. Apple had a head start and they wanted to strike at IBM’s core market by building a business machine of their own. After releasing the Apple II Plus in 1979, other Apple II improvement projects were cancelled and their resources got diverted to the Apple III. A fleet of engineers were hired to work on the new computer so Apple wouldn’t have to rely solely on Steve Wozniak. Other parts of Apple had grown as well. Now they had executives and a marketing department, whose requirements for the Apple III were mutually exclusive.

It had to be fast and powerful—but cooling fans make noise, so leave those out! It had to be compatible with the Apple II, but not too compatible—no eighty columns or bank-switching memory in compatibility mode! It needed to comply with incoming FCC regulations on radio interference—but there was no time to wait for those rules to be finalized. Oh, and while you’re at it... ship it in one year.

Given these contradictory requirements and aggressive deadlines, it's no surprise that the Apple III failed. If this was a story, and I told you that they named the operating system “SOS,” you'd think that was too on the nose. But despite the team of highly talented engineers, the dump truck full of money poured on the project, and what they called the Sophisticated Operating System, the Apple III hardware was rotten to the core. Announced in May 1980, it didn’t actually ship until November due to numerous production problems. Hardware flaws and software delays plagued the Apple III for years, costing Apple an incredible amount of money and goodwill. One such flaw was the unit's propensity to crash when its chips would work themselves out of their sockets. Apple’s official solution was, and I swear I'm not making this up, “pick up the 26-pound computer and drop it on your desk.” Between frequent crashes, defective clock chips, and plain old system failures, Apple eventually had to pause sales and recall every single Apple III for repairs. An updated version with fewer bugs and no real-time clock went on sale in fall 1981, but it was too late—the Apple III never recovered from its terrible first impression.

Apple III aside, 1980 wasn’t all worms and bruises for Apple. They sold a combined 78,000 Apple II and II Plus computers in 1980—more than double the previous year. Twenty-five percent of these sales came from new customers who wanted to make spreadsheets in VisiCalc. Apple’s coffers were flush with cash, which financed both lavish executive lifestyles and massive R&D projects. But Apple could make even more money if the Apple II was cheaper and easier to build. After all, Apple had just had an IPO in 1980 with a valuation of 1.8 billion dollars, and shareholder dividends have to come from somewhere. With the Apple III theoretically serving the high end, it was time to revisit those shelved plans to integrate Apple II components, reduce the chip count, and increase those sweet, sweet margins.

What we know as the IIe started development under the code name Diana in 1980. Diana’s origins actually trace back to 1978, when Steve Wozniak worked with Walt Broedner of Synertek to consolidate some of the Apple II’s discrete chips into large scale integrated circuits. These projects, named Alice and Annie, were cancelled when Apple diverted funds and manpower to the Apple III. Given his experience with those canned projects, Apple hired Broedner to pick up where he left off with Woz. Diana soon gave way to a new project name: LCA, for “Low Cost Apple,” which you might think meant “lower cost to buy an Apple.” In the words of Edna Krabappel, HAH! They were lower cost to produce. Savings were passed on to shareholders, not to customers. Because people were already getting the wrong idea, Apple tried a third code name: Super II. Whatever you called it, the project was going to be a major overhaul of the Apple II architecture. Broedner’s work on what would become the IIe was remarkable—the Super II team cut the component count down from 109 to 31 while simultaneously improving performance. All this was achieved with near-100% compatibility.

Ad Spread for the IIe

In addition to cutting costs and consolidating components, Super II would bring several upgrades to the Apple II platform. Remember, Apple had been selling the Apple II Plus for four years before introducing the IIe. What made an Apple II Plus a “Plus” was the inclusion of 48 kilobytes of RAM and an Applesoft BASIC ROM, along with an autostart function for booting from a floppy. Otherwise it was largely the same computer—so much so that owners of an original Apple II could just buy those add-ons and their machine would be functionally identical for a fraction of the price. Not so with the IIe, which added more features and capabilities to contend with the current crop of computer competitors. 64K of RAM came standard, along with support for eighty-column monochrome displays. If you wanted the special double hi-res color graphics mode and an extra 64K of memory, the optional Extended 80-Column Text Card was for you. Or you could use third-party RAM expanders and video cards—Apple didn’t break compatibility with them. Users with heavy investments in peripherals could buy a IIe knowing their add-ons would still work.

Other longtime quirks and limitations were addressed by the IIe. The most visible was a redesigned keyboard with support for the complete ASCII character set—because, like a lot of terminals back then, the Apple II only supported capital letters. If you wanted lowercase, you had to install special ROMs and mess around with toggle switches. Apple also addressed another keyboard weakness: accidental restarts. On the original Apple II keyboard, there was a reset key, positioned right above the return key. So if your aim was a quarter inch off when you wanted a new line of text, you could lose everything you'd been working on. Today that might seem like a ridiculous design decision, but remember, this was decades ago. All these things were being done for the first time. Woz was an excellent typist and didn't make mistakes like that, and it might not have occurred to him that he was an outlier and that there'd be consequences for regular people. Kludges like stiffer springs or switch mods mitigated the issue somewhat, but most users were still one keystroke away from disaster.

The IIe’s keyboard separated the reset key from the rest of the board, and a restart now required a three-finger salute of the control, reset, and open-Apple keys. Accidental restarts were now a thing of the past, unless your cat decided to nap on the keyboard. Next, a joystick port was added to the back panel, so that you didn't have to open the top of the case and plug joysticks directly into the logic board. A dedicated number pad port was added to the logic board as well. Speaking of the back panel, a new series of cut-outs with pop-off covers enabled clean and easy mounting of expansion ports. For new users looking to buy an Apple in 1983, it was a much better deal than the aging II Plus, and existing owners could trade in their old logic boards and get the new ones at a lower price.

A Platinum IIe showing off the slots and back panel ports.