The Mystery of Mac OS’ Mangled Image Interpolation Implementation

Here in Userlandia, I’m talking rainbows, I’m talking pixels.

Bugs. Glitches. Unintended consequences. Computer software, like everything made by us imperfect humans, is full of imperfections of its own. When weird things happen, most people just mutter and/or swear. But I'm one of the few who feel compelled to learn why. When there’s something strange in the Network Neighborhood, I’m the one you call. But there’s nothing supernatural about software. Computers do exactly what they’re told, like a vexatiously literal genie. It’s not always obvious why bad things happen to good programs. And, as with any whodunit, the answer may only be obvious in retrospect.

One such mystery crossed my path back in June. I ran into an interesting thread on one of my usual Mac haunts: Ars Technica’s Macintoshian Achaia forum. Forum user almops was having a weird problem with Keynote. When a specific PDF was placed into Keynote, its contents—a series of colored squares—became a smooth rainbow gradient! Don't get me wrong, rainbows look cool, but they're not helpful when you need distinct solid blocks of color. The PDFs in question had been created by a suite of command line apps called generic-mapping-tools, or GMT, which generates maps and map accessories… like color bars. Almops said Adobe Acrobat displayed the PDF correctly, as did Chrome, and PDF viewers on other operating systems. Anything Apple, on the other hand—be it iWork, Preview, or Safari—displayed those color blocks as a gradient, ruining his presentation.

When I saw that thread, I knew I had to tackle the mystery. It’s the kind of obscure problem that calls for my very particular set of skills, skills I acquired over a long career. For fifteen years I worked for OEMs in the graphic arts industry—more specifically, in workflow software. These applications do the hard work of managing color, rasterizing vectors, and compositing transparencies so designs can be put on paper, film, or plates. I was part of the QA teams for these companies, where I designed features, sniffed out bugs, and figured out why things go sideways. This wasn't the first time I'd seen an interpreter mangle something beyond recognition, but there's almost always a way to work around it. I requested a copy of the problem file, and almops sent along both the PDF they imported into Keynote and the PostScript file used to generate said PDF. Concealed in those files was code that could clarify this calamitous conundrum of colorful confusion. Time to put on the deerstalker cap and do some old-fashioned detective work.

Layers of Quartz

This mystery revolves around Quartz, the display engine at the heart of Apple’s operating systems. Every copy of Mac OS (and iOS) uses Quartz to draw and composite on-screen graphics. The special thing about Quartz is that its programming model is based on PDF. That's why Mac OS applications can import PDFs into their documents without needing to roll their own PDF import routines. This is a legacy inherited from Mac OS X’s predecessor, NeXTSTEP. Though Mac OS’s Quartz is very different from NeXT’s Display PostScript, both systems are designed to bring the flexibility and fidelity of a print-oriented graphics model to a computer display.

Display PostScript had a lot of intricacies and gotchas—and I’m not even talking about the licensing fees. NeXTSTEP’s window server was a Display PostScript interpreter which executed PostScript code to update the display. When NeXTSTEP was remodeled into Mac OS X, Apple replaced Display PostScript with the Quartz display model. Quartz isn’t just a renderer—it’s a complete technology stack. One facet is Quartz 2D, better known today as Core Graphics. Quartz 2D is the graphics framework that does the hard work of drawing and rasterizing the contents of your windows. Those graphics are then passed on to the Quartz Compositor—also known as Mac OS’ Window Server—which composites all the windows together into a complete computer display.

Separating rendering from compositing was the trick that let Mac OS X build compatibility for legacy graphics and lead us into the future. Now the OS could easily combine the results of very different graphics APIs. Quartz 2D and the Cocoa framework were the way of the future, but apps built using the Carbon framework could carry over QuickDraw routines from classic Mac OS. QuickTime and OpenGL could render video and 3D graphics. Quartz Compositor combined the results from all these graphics libraries into one coherent display. Another advantage of this model was its extensibility—new libraries and APIs could be added without reinventing the entire display model, something that was very difficult to do in classic Mac OS.

An average user on the web might say “I’m not a developer. Why should I care what Quartz 2D can do for me?” Well, being able to print anything to a PDF file in Mac OS without shelling out big bucks for a copy of Adobe Acrobat Pro is pretty big. So is being able to import a PDF into almost any application. Since PDF descends from PostScript, it’s still code that needs to be interpreted by something to display a result. That something could be a viewer application, like Adobe Acrobat, PDFPen, or PDF Expert. It could be an editor, like Callas PDFToolbox, Markzware FlightCheck, or Enfocus Pitstop Pro. Or it could be a renderer, like Adobe PDF Print Engine, Global Graphics Harlequin, or Quartz 2D. Because PDF is a codified standard, all of these applications adhere to the rules and principles of that standard when interpreting PDFs. Or, at least, that's what's supposed to happen.

An example of banding.

Almops’ PDF problem was perplexing, that’s for sure. My first theory was a blend detection bug. Making gradients in older versions of PostScript and PDF wasn’t easy. In PostScript Level 1 and 2, gradients were built from an array of paths of varying color values. Think of it like arranging a series of color slices that, from a distance, look like a smooth gradation. There were a lot of problems with this, of course—too many slices, and the interpreter would run out of memory or crash. Not enough slices, and it would show hard color edges instead of a smooth blend. This is called banding, and it looks really awkward. Most interpreters detected these arrays as blends and post-processed them to improve their smoothness. Since the introduction of PostScript Level 3, making a gradient in an application is super easy. Set the start and end points along with the number of colors in-between, and ta-da—your PDF or PS file has an actual gradient object called an shfill. But there’s still plenty of old-school Level 1 and 2 blends out there, and maybe that's what Quartz thought almops’ color bar was.

This theory was quickly disproven when I used Pitstop Pro’s inspector to examine individual objects. I discovered that they weren’t a series of fills, but an image! This couldn’t be—what would cause an image to transform into a gradient? An image should just be an image! Unlike a vector object, which needs to be rasterized, an image is just a series of pixels! All it needs is scaling to render at the appropriate size. What could possibly have happened to transform these chunky blocks of color into a smooth gradient?

I needed to look closer at the image’s details. I’m not talking about zooming in—I wanted to see the metadata attributes of the image. Once again, it's Pitstop’s inspector to the rescue. It was an RGB image, eight bits per pixel, and four inches tall by four tenths of an inch wide. In pixels, it was ten pixels tall by one pixel wide, giving an effective DPI of about two and a half... wait, what? ONE pixel wide?! I opened the image in Photoshop, and confirmed the ghastly truth: Almops' image was a single pixel wide. At one pixel wide by ten pixels tall, each pixel was a single block in the color bar. The rainbow, I realized, was the result of Keynote upscaling the lowest-resolution image possible.

Resolving Power

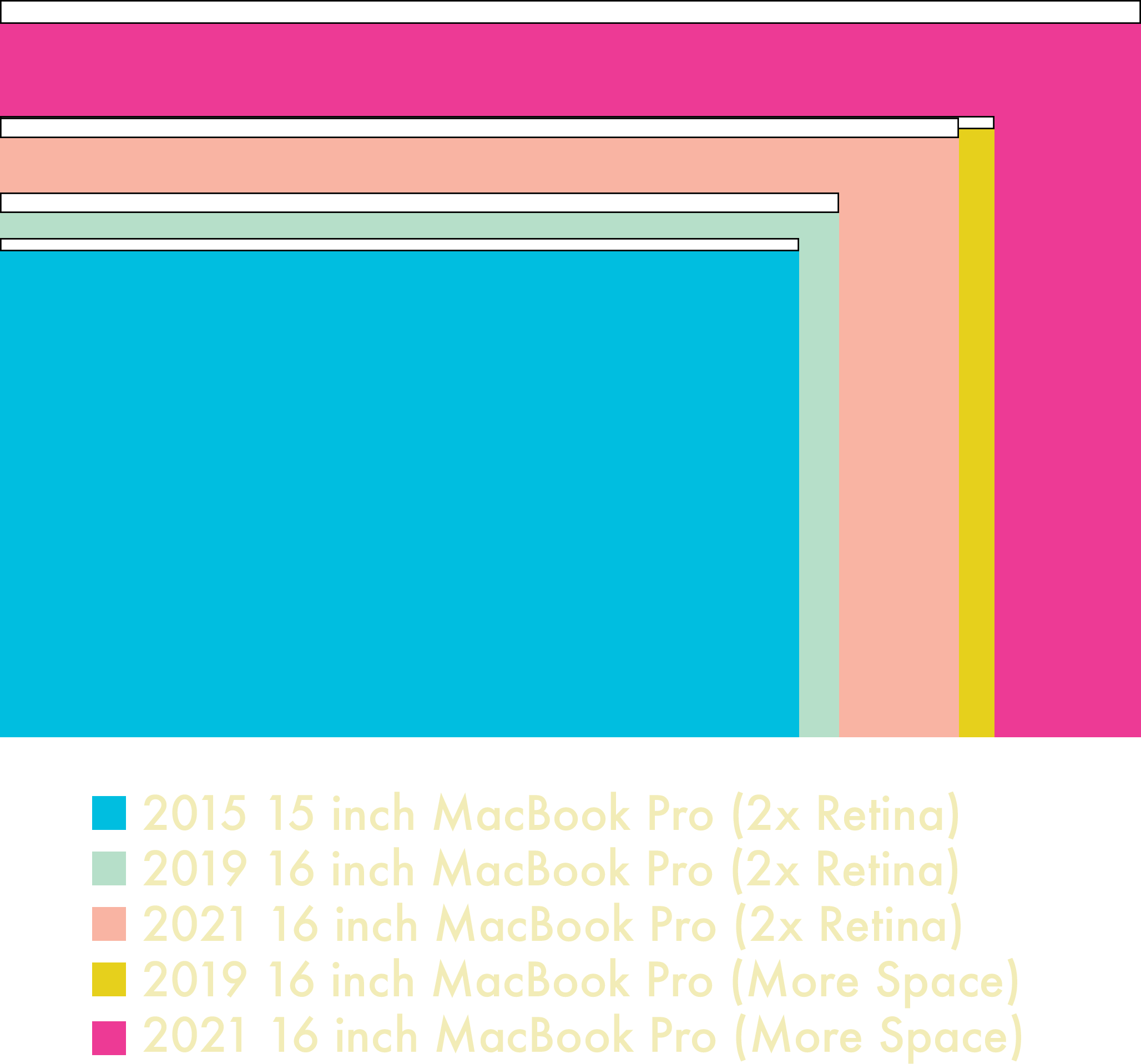

Why does resolution matter? If you’ve ever taken a photo from a random website, sent it to your printer, and been horrified by its lack of sharpness, congratulations—you’ve fallen prey to a low-res image. Computer displays historically have low resolution compared to printers, much to the consternation of graphic designers, typographers, tattoo artists, cake decorators, or anyone who just wants a high-fidelity image. An image designed for screens doesn't need as much pixel resolution as one that's going to be printed, because screens can't resolve that much detail. Files used for printing often require three to four times the resolution that your monitor is capable of displaying! So how can we put a high resolution image in a page layout or drawing application, and be sure it’ll be printed at full resolution?

That's where device-independent page description languages like PostScript and PDF come in. These languages bridge the gap between the chunky pixel layouts of a display and the fine, densely packed dots of a printer. By describing the logical elements of a page—like shapes, text, and images—as a program, PostScript and PDF abstract away messy device dependencies like pixel grids. It’s up to an interpreter to rasterize PostScript or PDF objects into a format the device can understand.

Some PostScript code describing an image. An interpreter must parse this code to render it for an output device.

Remember, pixels don’t tell you anything about the physical size of an image. How big is a six-hundred-by-six-hundred-pixel image, for instance? On a six-hundred-DPI printer... it's one square inch. One very crisp and sharp square inch, because your eye can't see the individual pixels. But if you opened that same image on a one-hundred DPI computer monitor, it would display at six inches by six inches... with very obvious individual pixels. So if you wanted it to show as one square inch on both the monitor and the printer, there has to be some way to tell both the computer and the printer how large the image should be.

Well, that way is the DPI value. Take that same six hundred by six hundred pixel image mentioned earlier, set its DPI to three hundred, and a page layout application will size it at two inches by two inches. A printer will also know that image should be two inches by two inches, and it'll paint the source pixels into the device pixels, after which ink pixels will embed themselves into paper pixels, so that you can look at it with your eyeball pixels. We could scale the image up or down, but that will make the DPI go down or up. The more pixels you can pack into the same area, the sharper the image will look when printed. This isn't the same as making it bigger. If you make the image bigger but don't have more pixels to back that up, you won't get more detail no matter how many times you yell ENHANCE at the computer.
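The arithmetic in the last two paragraphs is easy to sanity-check with a few lines of Python. This is only a sketch of the pixels-versus-inches relationship; the function names are made up for illustration and don't come from any real graphics library.

```python
def print_size_inches(pixels: int, dpi: float) -> float:
    """Physical length, in inches, of a run of pixels at a given DPI."""
    return pixels / dpi


def effective_dpi(pixels: int, inches: float) -> float:
    """DPI that results from stretching a run of pixels over a physical length."""
    return pixels / inches


# The six-hundred-pixel image from the text:
print(print_size_inches(600, 600))  # on a 600 DPI printer
print(print_size_inches(600, 100))  # on a 100 DPI monitor
print(print_size_inches(600, 300))  # tagged as 300 DPI in a layout app

# Scaling changes the DPI: stretch those 600 pixels across four inches.
print(effective_dpi(600, 4.0))

# Almops' color bar: ten pixels stretched across four inches.
print(effective_dpi(10, 4.0))
```

The last line is the "about two and a half" DPI figure from the inspector: the same pixel count divided by a much larger physical size.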

Given the barely-there resolution of almops' image, I wondered what would happen if it got a bit of help. I opened the image in Photoshop and resampled it to 100x1000, using the nearest neighbor algorithm to preserve its hard pixel edges. I saved my edits, updated the PDF, and reopened it in Preview. The gradient was gone! I was greeted with a nice column of colors that looked just like the original file did in Acrobat. Case closed, mystery solved! I posted a theory for the rainbowfying in the thread:

My guess is that when Quartz sees images like this, it has a special handling exception. Quartz creates a replacement true gradient blend with those pixels as the control points of the blend. My hunch is that this is used somewhere in Quartz for UI drawing performance reasons when using small raster elements, and because Preview is a Quartz renderer, well...

Trust me—if you eat, sleep, and breathe Mac graphics software, it almost makes perfect sense. No other viewer was doing something like this, so Quartz had to be doing something special and unusual. I even helped almops tweak their software to output a file that would never rainbow again—but we’ll come back to that later.

Objection!

As the weeks went by, I gradually lost confidence in this theory. I just couldn’t shake the feeling that there was a simpler explanation. The gradient shortcut theory sounded right, yes, but what evidence did I actually have? After all, the first version of Quartz was PDF version 1.4 compatible, and PDF had added support for gradient shfill objects back in PDF version 1.3. Why, then, would Apple use one-pixel strips as a shortcut for gradient generation? That didn’t make any sense. What was I missing? I had to reopen the case, reexamine the evidence, and figure out the truth.

What’s the piece of evidence that will blow this case wide open?

I compared myself to Holmes earlier, and maybe that was wrong too. No, maybe I’m more like Phoenix Wright, from the Ace Attorney games. Ace Attorney is about finding contradictions. You comb through crime scenes, present your evidence, and examine witness testimony. Even when you think you’ve found the culprit, your reasoning and deductions are constantly challenged. I had to accept that my initial conclusion could be wrong and look at the case from another angle—just like Phoenix Wright.

I recalled some complaints that Mac OS’ Preview application made certain images look blurry. Could that be related to the rainbow gradient problem? I opened a PDF file containing some classic Mac OS icons—first in Preview, then in Acrobat Pro. These icons were only 32 pixels by 32, but they were scaled up to fill a page. Acrobat displayed clean, sharp pixels while Preview was a blurry mess—a tell-tale sign of bilinear interpolation. I opened that one-pixel-wide color-bar image and resampled it to 100 pixels by 1000, but this time I used the bilinear algorithm. The result was a familiar rainbow. That’s when it hit me—Preview wasn’t using a nearest neighbor or matrix transformation, it was using a bilinear algorithm to smooth out the color values! How could I have missed this? It was right there the whole time! I sure hope somebody got fired for that blunder.

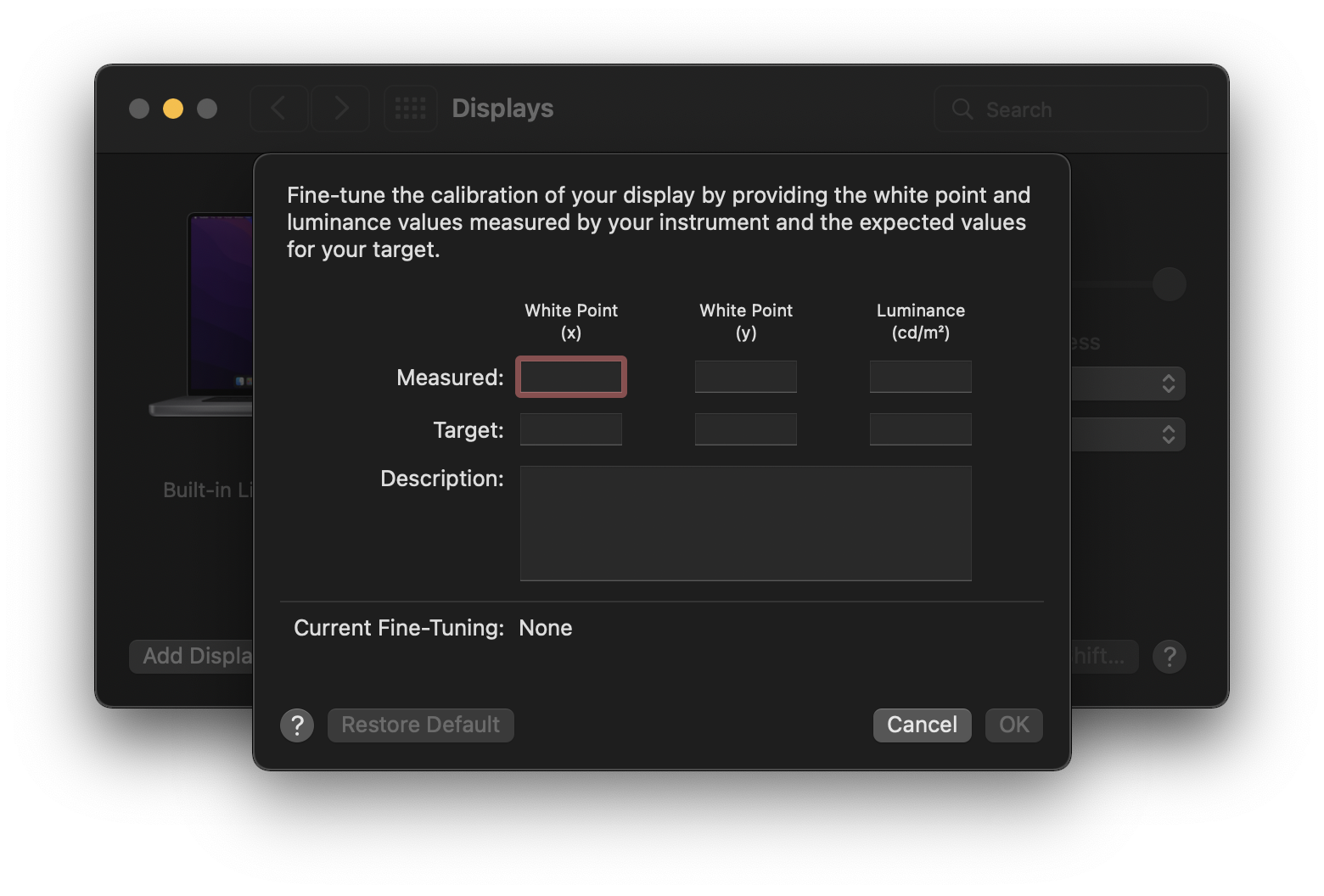

The last piece of the puzzle was to check if Quartz 2D was in fact modifying the image contents, or just displaying them with a filter. I dumped Quartz 2D’s output to a PDF file, using Mac OS’ built-in print to PDF function. I cracked the new file open with BBEdit, and scrolled to the image dictionary to examine the code. The image was still defined as one pixel wide by ten pixels tall, and it was still the same physical size. But there was a new wrinkle: when Preview interpreted the PDF, it added the interpolate flag to the PDF’s code and set it to true. I opened this new file in Acrobat Pro, and sure enough, there was a rainbow gradient instead of solid blocks of color. I’ve cracked the case, just like Phoenix Wright when—spoiler for the tutorial—he realized the clock wasn’t three hours slow, but nine hours fast! Cue the dramatic courtroom music.

Interpolation Interpretation

I hadn’t thought about the interpolate flag in years! But Quartz 2D is a PDF interpreter, and I should’ve known it was a possibility. Because PostScript and PDF are device independent, it’s up to the interpreter to scale the source pixels of the original image to the appropriate device pixels. Almops’ color bar consists of ten color swatches, each made of one image pixel and physically sized at four tenths of an inch. When viewed on a 100 DPI computer monitor, it would take forty device pixels along each edge to render one of those image pixels at the requested size. So where do all these new pixels come from?

Why, the computer makes them up, using the PIDOOMA method: Pulled It Directly Out Of My... uh, Algorithm. To scale one image pixel to forty device pixels, the PostScript or PDF interpreter uses a matrix transformation. Think of it like the paint bucket tool in an image editor—the interpreter samples the nearest source pixel’s color values and paints those values into the required device pixels. The interpreter calculates all the necessary values with a simple function that consumes a minimal amount of CPU cycles. Sounds great, doesn't it—but that efficiency has a cost, and the cost is image quality. If you've ever resized an actual photo using Photoshop's nearest neighbor algorithm, you know what I mean. When upscaling, continuous tone images like photographs look blocky or show jagged edges. When downscaling, fine details are smudged out, and you can get artifacts like moiré—that weird screen-door effect in repeating patterns.
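Here's roughly what that paint-bucket behavior looks like in code. This is a minimal sketch in plain Python, not what any real interpreter runs: the function name and the example colors are invented, and it only handles a one-pixel-wide column like almops' color bar.

```python
def upscale_nearest(column, out_height):
    """Upscale a one-pixel-wide column of colors with nearest-neighbor sampling."""
    src_height = len(column)
    result = []
    for y in range(out_height):
        # Map the center of device pixel y back into source-image coordinates.
        src_y = (y + 0.5) * src_height / out_height
        # Paint the device pixel with the source pixel that contains that point.
        result.append(column[min(int(src_y), src_height - 1)])
    return result


# Ten made-up RGB swatches standing in for the color bar.
bar = [(25 * i, 0, 255 - 25 * i) for i in range(10)]

# Four inches tall on a 100 DPI monitor means 400 device pixels.
device_rows = upscale_nearest(bar, round(4.0 * 100))

# Every swatch becomes a solid block of 40 identical rows; no new colors appear.
print(len(set(device_rows)), device_rows.count(bar[0]))
```

Nothing gets invented here beyond copies of the original ten colors, which is exactly why the blocks stay blocky.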

To solve these problems some very smart mathematicians invented resampling algorithms to smoothly resize raster images. If you've ever looked at what Photoshop's menus actually say, you might recognize terms like nearest neighbor, bilinear, and bicubic—they’re all different ways of filling in those missing pixels. Nearest neighbor is great for images that need hard edges, like retro video game sprites, but as mentioned earlier, it’s not great for images that need smooth color transitions. Bilinear is better for continuous tone images because it blends the four nearest pixels, two in each direction, to create smooth color transitions. Bicubic is even better for photos because it draws on a four-by-four neighborhood of sixteen pixels, creating a sharper image at the cost of more processor power. Wouldn’t it be cool if the printer’s interpreter could apply these fancier algorithms when scaling images to print them, so you wouldn't have to open Photoshop every single time? Then all our photos would be as smooth as the music of Steely Dan!
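To see why a smoothing filter turns a color bar into a rainbow, here's a small sketch of linear interpolation along a one-pixel-wide column. With only one column of pixels, bilinear filtering boils down to blending each device pixel between its two vertical neighbors. The function name and sample colors are made up, and this is an illustration of the general technique, not the filter Quartz or Photoshop actually uses.

```python
def upscale_linear(column, out_height):
    """Upscale a one-pixel-wide column of RGB tuples with linear interpolation."""
    src_height = len(column)
    result = []
    for y in range(out_height):
        # Position of this device pixel's center, measured between source pixel centers.
        pos = (y + 0.5) * src_height / out_height - 0.5
        # Clamp so the two ends of the bar keep their original colors.
        pos = min(max(pos, 0.0), src_height - 1.0)
        lo = int(pos)
        hi = min(lo + 1, src_height - 1)
        t = pos - lo  # 0.0 means all of the "lo" pixel, 1.0 means all of "hi"
        blended = tuple(
            round(a + (b - a) * t) for a, b in zip(column[lo], column[hi])
        )
        result.append(blended)
    return result


# Two swatches, red over blue, stretched to eight device rows.
rows = upscale_linear([(255, 0, 0), (0, 0, 255)], 8)
for row in rows:
    print(row)
```

The ends stay pure red and pure blue, but the rows in between are blends that never existed in the source image. Stretch ten swatches instead of two and you get almops' rainbow.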

The original image has been downsampled using nearest neighbor and bicubic methods. Notice the lack of jaggies on the bicubic example.

Adobe heard the demands for smoothness. They released the new and improved PostScript Level 2 in 1990, which added support for color graphics. Level 2 also added countless improvements for image objects, like the interpolate flag. Setting an image dictionary’s interpolate flag to true tells the interpreter to resample the image using a fancier algorithm like bilinear or bicubic. Even if your file had the flag set to false, you could override it at any time if the interpreter had options like “enable image smoothing.” Or the renderer could just ignore the flag entirely. The PDF and PostScript specs grant a lot of leeway to the interpreter in how it, well… interprets the interpolate flag. To wit, the PostScript Level 3 reference guide has this note at the end of interpolate’s definition:

Note: the interpolation algorithm is implementation-dependent and not under PostScript program control. Image interpolation may not always be performed for some classes of image or on some output devices.

A similar note can be found in the PDF reference guide.

NOTE: A conforming Reader may choose to not implement this feature of PDF, or may use any specific implementation of interpolation that it wishes.

This explains the difference between Adobe Acrobat and Apple’s apps. Acrobat follows the default behavior in Adobe’s spec: if the image object lacks the interpolate flag, Acrobat doesn’t apply any fancy algorithms when upscaling the image. When the flag is set to true, Acrobat applies a bilinear interpolation, which averages the values of adjacent pixels together when scaling the image. This blurs the single pixel values together and creates—you guessed it—a smooth rainbow gradient.

Acrobat respecting the PDF interpolate flag.

The original PDF file didn’t have any interpolate flags set, but Preview interpolated all images anyway—which, as per the reference guide, it's completely allowed to do. But what if I set the flag to false? I opened almops’ original PDF in BBEdit, added an interpolate flag with a value of false, saved it, and reopened the file in Preview. No dice—it was the same old rainbow gradient. Preview doesn’t care whether the flag is missing or false—it will always interpolate.
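If you'd rather not scroll through a PDF in a text editor, the same check can be scripted. The sketch below searches the raw bytes of a file for the Interpolate key that PDF image dictionaries use. It assumes the image dictionaries are stored as plain, uncompressed text, which was true of the files in this story but won't catch inline images tucked inside compressed content streams. The function name and the sample dictionary are invented for illustration.

```python
import re

# Matches "/Interpolate true" or "/Interpolate false" in raw PDF bytes.
INTERPOLATE_RE = re.compile(rb"/Interpolate\s+(true|false)")


def find_interpolate_flags(pdf_bytes):
    """Return a list of booleans, one per Interpolate entry found in the bytes."""
    return [m.group(1) == b"true" for m in INTERPOLATE_RE.finditer(pdf_bytes)]


# A hypothetical image dictionary resembling the one Preview wrote out.
sample = (
    b"<< /Type /XObject /Subtype /Image /Width 1 /Height 10 "
    b"/ColorSpace /DeviceRGB /BitsPerComponent 8 /Interpolate true >>"
)
print(find_interpolate_flags(sample))

# To check a real file, read it in binary mode first, for example:
#     with open("colorbar.pdf", "rb") as f:   # hypothetical file name
#         print(find_interpolate_flags(f.read()))
```

An empty list means no image in the file carries the flag at all, which was the state of almops' original PDF.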

I should’ve expected as much because Apple frequently uses interpolation in its own apps. Keynote, Numbers, and Pages apply interpolation to any images placed in your documents. Same goes for using Preview to view PDFs with embedded images. Images in Safari are interpolated when they’re scaled, usually because they lack high-res alternates. Parts of the operating system are constantly scaling, like growing icons in the Dock or dynamically scaled windows in Mission Control. Without interpolation, all those actions would be a rough, jagged mess. But does it make sense to always interpolate images in apps like the iWork suite? After all, look what happened to almops. Luckily, there is a way for almops to create PDFs that won’t go all rainbow in Keynote.

The Fix is In

If this was a one-off problem that wasn’t likely to happen again, I would just edit the image in the PDF, resize it with nearest neighbor to 100x1000 pixels, save the file, and call it a day. But that would just be a band-aid—I wanted a cure. After some research, I found a promising solution. Remember back at the beginning I mentioned that these color bars were created by a program called GMT, or generic-mapping-tools. GMT is an open source library of command line tools for generating maps and map related graphics, and a major feature is its scriptability. Unlike iWork or Preview, GMT has a lot of knobs to turn.

I knew nothing about GMT, so I Googled “GMT psscale options” and the first hit was the command’s official documentation. Turns out that there’s a flag for psscale that determines how it writes out the color bar! Everything hinges on the -N flag and its arguments. The first helpful argument is P. When the P argument is called, psscale draws the color bar components as a series of vector squares instead of as an image. This is the perfect solution for this scenario because vector objects are paths made out of points connected by curves or lines. Because they’re math and not pixels, vectors are infinitely scalable, and drawn at the device’s output resolution.

So if this option is available, why would you want to generate a color bar as an image? GMT recommends using an image for gradients—my guess is that they don’t write smooth shades as shfill objects. Luckily, the other argument is DPI, which does exactly what you think it does. When set, psscale will generate the image at the requested effective DPI. So if you need an image, you can set -N600 and it’ll generate the color bar at 600 DPI. Some interpreters also handle color management on raster versus vector objects differently, but that's a problem for its own episode. Lastly, if you’re using GMT’s Modern mode and you stumble upon this same problem, the same -N flag and arguments exist for the colorbar command.

The Final Cut

Well, there it is. Mystery solved—at least, for almops. I’d still like to talk to whoever it was at Apple who decided to force all images to interpolate in most of their own apps without small image exceptions. I know, I know—exceptions are a rabbit hole that’ll leave somebody unhappy. If I were to file a bug radar or feedback about this behavior, it would likely be closed with a “works as designed, won’t fix.” An anticlimactic end to an otherwise enjoyable investigation.

No matter how strange or inexplicable, there’s always a rational explanation—or, at least, an explanation—for why a piece of software behaves the way it does. Even the gnarliest of bugs—the ones that crash your computer and ruin your day—can be explained. It only takes the will to decipher the clues, and maybe a little stack tracing. What separates a bug from a glitch or unintended consequence? To someone unfamiliar with the fiendishly clever intricacies of software development, almops’ rainbow problem seems like a bug. Show the rainbow problem to a developer or product manager, and you'd get a different answer.

That’s why some of your software annoyances can hang on for so long. In the case of Preview and other Apple apps, they decided that always-on interpolation provides the best image quality for photos, which is what most images are. And you know what? I agree with them! Photos are the most common type of image, by a long shot. The only flaw in Apple's plan is that you can't turn it off when it doesn’t work. A few users complaining about the occasional blurry image, versus a lot of users complaining about jaggies and moiré, isn’t a hard choice. That's not to say that the occasional blurry image isn't something to be disappointed by—but that's the thing about compromises: they don't make everyone happy.

But this time I don’t have to worry about convincing some PM that their decision is a problem. There’s something nice about figuring out a computer mystery without job-related stakes. Yes, Preview’s still going to interpolate images even when it’s a bad idea, and I can’t change that. But I managed to solve the mystery and supply a solution to prevent it from happening again. As far as I’m concerned, my job is done. Now if only Preview could interpolate an end to this episode…