Dropbox Drops the Ball

You never know when you’ll fall in love with a piece of software. One day you’re implementing your carefully crafted workflow when a friend or colleague DMs you a link. It’s for a hot new utility that all the tech tastemakers are talking about. Before you know it that utility’s solved a problem you never knew you had, and worked its way into your heart and your login items. The developer is responsive, the app is snappy, and you’re happy to toss in a few bucks to support a good product. But as time goes on, something changes. The developer grows distant, the app eats up all your RAM, and you wonder if it’s still worth the money—or your love.

That’s my story with Dropbox, the app that keeps all your stuff in sync. I still remember the day—well, my inbox remembers the day. It was June 2nd, 2010, when my coworker Stephen strolled into my cubicle and said “Hey, I started using this Dropbox thing, you should check it out.” Stephen has a habit of understatement, so from him that's high praise. Minutes later I registered an account, installed the app, and tossed some files into my newly minted Dropbox folder. It was love at first sync, because Dropbox did exactly what it said on the tin: seamlessly synchronize files and folders across computers with speed and security. A public folder and right-click sharing shortcuts made it easy to share images, files, and folders with anyone at any time. I could shuttle documents back and forth from work without relying on a crusty old FTP server. This utility was a direct hit to my heart.

How Dropbox Beat Apple at File Sync

Of course, remote file sync wasn’t a new concept to me—I’d used Apple’s iDisk for years, which was one of many precursors to Dropbox. Mac users could mount an iDisk on their desktop and copy files to Apple servers with just the classic drag and drop. Applications could open or save files to an iDisk like any other disk drive. Yet despite this easy-breezy user interface, the actual user experience of iDisk left a lot to be desired. Let’s say you have a one megabyte text file. Your Mac would re-upload the entire one meg file every time you saved it to an iDisk, even if you only changed a single character. Today, "ooh we had to upload a full meg of text every time" doesn't sound like any sort of problem, but remember: iDisk came out in 2000. A cable modem back then could upload at maybe 512 kilobits per second—and yes, that's kilobits, not kilobytes. So a one-character change meant at least a sixteen-second upload, during which your app would sit there, unresponsive. And this was considered super fast, at the time—not compared to the immediate access of your local hard disk, of course, but trust me, dial-up was much, much worse. The sensible thing was to just download the file from your iDisk to your hard drive, work on it, and then copy it back when you were done, and that was no different than FTP.
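If you want to check that sixteen-second figure, here's a quick back-of-the-envelope sketch. It assumes binary units (a one mebibyte file over a 512 kibibit-per-second link) and ignores protocol overhead and latency, so treat it as a best case. The function name is mine, not anything Apple shipped.

```python
def upload_seconds(file_bytes: int, link_bits_per_sec: float) -> float:
    """Best-case transfer time: payload bits divided by link speed.

    Ignores protocol overhead, latency, and congestion (all assumptions).
    """
    return (file_bytes * 8) / link_bits_per_sec


ONE_MEG = 1024 * 1024          # one megabyte, assuming binary units
CABLE_UPLOAD = 512 * 1024      # 512 kilobits per second, assuming binary units

full_file = upload_seconds(ONE_MEG, CABLE_UPLOAD)
print(f"Re-uploading the whole file: {full_file:.1f} seconds")
```

Swap in decimal units and the answer drops slightly below sixteen seconds, which is close enough for the point to stand: a one-character edit cost you a quarter of a minute of staring at a frozen app.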

Needless to say, Apple felt they could do better. Steve Jobs himself announced major changes to iDisk in Mac OS 10.3 Panther at the 2003 WWDC Keynote.

“We’ve enhanced iDisk significantly for Panther. iDisk, as you know, is for our .Mac customers. The hundreds of thousands of people that signed up for .Mac. And iDisk has been a place where you can manually upload files to the .Mac server and manually download them. Well, that’s all changing in Panther, because in Panther we’re automatically syncing the files. And what that means is that stuff that’s in your iDisk will automatically sync with our servers on .Mac—in both directions—and it does it in the background. So what it really means is your iDisk becomes basically a local folder that syncs. You don’t put stuff in your iDisk to send it up to .Mac, you leave it in your iDisk. You can leave a document in your iDisk, open it up, modify it, close it, and the minute you close it, it will sync back up to .Mac in the background automatically.

So you can just leave stuff in your iDisk, and this is pretty cool. It’s a great way to back stuff up, but in addition to that it really shines when you have more than one computer. If I have three computers here, each with their own iDisk, I can leave a copy of the same document in the iDisk of each one, open up the document in one of those iDisks, change it and close it, and it’ll automatically sync back through .Mac to the other two. It’s really nice. In addition to this, it really works when you have untethered portables. You can be out in the field not connected to a network, change a document in your iDisk, the minute you’re connected whether you walk to an AirPort base station or hook back up to a terrestrial net, boom—that document and its change will automatically sync with .Mac.”

It’s hard not to hear the similarities between Steve’s pitch for the new iDisk and what Drew Houston and Arash Ferdowsi pitched for Dropbox. But even with offline sync, iDisk still had speed and reliability issues. And even after Apple finally ironed out iDisk’s wrinkles, it and iCloud Drive still trailed Dropbox in terms of features. Apple had a five-year head start. How could they lose to Dropbox at the "it just works" game?

Houston and Ferdowsi’s secret sauce was Dropbox’s differential sync engine. Remember that one meg text file from earlier? Every time you overwrite a file, Dropbox compares it against the previous version. If the difference is just one byte, then Dropbox uploads only that byte. It was the feather in the cap of Dropbox’s excellent file transfer performance. Its reliability and speed left iDisk in the iDust. Yet all that technowizardry would be worthless without an easy user experience. Dropbox’s deep integration into Windows Explorer and the Macintosh Finder meant it could integrate into almost any file management workflow. I knew at a glance when file transfers started and finished thanks to dynamic status icons overlaid on files and folders. Clumsy network mounts were unnecessary, because Dropbox was just a plain old folder. Best of all, it was a cross platform application that obeyed the rules and conventions of its hosts. I was so smitten with its ease of use and reliability that I moved a full gig of files from iDisk to Dropbox in less than a week.
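To make the differential idea concrete, here's a minimal sketch that assumes a simple fixed-size block comparison. This is not Dropbox's actual engine, which I haven't seen. The block size and function names are hypothetical, chosen only for illustration.

```python
import hashlib

BLOCK_SIZE = 4096  # hypothetical block size, chosen only for illustration


def block_hashes(data: bytes, block_size: int = BLOCK_SIZE) -> list[str]:
    """Hash each fixed-size block of the data."""
    return [
        hashlib.sha256(data[i:i + block_size]).hexdigest()
        for i in range(0, len(data), block_size)
    ]


def changed_blocks(old: bytes, new: bytes,
                   block_size: int = BLOCK_SIZE) -> dict[int, bytes]:
    """Return {block index: block bytes} for blocks that differ from the old version.

    A real sync engine would also have to send the new file length so the
    receiver can handle truncation; this sketch leaves that out.
    """
    old_hashes = block_hashes(old, block_size)
    delta = {}
    for index, offset in enumerate(range(0, len(new), block_size)):
        block = new[offset:offset + block_size]
        digest = hashlib.sha256(block).hexdigest()
        if index >= len(old_hashes) or old_hashes[index] != digest:
            delta[index] = block
    return delta


# The one meg text file from earlier, with a single character changed.
old = b"a" * 1_000_000
new = old[:500_000] + b"b" + old[500_001:]

delta = changed_blocks(old, new)
bytes_to_send = sum(len(block) for block in delta.values())
print(f"Blocks to upload: {len(delta)}, bytes to upload: {bytes_to_send}")
```

In this toy version a one-character edit still costs a whole block rather than a single byte, but that's a few kilobytes instead of the full megabyte, which is the gap that separated Dropbox from iDisk.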

Dropbox fulfilled iDisk’s original promise of synchronized web storage, and its public launch in September 2008 was a huge success. A free tier was available with two gigs of storage, but if you needed more space you could sign up for a fifty-gig Dropbox Plus plan at $9.99 per month. Today that same price gets you two terabytes of space. And Plus plans weren't just about storage space—paying users got more collaboration features, longer deleted file recovery times, and better version tracking. And yes, I realize that I'm starting to sound like an influencer who wants to tell you about this fantastic new product entirely out of pure unsullied altruism. Trust me, though—that’s not where this is going. Remember: first you fall in love, then they break your heart. Dropbox's core functionality was file syncing, and this was available to freeloader and subscriber alike.

Dropbox Giveth, and Dropbox Taketh Away

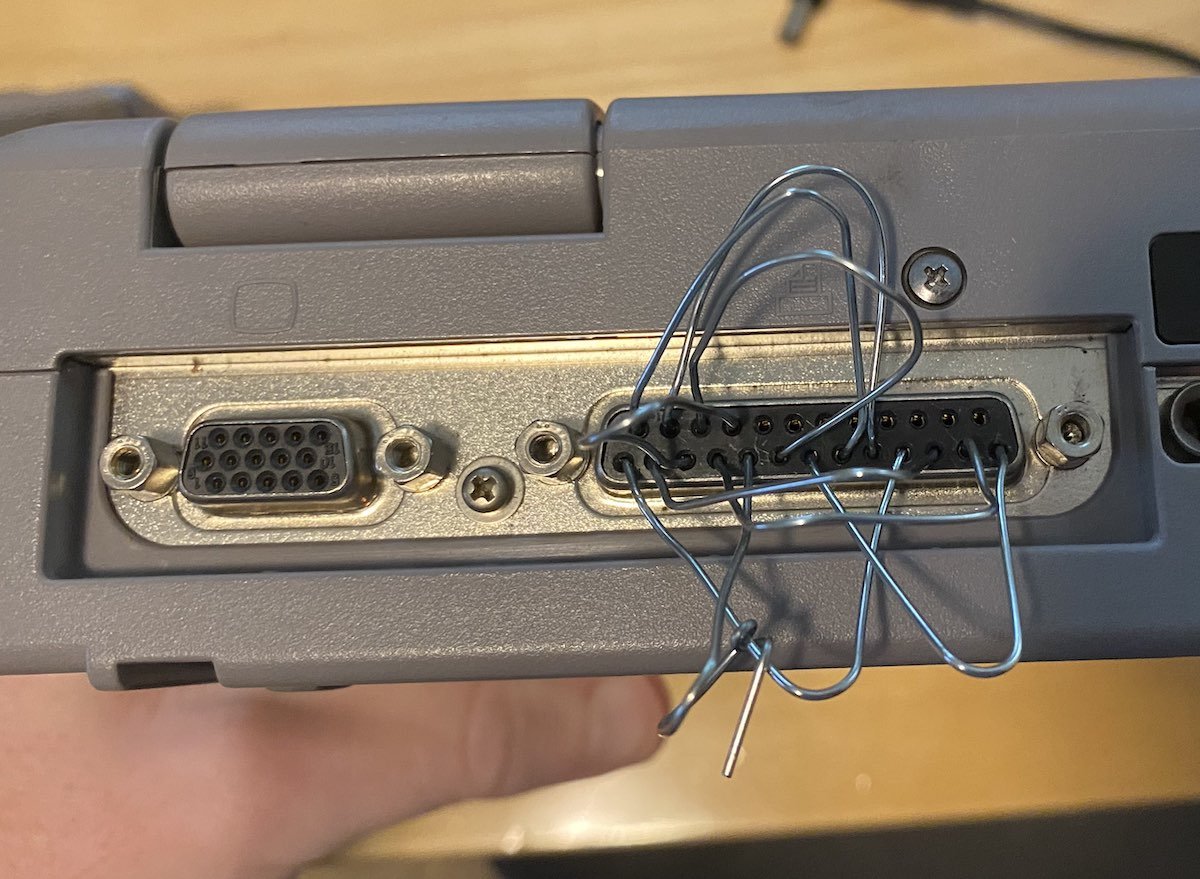

This isn’t an uncommon arrangement—business and professional users will pay for the space and version tracking features they need to do their jobs. But in March 2019, Dropbox dropped the number of devices linked to a basic free account from unlimited… to three. The only way to raise the device limit was upgrading to a Plus plan. Three devices is an incredibly restrictive limit, and basic tier users were caught off guard. My account alone had seven linked devices: iPhone, iPad, MacBook Pro, desktop PC, two work computers, and work phone. Dropbox’s intent with this change was clear—they wanted to shed unprofitable users. If a free user abandons Dropbox, that’s almost as helpful to their bottom line as that same user paying to upgrade.

Speaking of their bottom line, Dropbox Plus plan pricing actually went up to $11.99 per month soon after the device limit change. To keep a $9.99 per month price, you have to commit to a one year subscription. There are also no options for a lower priced tier with less storage—it’s two terabytes, take it or leave it. In comparison, Apple and Google offer $9.99 per month with no yearly commitments for the same two terabytes. Both offer 200 gigs for $2.99 per month, and if that’s still too rich they offer even cheaper plans. Microsoft includes one terabyte of OneDrive storage when you subscribe to Office 365 for $6.99 a month, and if you’re already an Office user that sounds like a sensible deal. If you’re a basic user looking for a more permanent home, the competition’s carrots look a lot better than Dropbox’s stick.

Even paying users might reconsider their Dropbox subscriptions in the wake of behavior that had left user-friendly far behind, and was verging on user-hostile. Free and paying users alike grumbled when Dropbox discontinued the Public folder in 2017, even though I understand why they cut it. People were treating the Public folder as a webhost and filesharer, and that was more trouble than it was worth. But compared to the device limit, killing the public folder was a minor loss. Photo galleries suffered the same fate. Technically savvy users were annoyed and alarmed when they noticed Dropbox aggressively modifying Mac OS security permissions to grant itself levels of access beyond what was reasonably expected. And even if paying users didn't notice the device limits or the public folder or the photo album or the security misbehaviors... they definitely noticed the new Dropbox client introduced in June 2019.

This is what Dropbox thought people wanted. From their own blog.

A zippy utility was now a bloated Chromium Embedded Framework app. After all, what's a file sync utility without its very own Chromium instance? While the new client introduced many new features, these came at the cost of resources and performance. Dropbox wasn’t just annoying free users, it was annoying paying customers by guzzling hundreds of megabytes of RAM and gobbling up CPU cycles. With an obnoxious new user interface and, for several months, irritants like an icon that wouldn't let itself be removed from your Dock, the new client made a terrible first impression.

The Apple Silicon Compatibility Kerfuffle

The latest example of Dropbox irritating customers is their lateness in delivering a native client for Apple’s new processors. Apple launched the first ARM-based Macs in November 2020, and developers had dev kits for months before that. Rosetta emulation allows the Intel version of Dropbox to run on Apple Silicon Macs, but emulation inflicts a penalty on performance and battery life. With no public timelines or announcements, users grew restless as the months dragged on. When Dropbox did say something, their response rang hollow. After hundreds of posts in their forums requesting an ARM-native client, Dropbox support replied with “[Apple Silicon support] needs more votes”—definitely not a good look. Supporting an architecture isn't a feature, it's part of being a citizen of the platform! Customers shouldn't have to vote for that like it's "add support for trimming videos," it's part of keeping your product viable.

Niche market software usually takes forever to support new architectures on Mac OS or Windows, but Dropbox hasn't been niche since 2009. I expect better from them. I’ve worked for companies whose management let technical debt like architecture support accumulate until Apple or Microsoft forced our hands by breaking compatibility. But our userbase was barely a few thousand people, and our dev teams were tiny. Dropbox has over fifteen million paying users (not counting the freeloaders), a massive R&D budget, and an army of engineers to spend it. The expectations are a bit higher. After multiple Apple-focused news sites highlighted Dropbox’s blasé attitude towards updating their app, CEO Drew Houston said that they hoped to be able to support Apple Silicon in, quote, "H1 2022.” More on that later.

Compare Dropbox’s response to other major tech companies like Microsoft and Adobe. Microsoft released a universal version of Office in December 2020—just one month after Apple shipped the first M1 Macs. The holy trinity of Adobe Creative Cloud—Photoshop, Illustrator, and InDesign—were all native by June 2021. Considering these apps aren’t one-button recompiles, that’s a remarkably fast turnaround. On the other hand, this isn’t the first rodeo for Microsoft and Adobe. Both companies lived through the PowerPC, Mac OS X, and Intel transitions. They know firsthand that botching a platform migration costs goodwill. And goodwill is hard to win back.

Dropbox is young enough that they haven’t lived through Apple’s previous architecture changes. Apple announced the start of the Intel transition in June 2005, and shipped Intel Macs to the public in January 2006. Dropbox's public launch wasn't until September 2008, and their app supported both Intel and PowerPC from the start. Before the Apple Silicon announcement, the closest thing to a “transition” that Dropbox faced was Apple dropping support for 32-bit apps in Mac OS Catalina. Fortunately, Dropbox was prepared for such a move: they'd added 64-bit support to the client in 2015, two years before Apple hinted at the future demise of 32-bit apps at WWDC 2017. When Catalina arrived in 2019 and axed 32-bit apps for good, Dropbox had nothing to worry about. So why is it taking so long to get Dropbox fully ARMed and operational—pun intended?

One culprit is Dropbox’s GUI. Dropbox uses Chromium Embedded Framework to render its JavaScript UI code, and CEF wasn’t Apple Silicon native until July of 2021. My issues with desktop JavaScript frameworks are enough to fill an entire episode, but suffice it to say Dropbox isn’t alone on that front. Some Electron-based apps like Microsoft Teams have yet to ship ARM-native versions on the Mac despite the OpenJS Foundation releasing ARM-native Mac OS artifacts in Electron 11.0 in November 2020. I get it: dependencies are a bear—or, sometimes, a whole family of bears. But this is a case where some honest roadmapping with your customers earns a lot of goodwill. Microsoft announced Teams’ refactoring to Edge WebView2 back in June, so we know something is coming. Discord released an ARM-native version in their Canary nightly build branch back in November. Compare that to Spotify, which also uses CEF. They too fell into the trap of asking for votes for support on issues raised in their forum. Even so, Spotify managed to get a native beta client out in July and a release version in September. CEF isn’t Dropbox’s only dependency problem, but it’s certainly the most visible. I’m sure there are plenty of Dropbox tech support people, QA engineers, and software devs who aren’t happy about the current state of affairs, and I’ve got plenty of sympathy for them. Because I’ve been in that situation, and it stinks. Paying customers shouldn’t have to complain to the press before they get an answer from the CEO about platform support.

The Cautionary Tale of Quark

Dropbox should heed the tale of Quark and its flagship app, QuarkXPress. Back in the nineties, most Mac users were printing and graphic arts professionals, and QuarkXPress was a crucial ingredient in their creative soup. Apple announced Mac OS X in January 2000, and the new OS would feature badly needed modernizations like preemptive multitasking and protected memory. But—and this might sound familiar—existing apps needed updates to run natively under the new OS. To expedite this, Apple created the Carbon framework for their long-time developers like Adobe, Microsoft, Macromedia... and Quark. Carbonizing was a faster, easier way to update apps for Mac OS X without a ground-up rewrite. Apple needed these apps for a successful OS transition, so it was in everyone’s interest for developers to release Carbon versions as fast as possible.

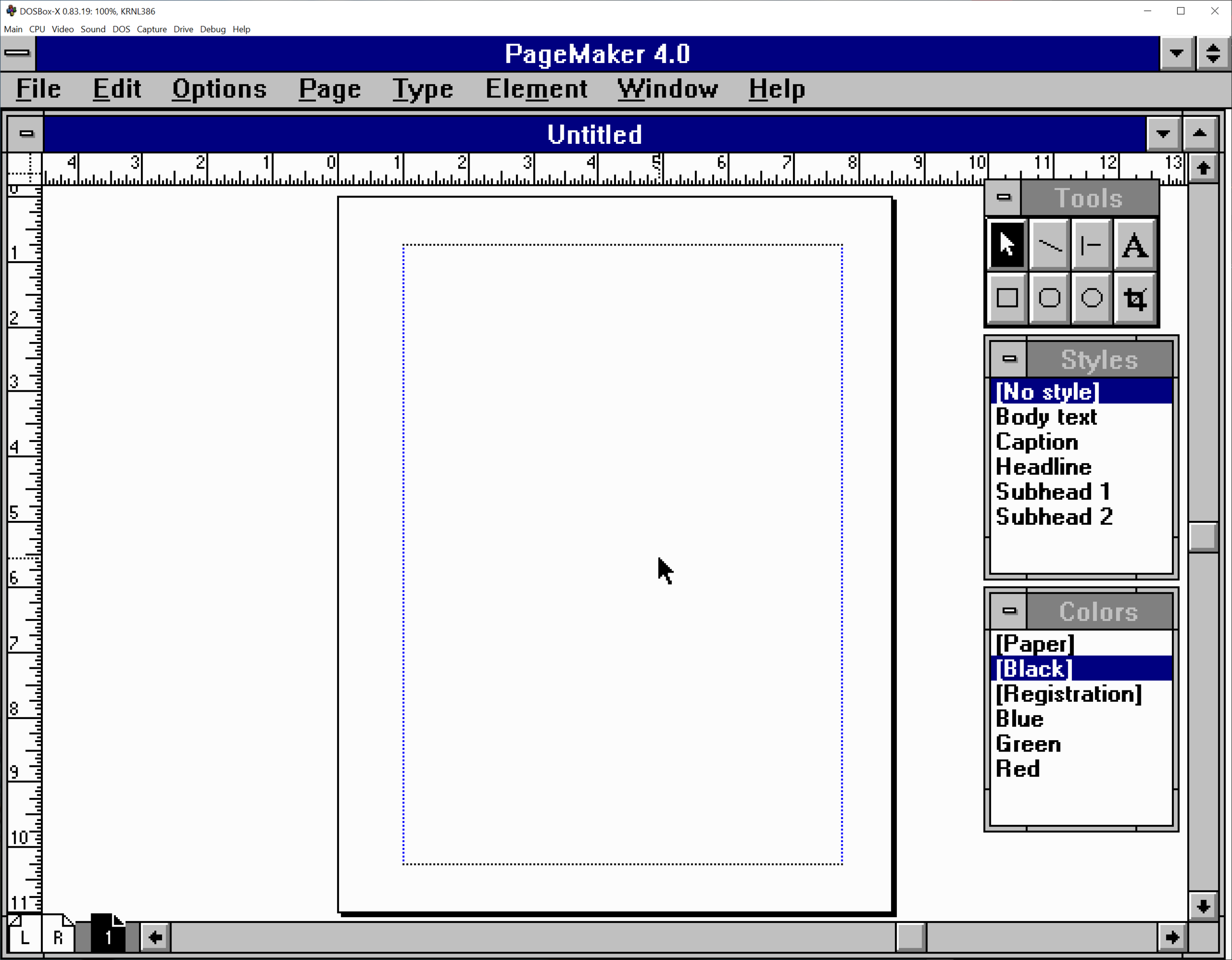

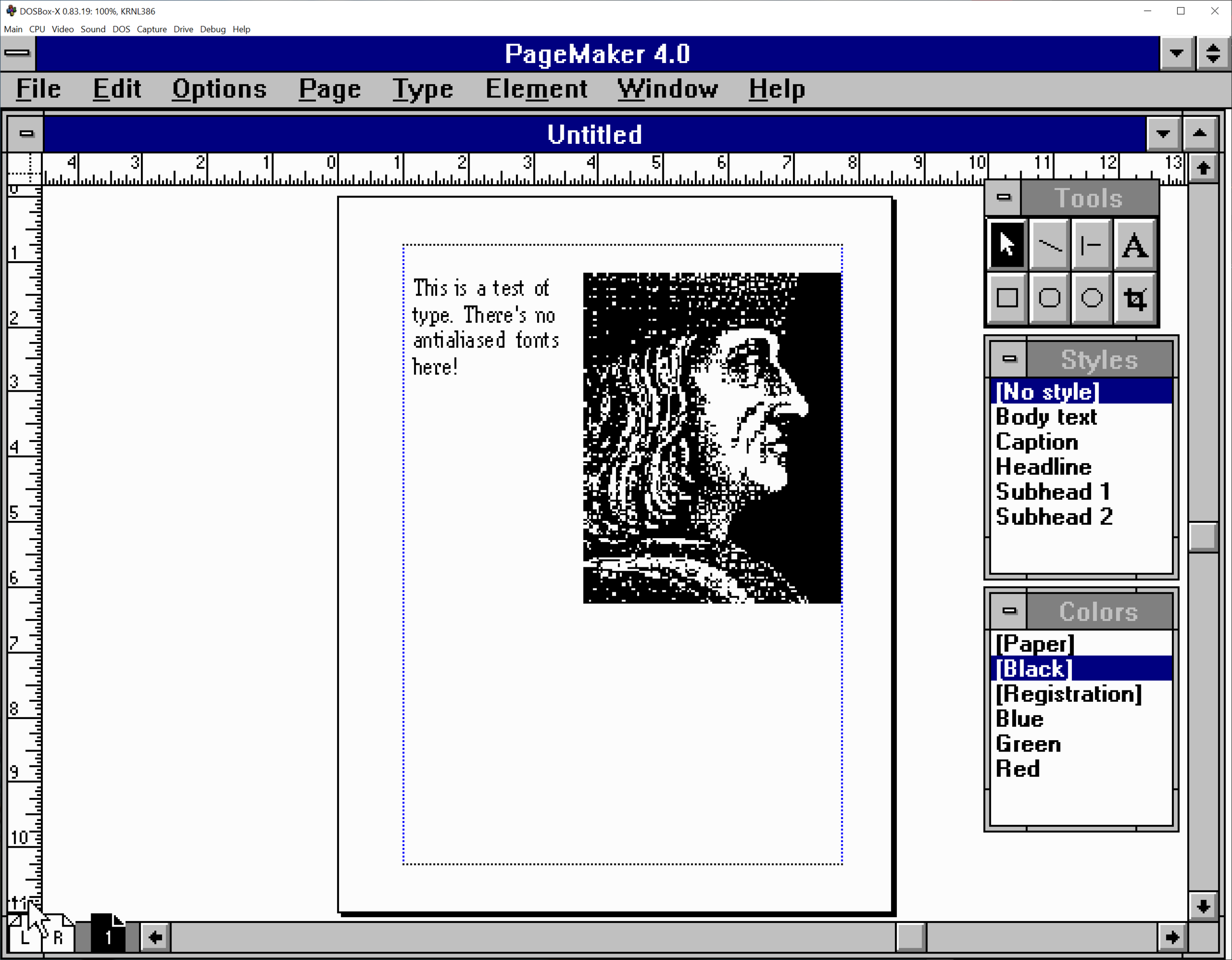

The Carbon version of XPress 5.0 previewed in Macworld.

How long did it take developers to release these updates? Remember, Mac OS 10.0 came out in March 2001, and it was very raw. Critical features like disc burning and DVD playback were missing in action. Even if some users could live without those features, it was just too slow to be usable day-to-day. It wasn't until the 10.1 update in September 2001 that you could try to use it on a daily basis, instead of poking at a few apps, saying "cool" and then going back to OS 9 to get some work done. So Microsoft’s release of Office v.X for Mac in November 2001 was timed perfectly to catch the wave of new 10.1 users. Adobe wasn’t doing the whole Creative Suite thing at the time, so apps were released on their own schedules. Adobe’s Carbon conversions started with Illustrator 10 in October 2001, InDesign 2.0 in January 2002, and Photoshop 7.0 in March 2002. Macromedia was one of the first aboard the OS X train, releasing a Carbon version of Freehand in May 2001. Dreamweaver, Fireworks, and Flash all got Carbon versions with the MX Studio suite in the spring of 2002. Even smaller companies managed it—Extensis released a Carbon version of their font manager Suitcase in November 2001!

One year after the launch of Mac OS X, a working graphic designer could have an all OS X workflow, except for, you guessed it... QuarkXPress. How long would Quark make users wait? Well, in January 2002, they released QuarkXPress 5.0… except it wasn't a Carbon app, and it only ran in classic Mac OS. Journalists at the launch event asked about OS X, of course, and Quark PR flack Glen Turpin promised the Carbon version of QuarkXPress would be here Real Soon Now:

“The Carbon version of QuarkXPress 5 will be the next upgrade. There’s one thing we need to do before the Carbon version of QuarkXPress 5 is released: We need to launch QuarkXPress 5.0 in Europe.”

Would you believe that Quark, a company notorious for slow and unpredictable development, never shipped that promised Carbon update for version 5.0? Quark customers had to wait until QuarkXPress 6.0 in June 2003 for an OS X native version. Users who'd bought 5.0 had to upgrade again. And users who'd stayed with 4.x got charged double the price of a 5.0 upgrade—and yes, that's for upgrading to 6. Ask me how I know. Quark’s unfashionable lateness to the OS X party was another log in the chimney fire of failing customer relations. Despite Quark's many virtues, they charged out the ear for upgrades and tech support, and their leadership was openly hostile to customers. Quark CEO Fred Ebrahimi actually said that if you didn't like Quark's support for the Mac, you could, and I quote, “Switch to something else.” He thought that meant QuarkXPress for Windows. What it actually turned out to mean was Adobe InDesign.

The moral of the story is that customer dissatisfaction can reach a tipping point faster than CEOs expect. You can only take users for granted for so long before they decide to bail. Quark squandered fifteen years of market leadership and never recovered. Dropbox isn’t the only cloud storage solution out there, and they’d be wise to remember that. Google Drive and Microsoft OneDrive have native ARM clients in their beta channels. Box—not Dropbox, just plain old Box—released a native client in November 2021. Backblaze also has a native client, and NextCloud’s next release candidate is ARM native too.

When I was writing this episode, I had no idea when Dropbox would finally deliver an ARM-native client. The only clue I had was Houston’s tweet about the first half of 2022. At the time, I thought that “first half” could mean January. It could mean June. It could mean not even by June. Your guess would have been as good as mine. In my final draft I challenged Dropbox to release something in the first quarter of 2022. Imagine my surprise when just before I started my first recording of this episode, Dropbox announced an upcoming beta version supporting Apple Silicon. This beta was already in the hands of a small group of testers, and was released to the public beta channel on January 13. I had to make a few… minor revisions to this after that. There’s still no exact date for a full final version—I’ll guess, oh, springtime. Even though that challenge wasn’t published yet, I still wrote it, and pretending I didn’t would be dishonest. I am a man of my word—you got me, Dropbox. Still, that doesn’t make up for poor communication and taking your users for granted. You still got work to do.

My Future with Dropbox and Comparing the Competition

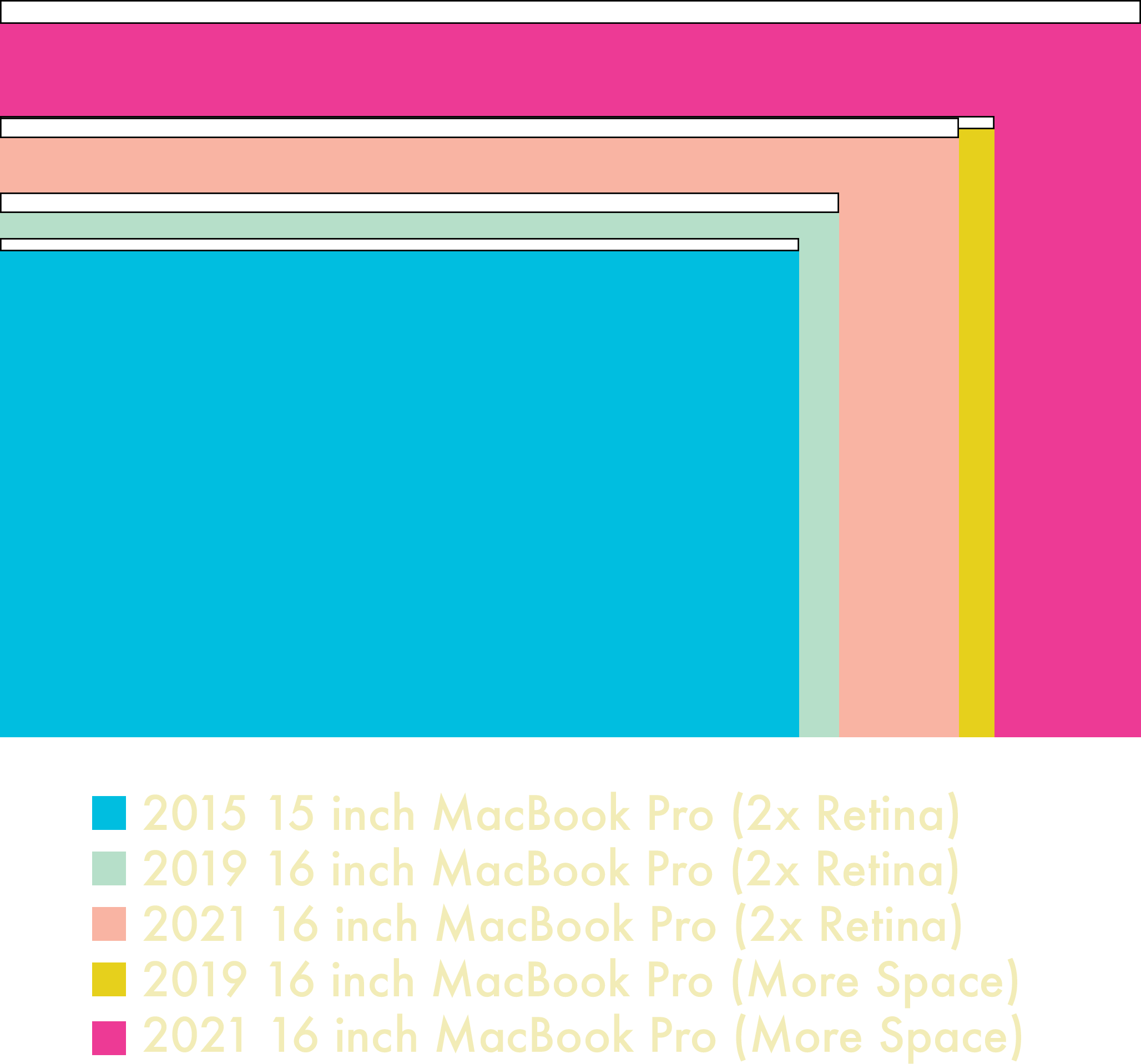

Before my fellow nerds start heckling me, I know Mac users aren’t the majority of Dropbox’s customers. Windows users significantly outnumber Mac users, and their business won’t collapse if Mac users leave en masse. But like dropping client support for Linux, it’s another sign that Dropbox is starting to slip. You have to wonder what woes might befall Windows customers in due time. After all, Dropbox has yet to ship ARM binaries for Windows, which is a problem if you're using an ARM Windows device like a Microsoft Surface or virtualizing Windows on ARM. If you really want to access Dropbox on an ARM Windows device, you’re forced to use Dropbox’s tablet app, and that’s not quite right for a cursor and keyboard environment.

Amidst all this anguish about clients, I do want to emphasize that Dropbox’s core competency—hosting, storage, and syncing—is still very good. After all, the client might be the most visible part of a cloud-based storage system, but there's still… you know… the cloud-based part. People are willing to put up with a certain amount of foibles from a client as long as their content syncs and doesn't disappear, and Dropbox's sync and web services are still top of the line. Considering how long it took Apple to get iCloud Drive to a reasonable level of service, that competency has a lot of value. External APIs bring Dropbox integration to other applications, and if you've still got a standalone 1Password vault, Dropbox will still be useful. All these factors make it hard to disentangle Dropbox from a workflow, and I get why people are waiting and won’t switch unless absolutely necessary.

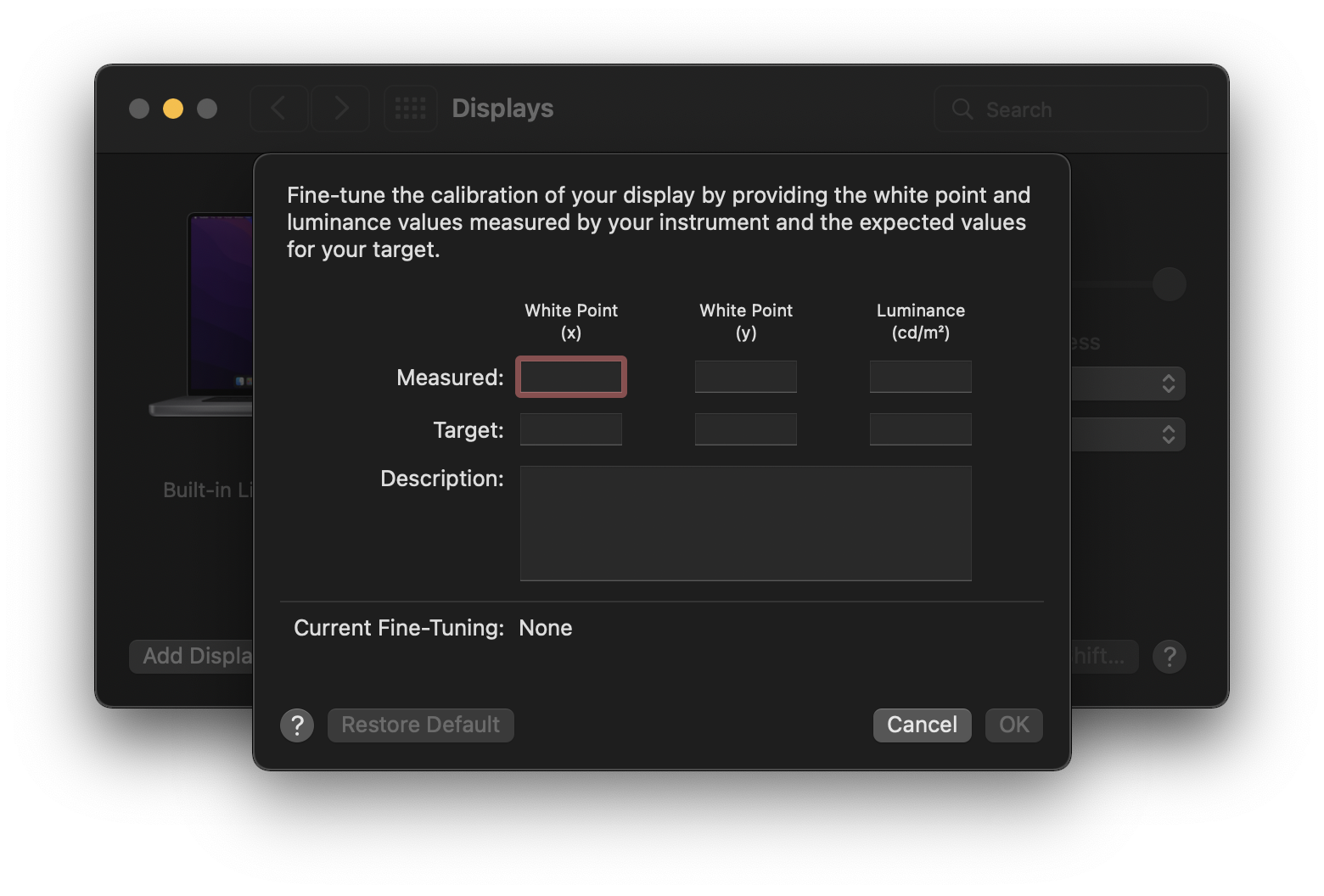

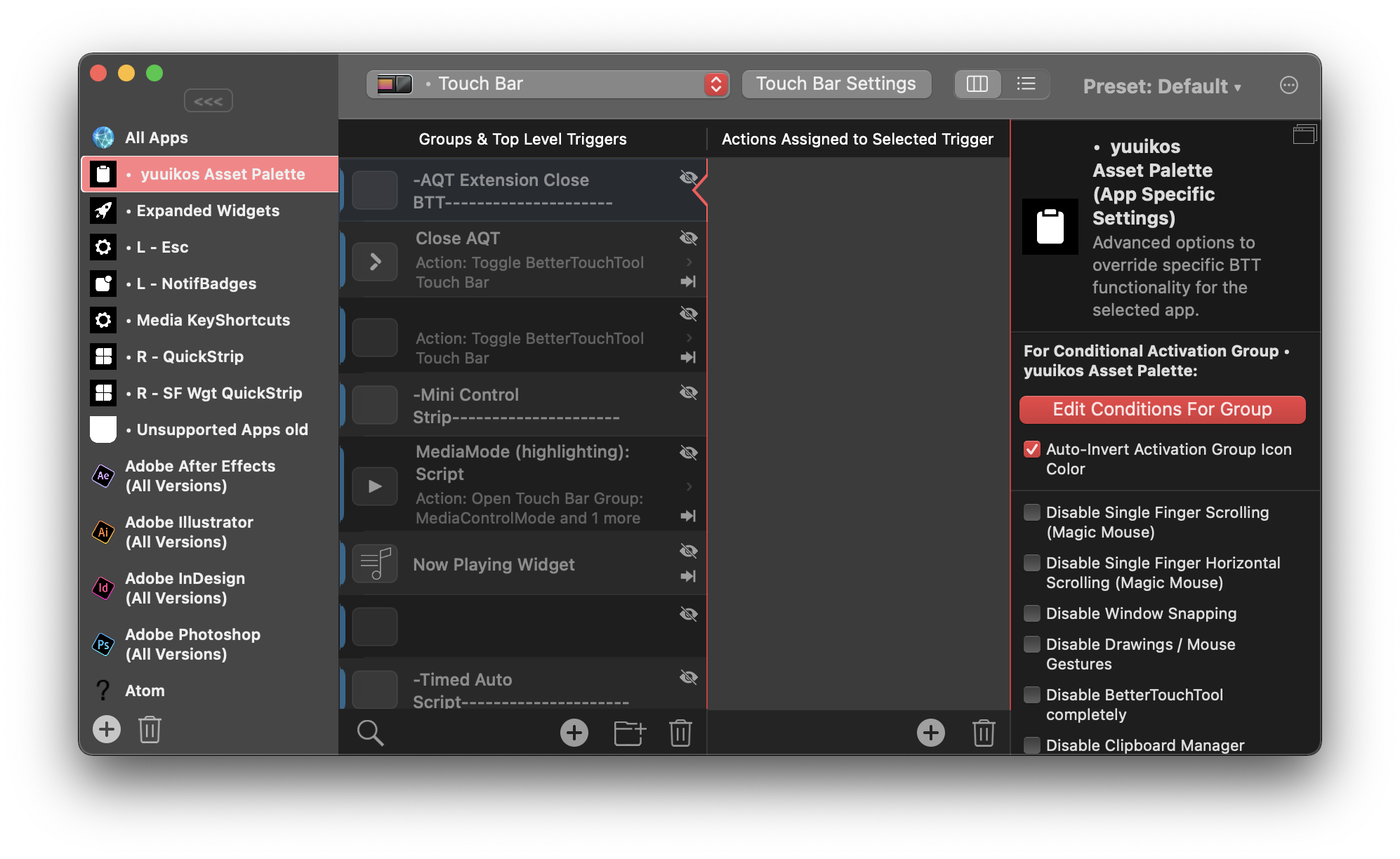

So what’s the plan? For now, I’ve switched to Maestral, a third party Dropbox client. Maestral runs natively on Apple Silicon and consumes far fewer resources than the official client. While Maestral syncs files just fine, it does sacrifice some features like icon overlays in the Finder. I also signed up for Apple’s 50 gigabyte iCloud plan, and in my mixed Mac and Windows environment it works pretty well. And it’s only a fraction of the price of Dropbox. iCloud’s syncing performance is satisfactory, but it still lags when it comes to workflow. Take a simple action like copying a share link. Apple’s share sheet is fine as far as interfaces go, but I don’t need to set permissions all the time. Just give me a simple right click option to copy a public link to the file or folder, please. As for Google Drive, their client software has been an absolute disaster every time I’ve used it, regardless of whether it’s on Mac or Windows. Microsoft OneDrive seems reasonable so far, but I haven’t subjected it to any kind of strenuous tests. If push comes to shove, I’ll probably go all-in on iCloud.

This is complete overkill when most of the time you just need to copy a public link.

I miss what Dropbox was a decade ago, and I’m sad that it might end this way. It’s not over between us yet, but the passion’s dying. Without a serious turn-around, like a leaner native client and cheaper plans, I’ll have a hard time recommending them. It’s not my first software heartache, and I doubt it'll be my last, but I’d hoped Dropbox would be different. Naive of me, maybe, but Dropbox won’t shed any tears over me. Maybe the number of people I've signed up for their paid service balances out my basic account use over the years. Enthusiasm for Dropbox has all but dried up as they’ve prioritized IPOs and venture capital over their actual users. It’s that old Silicon Valley story—you either die the hero, or live long enough to become the venture capital villain. In the meantime, I’m sure there’ll be another cute utility that’ll catch my eye—and yes, that sounds flirtatious and silly. I began this episode with a “boy meets program” metaphor, but everybody knows that fairy tales are just that—fairy tales. Relationships take work, and that includes customer relationships. If one half isn't upholding their side, maybe it's time to move on.

It's not impossible that Dropbox could win me back... but it's more likely that I'll drop them.